You send a survey. Half your audience ignores it. The other half quits halfway through.

Sound familiar?

Survey fatigue is killing your response rates and destroying data quality. When participants feel overwhelmed by questionnaires, they either abandon them entirely or rush through with meaningless answers.

The result? Unreliable feedback that misleads rather than informs.

This guide shows you how to avoid survey fatigue and increase response rates through proven strategies. You’ll learn why respondents drop off, which question types reduce cognitive load, and how to optimize survey length for maximum completion.

Whether you’re running customer satisfaction surveys, employee engagement surveys, or market research, these techniques will transform your feedback collection.

What is Survey Fatigue?

Survey fatigue is the loss of motivation participants experience when completing questionnaires.

It causes respondents to abandon surveys, provide rushed answers, or skip questions entirely. A 2024 study published in the Journal of Caring Sciences identifies survey fatigue as a major limitation in questionnaire-based research, leading to careless responses and decreased survey responsiveness.

The result? Lower completion rates and unreliable feedback that can mislead business decisions.

Types of Survey Fatigue

Survey-taking fatigue happens when people receive too many survey requests over time.

Your audience gets overwhelmed before they even open your questionnaire. According to Marketplace reporting on Bureau of Labor Statistics data, response rates to the JOLTS survey dropped from 64% in 2017 to under 31% just five years later.

Survey-response fatigue occurs during a single survey when respondents grow tired of answering questions.

Research from SurveyMonkey shows that surveys exceeding 7-8 minutes see abandonment rates increase anywhere from 5% to 20%. Jotform data indicates surveys with 1-3 questions achieve 83.3% completion rates, while surveys with 15+ questions drop to just 41.9%.

Question fatigue results from repetitive or poorly worded questions that frustrate participants.

Badly designed questionnaires trigger this fastest.

Signs Your Respondents Are Experiencing Fatigue

Watch for these warning signals in your survey analytics:

- Straight-line responding (selecting the same answer repeatedly down a column)

- Increased “don’t know” or neutral responses in later sections

- Higher questionnaire abandonment rates as surveys progress

- Declining completion times per question

- Blank open-ended responses where participants skip text fields entirely

Research published in Scientific Reports found that straight-lining is more common among younger respondents and can introduce systematic errors that undermine data quality.

When you spot these patterns, your feedback forms need redesigning.
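Straight-lining is the easiest of these signals to check automatically. The sketch below is one minimal way to do it, assuming each respondent's answers to a rating grid are available as a list of integers; the function names and the four-item minimum are illustrative choices, not part of any survey tool's API.

```python
from typing import Sequence


def is_straight_lined(answers: Sequence[int], min_items: int = 4) -> bool:
    """Return True when every answer in a grid block is identical.

    Blocks shorter than `min_items` are ignored, because identical answers
    on two or three items are common among attentive respondents too.
    """
    if len(answers) < min_items:
        return False
    return len(set(answers)) == 1


def straight_lining_rate(responses: Sequence[Sequence[int]], min_items: int = 4) -> float:
    """Share of respondents (0.0 to 1.0) who straight-lined the block."""
    if not responses:
        return 0.0
    flagged = sum(is_straight_lined(r, min_items) for r in responses)
    return flagged / len(responses)


# Hypothetical answers to a five-item rating grid (1-5 scale).
grid_responses = [
    [4, 4, 4, 4, 4],  # straight-lined
    [5, 3, 4, 2, 4],
    [3, 3, 3, 3, 3],  # straight-lined
    [1, 2, 2, 4, 5],
]
print(f"{straight_lining_rate(grid_responses):.0%}")  # prints 50%
```

A rising rate in later grid blocks compared with earlier ones is a stronger fatigue signal than a high rate overall, since some respondents genuinely hold uniform opinions.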

Satisficing, where respondents give minimal effort just to finish, destroys your response quality. A study published on ScienceDirect found that each additional hour of survey time increases the probability a respondent skips a question by 10-64%.

Why Survey Fatigue Hurts Your Data Quality

Nonresponse bias becomes inevitable when only people with extreme opinions complete your surveys.

Those mildly satisfied or slightly dissatisfied simply drop off.

Your sample size shrinks, statistical significance suffers, and demographic representation skews toward whoever has patience for long questionnaires.

According to Userpilot, 67% of respondents have abandoned an ongoing survey due to fatigue. That’s two-thirds of your audience who have walked away from a survey partway through.

The rushed answers from fatigued participants misrepresent actual opinions, leading to flawed Voice of the Customer programs and misguided business strategies.

Your Net Promoter Score and Customer Satisfaction Score become unreliable metrics when respondent burnout taints the data.

Quick Reference: Survey Fatigue Impact

| Metric | Stat | Source |
|---|---|---|
| Survey abandonment rate | 67% have abandoned due to fatigue | Userpilot |
| Question skip increase | 10-64% per additional hour | ScienceDirect |
| Completion rate (1-3 questions) | 83.3% | Jotform |
| Completion rate (15+ questions) | 41.9% | Jotform |
| Response rate decline | 64% → 31% in 5 years | Bureau of Labor Statistics |

Actionable Steps to Reduce Survey Fatigue

Keep surveys under 10 minutes. Research from Kantar shows the “golden standard” is 10-12 minutes. Even better: aim for 5 minutes for customer satisfaction surveys.

Limit questions to 5-10. InMoment research shows 74% of customers are only willing to answer five questions or fewer.

Use skip logic. Kantar data shows routing can increase completion likelihood by 100-200% by making surveys more relevant.

Avoid matrix questions. Qualtrics research indicates grid formats invite straight-lining and are a false economy in survey design: they save space on the page at the cost of answer quality.

One question per page. This reduces the visual overwhelm that triggers fatigue.

Causes of Survey Fatigue

Over-Surveying

Sending frequent survey requests in short periods overwhelms your audience.

They see another feedback request in their inbox and immediately delete it. According to Marketplace reporting, the Bureau of Labor Statistics saw JOLTS survey response rates plummet from 64% to under 31% in just five years, largely due to general survey fatigue across all channels.

Only 9% of respondents take time to complete long questionnaires according to Customer Thermometer data. The rest have learned to ignore brands that ask too much, too often.

Space your market research requests appropriately based on relationship depth with each customer segment. Pointerpro research recommends waiting at least two months between survey invitations to the same recipient.

Long Surveys

Surveys exceeding 10 minutes see completion rates drop significantly compared to shorter versions.

Survicate data shows the impact clearly:

| Question Count | Completion Rate |
|---|---|
| 1-3 questions | 83.34% |
| 4-8 questions | 65.15% |
| 9-14 questions | 56.28% |
| 15+ questions | 41.94% |

SurveySparrow research confirms surveys over 12 minutes see three times more dropouts than those under 5 minutes.

Here’s the reality: InMoment found 74% of customers are only willing to answer five questions or fewer.

Your 30-question customer feedback collection form is dead on arrival.

Following best practices for creating feedback forms means cutting ruthlessly.

Poorly Designed Questions

Bad question design triggers immediate abandonment:

- Repetitive questions phrased differently (question fatigue)

- Confusing or ambiguous wording that requires too much thought

- Questions requiring cognitive load that exhausts participants

- Irrelevant questions that don’t match participant experience

Your question wording matters more than most realize.

SurveyMonkey research found that each additional word in question text has a negative effect on completion rates. Even after accounting for total words, the first question’s word count alone significantly predicts lower completion rates.

A single confusing item can cause someone to abandon the entire survey.

Strong form design principles apply equally to surveys.

Wrong Timing

Sending surveys at inconvenient times kills engagement before it starts.

SurveyMonkey research across 100,000 surveys found:

- Monday surveys receive 10% more responses than average

- Friday surveys receive 13% fewer responses than average

Multiple studies point to mid-morning (9 AM to 12 PM) as the peak window, when people are focused.

Deploy customer satisfaction surveys immediately after interactions while the experience stays fresh in memory.

For employee surveys, avoid Monday mornings and Friday afternoons. Monday as a whole can still perform well, though: SurveyVista data shows internal employee surveys sent on Mondays generate 13% more responses than those sent on other weekdays.

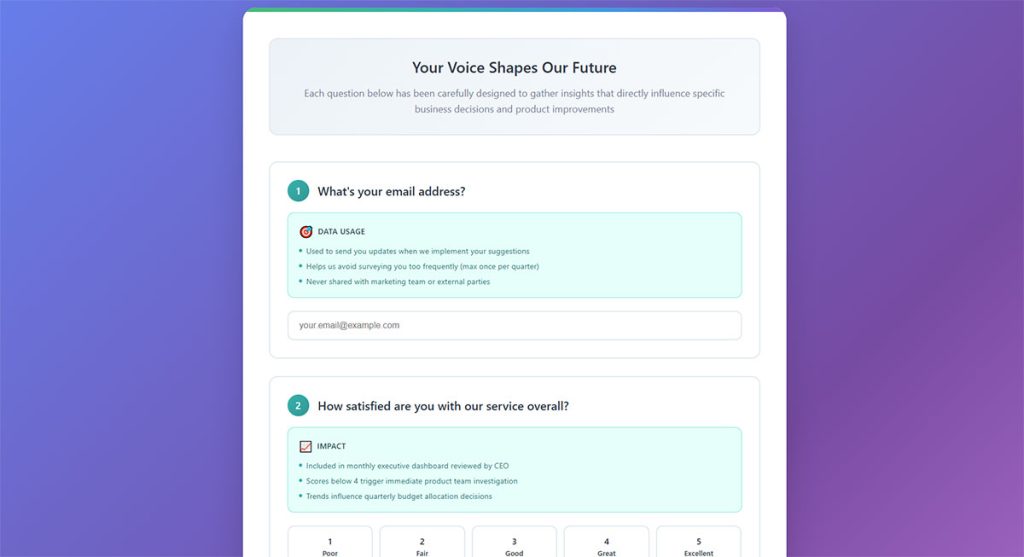

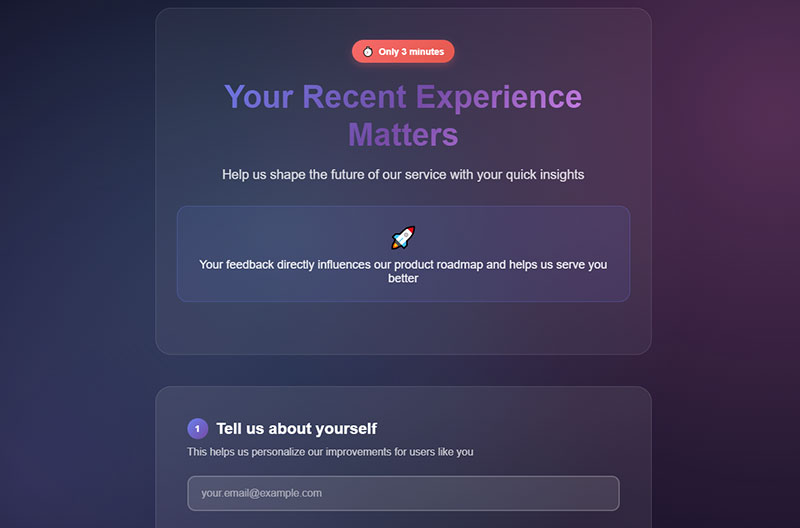

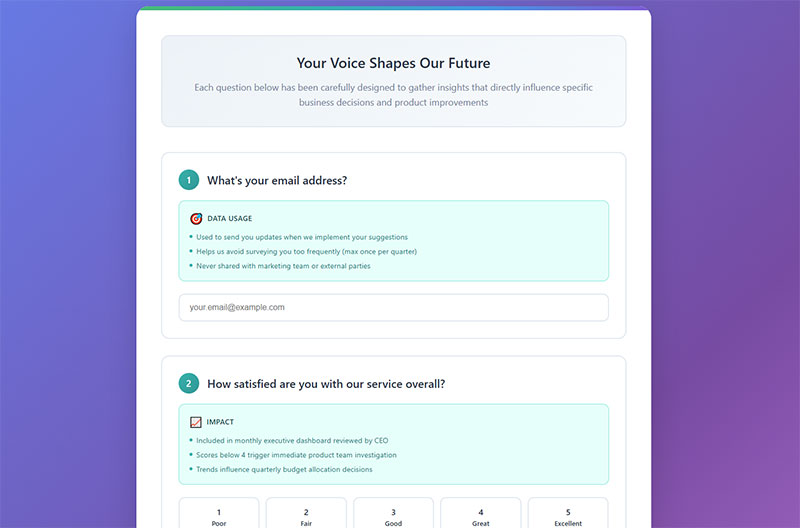

Lack of Purpose Transparency

Participants who don’t believe their responses will affect outcomes have zero motivation to complete surveys.

They’ve filled out countless questionnaires before and seen nothing change.

Research from MoldStud shows 75% of individuals are more likely to provide feedback when they understand their contributions lead to tangible improvements. TheySaid’s analysis across 50,000+ surveys found privacy statements alone can increase completion rates by up to 23% among enterprise respondents.

Tell respondents exactly:

- Why you’re asking

- How long it takes

- What you’ll do with their feedback

This transparency builds trust and increases participation rates dramatically.

How Long Should a Survey Be

The ideal survey length falls between 5-7 minutes, translating to roughly 10-15 questions.

Completion rates drop significantly after the 7-10 minute mark according to SurveyMonkey research. Their analysis of 100,000 surveys found abandonment rates increase 5-20% for surveys exceeding 7-8 minutes.

SurveySparrow research confirms surveys over 12 minutes see three times more dropouts than those under 5 minutes.

| Survey Type | Recommended Questions | Time Estimate |
|---|---|---|
| NPS/CSAT surveys | 2-4 questions | 2 minutes |
| Customer satisfaction survey | 5-10 questions | 5 minutes |
| Employee engagement survey | 10-15 questions | 7 minutes |
| Market research surveys | 15-20 questions | 10 minutes |
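
The table above can double as a pre-launch check on a draft. The following is a small sketch under that assumption: the upper bounds come from the "Recommended Questions" column, while the dictionary keys and the function name are hypothetical labels chosen for this example.

```python
# Upper bounds taken from the "Recommended Questions" column above.
# The dictionary keys are hypothetical labels chosen for this sketch.
MAX_QUESTIONS = {
    "nps_csat": 4,
    "customer_satisfaction": 10,
    "employee_engagement": 15,
    "market_research": 20,
}


def questions_to_cut(survey_type: str, question_count: int) -> int:
    """Return how many questions exceed the recommended maximum (0 if within budget)."""
    return max(0, question_count - MAX_QUESTIONS[survey_type])


print(questions_to_cut("customer_satisfaction", 30))  # prints 20
```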

Every additional 10 questions added to a survey decreases completion measurably. SurveyMonkey data shows:

- 10-question surveys: 89% completion rate

- 20-question surveys: 87% completion rate

- 40-question surveys: 79% completion rate

Qualtrics data shows respondents spend less time per question as surveys grow longer, indicating satisficing behavior kicks in around question 15. SurveyMonkey found respondents average 75 seconds on the first question but drop to just 19 seconds per question by questions 26-30.

When building a survey form, remove any question that doesn’t directly support your research goal.

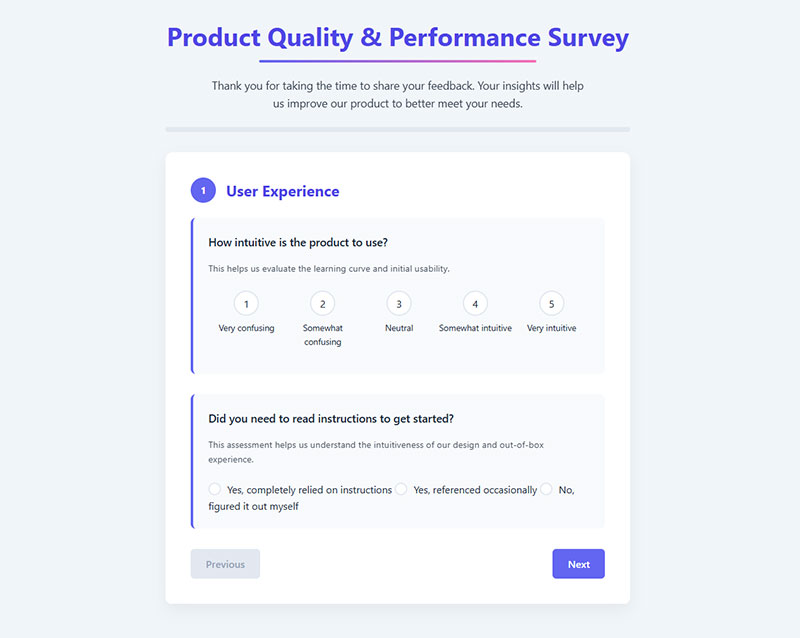

Survey Question Types That Reduce Fatigue

Rating Scale Questions

Quick to answer and easy to analyze.

Used in Net Promoter Score (0-10 scale), Customer Satisfaction Score (1-5 scale), and Customer Effort Score surveys.

Best for quantifiable data you can track over time with benchmark metrics.

The Likert scale works well when you need to measure agreement levels. Research from Babakus and Mangold found that 5-point scales increase response rate and response quality while reducing respondent frustration compared to longer scales.

Multiple Choice Questions

Provide structured options that respondents can select quickly.

Pew Research Center data confirms closed-ended questions result in significantly lower nonresponse rates than open-ended questions. Their average nonresponse rate for closed-ended questions hovers between 1% and 2%, compared to an 18% average for open-ended questions.

Include an “Other” option with a text field for comprehensive capture without limiting responses.

Understanding types of survey questions helps you choose the right format for each data point.

Binary Questions

Yes/No or thumbs up/down format creates the lowest cognitive load.

Best for quick pulse checks, filtering questions, and mobile surveys where screen space is limited.

Participants breeze through these without thinking twice.

Open-Ended Questions

Allow detailed feedback but require significantly more effort from respondents.

Pew Research found open-ended item nonresponse rates range from 3% to over 50%, with high-burden questions showing three times higher nonresponse than low-burden ones. SurveyMonkey research shows surveys starting with an open-ended question have 6 percentage points lower completion rates (83% vs 89%) than those starting with multiple choice.

Use sparingly, one or two per survey maximum.

Place after rating questions so participants can explain their scores if they choose.

These responses need manual analysis or sentiment analysis tools to process effectively.

Likert Scale Questions

Measure agreement levels from strongly disagree to strongly agree.

Research available on ResearchGate shows 5-point scales are less confusing and increase response rates compared to 7-point versions. However, MeasuringU analysis found 7-point scales are slightly more accurate for detecting nuanced opinions, particularly when you have fewer than 10 questions and large sample sizes.

The American Customer Satisfaction Index uses this format extensively for standardized measurement.

Keep scale labels consistent throughout your survey to prevent confusion and straight-line responding.

How to Reduce Survey Fatigue

Keep Surveys Short

Remove questions that don’t directly support your research goal.

Every additional 10 questions decreases completion rates measurably. SurveyMonkey data shows completion drops from 89% at 10 questions to 79% at 40 questions, so cut ruthlessly.

Ask yourself: do I really need this data point, or is it just nice to have?

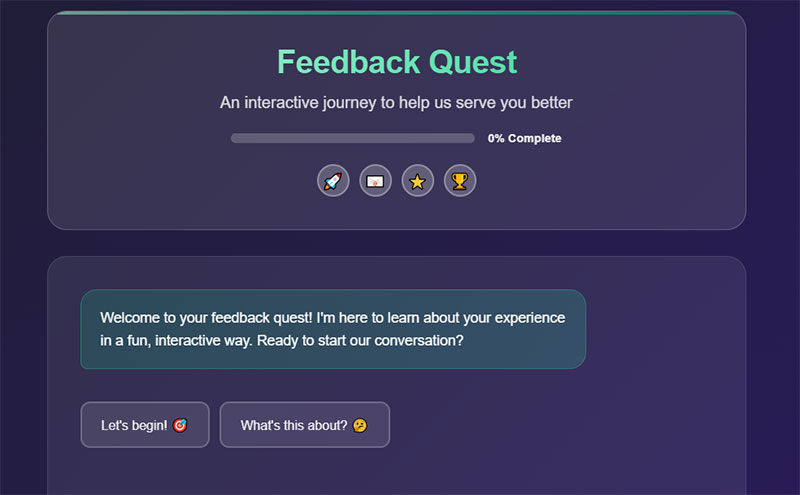

Use Skip Logic and Branching

Route respondents past irrelevant questions using conditional logic.

Kantar research shows this personalization increases completion likelihood by 100-200%. BlockSurvey data suggests well-implemented skip logic can increase completion rates by as much as 375% in some cases.

Nobody wants to answer questions about products they’ve never used.

Skip logic benefits according to QuestionPro research:

- Reduces effective survey length

- Lowers dropout rates from irrelevant questions

- Improves data accuracy through targeted questioning

- Creates personalized experience for each respondent
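
Conditional routing is simple to reason about once each question carries its own display rule. The sketch below shows one possible shape for that, assuming answers are stored as a dictionary keyed by question; the `Question` class, the `show_if` predicate, and the sample survey are all hypothetical and not taken from any particular survey platform.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Answers = Dict[str, str]


@dataclass
class Question:
    key: str
    text: str
    # Predicate deciding whether to show the question; None means always show.
    show_if: Optional[Callable[[Answers], bool]] = None


def next_question(questions: List[Question], answers: Answers) -> Optional[Question]:
    """Return the first unanswered question whose condition is met, or None when done."""
    for q in questions:
        if q.key in answers:
            continue
        if q.show_if is None or q.show_if(answers):
            return q
    return None


# Hypothetical three-question survey: the follow-up only appears for app users.
survey = [
    Question("uses_app", "Have you used our mobile app?"),
    Question(
        "app_rating",
        "How would you rate the app (1-5)?",
        show_if=lambda a: a.get("uses_app") == "yes",
    ),
    Question("recommend", "How likely are you to recommend us (0-10)?"),
]

answers = {"uses_app": "no"}
print(next_question(survey, answers).key)  # prints recommend
```

Someone who answers "no" to the first question never sees the app rating question, so their effective survey length drops from three questions to two.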

Send at the Right Time

Deploy surveys immediately after interactions while the experience stays fresh.

SurveyMonkey research across 100,000 surveys found Monday surveys receive 10% more responses than average, while Friday surveys receive 13% fewer responses.

Best times according to multiple studies:

- Mornings (9 AM-12 PM): Peak engagement when focus is highest

- Mid-week (Tuesday-Thursday): Higher completion rates than Monday or Friday

- Within 24-48 hours of interaction: Delighted recommends this window for transactional surveys

Explain the Purpose

Tell respondents why you’re asking, how long it takes, and how their feedback gets used.

Research from MoldStud shows 75% of individuals are more likely to provide feedback when they understand their contributions lead to tangible improvements. TheySaid analysis found clear privacy statements increase completion rates by up to 23%.

Transparency builds trust and directly increases participation rates.

People complete surveys when they believe their input matters.

Limit Survey Frequency

Avoid surveying the same audience repeatedly in short periods.

Best practice frequency guidelines:

| Audience Type | Recommended Frequency |
|---|---|
| B2B customers | Quarterly (every 90 days) |
| B2C customers | 2x customer interaction frequency |
| NPS relationship surveys | Semi-annually to quarterly |
| Transactional surveys | Immediately after interaction |

AskNicely recommends surveying customers no more than every 90 days to avoid fatigue while still touching each customer once per quarter.

Qualtrics research confirms that surveying more often than quarterly puts you at high risk of survey fatigue, especially if you also send transactional surveys.

Over-surveying trains people to ignore your brand entirely.
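
A cooldown check before each send is one way to enforce the 90-day guideline for relationship surveys. This is a minimal sketch that assumes you store the date each contact was last surveyed; `can_survey` and `COOLDOWN_DAYS` are hypothetical names, and transactional surveys would bypass the check because they follow a specific interaction.

```python
from datetime import date, timedelta
from typing import Optional

COOLDOWN_DAYS = 90  # quarterly cadence from the guidelines above


def can_survey(last_surveyed: Optional[date], today: date,
               cooldown_days: int = COOLDOWN_DAYS) -> bool:
    """True when the contact was never surveyed or the cooldown has elapsed."""
    if last_surveyed is None:
        return True
    return today - last_surveyed >= timedelta(days=cooldown_days)


# Hypothetical contact last surveyed on 15 January 2024.
print(can_survey(date(2024, 1, 15), date(2024, 3, 1)))   # prints False (46 days)
print(can_survey(date(2024, 1, 15), date(2024, 4, 14)))  # prints True (90 days)
```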

How to Increase Survey Response Rates

Personalize Your Invitations

Address respondents by name and reference specific interactions or purchases.

Qualtrics research shows personalization increases response rates by up to 48%. A 2024 study published in PMC found personal invitations achieved a 40.2% response rate compared to just 25.3% for generic link groups.

Pointerpro research confirms personalized invites (using “Dear John” vs. “Dear Customer”) increased response rates by 7.8% and reduced dropout by 2.6%.

Generic blast emails get deleted; personalized requests get answered.

Use Clear Subject Lines

State the time commitment and any incentive upfront.

| Subject Line Type | Performance |
|---|---|
| Strong: “2-minute survey for a $30 Amazon gift card” | Higher open rates |
| Weak: “We’d love your feedback!” | Lower engagement |

Pointerpro data shows avoiding the word “survey” in subject lines can increase response rates by 10%. Gofraze research found personalized subject lines boost open rates by 26%.

Send Reminder Emails

Response rates remain above 20% through the fourth reminder according to Panorama Education analysis.

Qualtrics research shows well-timed reminders can increase response rates by up to 36%. One study found extra reminders raised total response rates by 6.1 percentage points among initial non-responders.

Best practices:

- First reminder: 2-3 days after initial invite

- Second reminder: 2 days before deadline

- Final reminder: Day of deadline

- Limit to 2-3 reminders total to avoid annoyance

Only contact those who haven’t completed the survey. Use fresh language with each follow-up.
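
The reminder cadence above can be turned into dates with a few lines of code. The sketch below assumes a known send date and deadline and picks three days for the first reminder (the upper end of the 2-3 day range); `reminder_schedule` and its parameter names are hypothetical.

```python
from datetime import date, timedelta
from typing import List, Tuple


def reminder_schedule(sent: date, deadline: date,
                      first_after_days: int = 3) -> List[Tuple[str, date]]:
    """Build the cadence: a first reminder a few days after the invite,
    a second one 2 days before the deadline, and a final one on the deadline.

    A reminder is dropped unless it falls strictly after the previous send,
    which keeps short survey windows from producing duplicate or
    out-of-order messages.
    """
    candidates = [
        ("first", sent + timedelta(days=first_after_days)),
        ("second", deadline - timedelta(days=2)),
        ("final", deadline),
    ]
    schedule = []
    previous = sent
    for label, when in candidates:
        if previous < when <= deadline:
            schedule.append((label, when))
            previous = when
    return schedule


# Hypothetical two-week survey window.
for label, when in reminder_schedule(date(2024, 6, 3), date(2024, 6, 17)):
    print(label, when.isoformat())
```

Before each send, filter the recipient list down to people who have not yet completed the survey, as recommended above.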

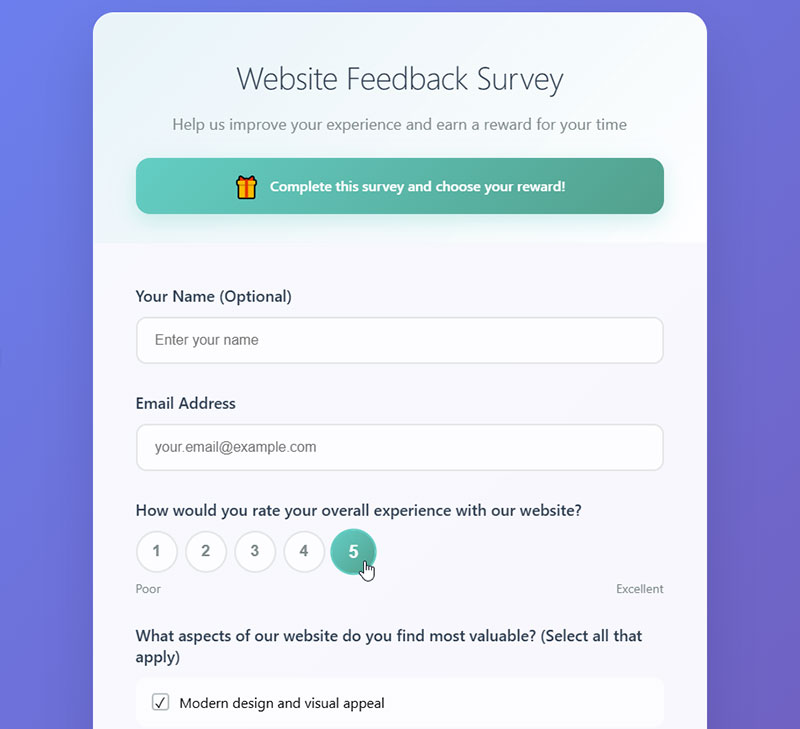

Offer Meaningful Incentives

Compensation increases completion rates dramatically.

Research indexed in PMC shows moving from uncompensated to compensated surveys raises completion from 54% to 71%. Zonka Feedback data indicates incentives can increase response rates by up to 19.1% for monetary rewards and 7.9% for non-monetary rewards.

Gift cards, discounts, and prize draws all work. Pointerpro found guaranteed rewards outperform lottery-style prizes.

Match incentives to your audience’s preferences.

Make It Mobile-Friendly

SurveySparrow reports 60% of survey responses now come from mobile devices.

Follow mobile forms best practices:

- Large tap targets (minimum 44×44 pixels)

- Minimal scrolling

- Simple question formats

- One question per screen when possible

A survey that’s painful on phones loses a significant portion of potential responses.

Close the Feedback Loop

Share survey results with participants after collection ends.

Qualtrics emphasizes that providing feedback on results helps close the loop and demonstrates the value of the respondent’s contribution. This builds trust for future surveys.

When people see their input made a difference, they respond to future surveys willingly.

This single habit builds long-term respondent engagement better than any incentive program.

Best Distribution Channels for Surveys

In-App Surveys

Trigger based on user behavior for maximum relevance.

An InMoment case study shows Entelo doubled its response rate by using in-app NPS surveys instead of email, jumping from 24% to around 50%. Screeb data indicates in-app surveys average a 12% response rate compared to just 8% for email surveys.

Completion rates are higher because context is already established.

Perfect for post-purchase survey questions and feature feedback.

Email Surveys

The average response rate for email distribution hovers around 24.8% according to FluidSurveys data. SurveySparrow’s 2024 analysis shows rates between 15% and 25%, while Delighted reports its users average around 6%.

Use pre-notification emails to increase participation. Segment lists for better targeting.

Pair with WordPress survey plugins for seamless integration with your website.

Web Intercept Surveys

Capture feedback during website visits using targeted triggers.

Refiner reports passive feedback buttons get just 3-5% response rates from anonymous visitors. However, web surveys shown to logged-in users can reach 60-70% response rates.

Set engagement thresholds like time on site, scroll depth, or pages visited to target interested visitors only.
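
One way to express such a trigger is to count how many engagement signals a visit has passed. The sketch below is illustrative only: the `Visit` fields, the threshold values (30 seconds, 50% scroll, 2 pages), and the "two of three signals" rule are assumptions made for this example, not recommendations from the sources cited here.

```python
from dataclasses import dataclass


@dataclass
class Visit:
    seconds_on_site: float
    scroll_depth: float  # fraction of the page scrolled, 0.0 to 1.0
    pages_viewed: int


def should_show_survey(visit: Visit, min_seconds: float = 30.0,
                       min_scroll: float = 0.5, min_pages: int = 2,
                       required_signals: int = 2) -> bool:
    """Show the survey once enough engagement signals pass their thresholds."""
    signals = [
        visit.seconds_on_site >= min_seconds,
        visit.scroll_depth >= min_scroll,
        visit.pages_viewed >= min_pages,
    ]
    return sum(signals) >= required_signals


print(should_show_survey(Visit(45, 0.8, 1)))  # prints True (time and scroll pass)
print(should_show_survey(Visit(10, 0.2, 3)))  # prints False (only pages pass)
```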

Works well for website feedback survey questions and usability research.

SMS Surveys

The 2024 Mobile Engagement Report shows SMS surveys achieve 45-60% response rates. Text messages have a 98% open rate according to Retently data.

Best for one or two questions maximum. Time-sensitive feedback only.

Keep questions simple since typing on mobile frustrates respondents quickly.

| Channel | Average Response Rate |
|---|---|
| In-person | 57-85% |
| SMS | 45-60% |
| In-app | 12-50% |
| Email | 6-25% |
| Web intercept (anonymous) | 3-5% |

How to Measure Survey Success

Response Rate

Number of completed surveys divided by total sent, expressed as a percentage.

Acceptable rates range from 5% to 30% depending on audience relationship, according to Delighted’s 2024 analysis. Rates above 50% are considered excellent.

Internal employee surveys should hit 60%+. Cold outreach might struggle past 10%.

Completion Rate

Percentage of starters who actually finish the entire survey.

Survicate research shows surveys with 1-3 questions achieve 83.34% completion, while 15+ questions drop to just 41.94%.

Low completion with high starts indicates internal survey problems, usually length or confusing questions.

Track this metric to identify where efforts to improve your form abandonment rate should focus.
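
Response rate and completion rate are easy to mix up because they share a numerator. The short sketch below computes both from three counts; the function names and the sample figures are hypothetical.

```python
def response_rate(completed: int, sent: int) -> float:
    """Completed surveys as a percentage of invitations sent."""
    if sent <= 0:
        raise ValueError("sent must be positive")
    return 100 * completed / sent


def completion_rate(completed: int, started: int) -> float:
    """Completed surveys as a percentage of surveys started."""
    if started <= 0:
        raise ValueError("started must be positive")
    return 100 * completed / started


# Hypothetical campaign: 2,000 invitations, 520 starts, 390 completions.
print(response_rate(390, 2000))   # prints 19.5
print(completion_rate(390, 520))  # prints 75.0
```

In this example the invitation is the weaker link: three out of four people who start the survey finish it, but fewer than one in five invitees completes it.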

Average Completion Time

Tracks how long respondents spend answering.

SurveyMonkey data shows respondents average 75 seconds on question one but drop to just 19 seconds by questions 26-30. This decline signals satisficing behavior and data quality degradation.

If average time drops below expected thresholds, your later questions produce unreliable data.
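
A simple way to define an "expected threshold" is to compare each later question against a baseline taken from the opening questions. The sketch below assumes per-question answer times in seconds are available; the three-question baseline and the 0.5 ratio are arbitrary illustrative values, and `flag_rushed_questions` is a hypothetical name.

```python
from statistics import median
from typing import Dict, List


def flag_rushed_questions(seconds_by_question: Dict[str, List[float]],
                          baseline_questions: int = 3,
                          ratio: float = 0.5) -> List[str]:
    """Return keys of questions whose median answer time falls below
    `ratio` times the median of the first `baseline_questions` questions.

    Relies on the dictionary preserving survey order (Python 3.7+).
    """
    keys = list(seconds_by_question)
    medians = {k: median(seconds_by_question[k]) for k in keys}
    baseline = median(medians[k] for k in keys[:baseline_questions])
    return [k for k in keys[baseline_questions:] if medians[k] < ratio * baseline]


# Hypothetical timings (seconds) from three respondents on five questions.
timings = {
    "q1": [70, 80, 75],
    "q2": [40, 50, 45],
    "q3": [38, 42, 40],
    "q4": [30, 35, 28],
    "q5": [15, 20, 18],
}
print(flag_rushed_questions(timings))  # prints ['q5']
```

Questions differ in how long they legitimately take, so a flag is a prompt to inspect the answers, not proof that they are unreliable.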

Drop-Off Points

Identify specific questions where abandonment spikes occur.

SurveyMonkey research found the sharpest increase in drop-off occurs with each additional question up to 15 questions. After that, respondents willing to continue show more tolerance.

These problem questions need revision, simplification, or removal entirely.

Heat mapping your survey funnel reveals exactly where respondent patience runs out.
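
If your survey tool exports how many respondents reached each question, the drop-off at every step is a one-line ratio. The following sketch assumes such a list of counts in survey order; the function names and sample numbers are hypothetical.

```python
from typing import List, Tuple


def drop_off_by_question(reached: List[int]) -> List[Tuple[int, float]]:
    """Given how many respondents reached each question (in order), return
    (question_number, share of those respondents lost before the next question)."""
    rates = []
    for i in range(len(reached) - 1):
        current, following = reached[i], reached[i + 1]
        lost = (current - following) / current if current else 0.0
        rates.append((i + 1, lost))
    return rates


def worst_drop_off(reached: List[int]) -> Tuple[int, float]:
    """Return the question with the highest drop-off share."""
    return max(drop_off_by_question(reached), key=lambda pair: pair[1])


# Hypothetical funnel: respondents who reached questions 1 through 5.
reached = [1000, 950, 900, 600, 580]
question, rate = worst_drop_off(reached)
print(f"Q{question}: {rate:.0%}")  # prints Q3: 33%
```

Here a third of the people who reached question 3 never made it to question 4, which marks question 3 as the first candidate for revision.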

Common Mistakes That Cause Low Response Rates

Avoid these errors that tank your feedback collection efforts:

- Asking too many demographic questions upfront before delivering value

- Using jargon or technical language respondents don’t understand

- Requiring answers for every single question with no skip options

- Not testing the survey internally before launch

- Sending from generic or unfamiliar email addresses that trigger spam filters

- Failing to explain data privacy and anonymity protections clearly

Each mistake compounds the others.

A long survey with confusing questions sent from an unknown sender at the wrong time? Zero chance of meaningful response rates.

Your form validation should catch errors without frustrating users, and your GDPR-compliant forms should reassure participants about data handling.

Following proper form UX design principles prevents most of these issues before they start.

When analyzing survey data, low response rates make statistical significance nearly impossible to achieve.

FAQ on How To Avoid Survey Fatigue

What is survey fatigue?

Survey fatigue is the loss of motivation respondents experience when completing questionnaires. It causes participants to abandon surveys, rush through answers, or skip questions entirely. This phenomenon reduces completion rates and degrades data quality across all feedback collection efforts.

How long should a survey be to avoid fatigue?

Keep surveys between 5-7 minutes, roughly 10-15 questions. Research shows completion rates drop significantly after 10 minutes. Kantar data confirms surveys exceeding 25 minutes lose three times more respondents than those under five minutes.

What causes low survey response rates?

Common causes include over-surveying audiences, poorly designed questions, wrong timing, excessive length, and lack of transparency about purpose. Sending from unfamiliar email addresses and requiring answers for every question also tank response rates significantly.

How can I increase survey completion rates?

Use skip logic to route past irrelevant questions, keep surveys under 10 minutes, send at optimal times (mid-morning, 9 AM to 12 PM), offer meaningful incentives, and personalize invitations. Mobile-friendly design matters since SurveySparrow reports 60% of survey responses now come from mobile devices.

What are signs of respondent fatigue?

Watch for straight-line responding, increased “don’t know” answers, higher abandonment in later sections, declining time per question, and blank open-ended responses. These patterns indicate participants are satisficing rather than providing thoughtful feedback.

Which survey question types reduce fatigue?

Rating scales, binary questions (yes/no), and multiple choice questions create the lowest cognitive load. Limit open-ended questions to one or two per survey. The Likert scale works well for measuring agreement without exhausting respondents.

How often should I survey my audience?

Space requests based on relationship depth. Quarterly (every 90 days) suits most relationship surveys, and Pointerpro recommends waiting at least two months between invitations to the same recipient. Over-surveying trains people to ignore your brand. Back-to-back surveys cause the biggest response rate drops according to research.

Do incentives improve survey response rates?

Yes. Research shows compensation increases completion from 54% to 71%. Gift cards, discounts, and prize draws all work effectively. Match incentives to audience preferences and clearly state rewards in subject lines for maximum impact.

What is the best time to send surveys?

Deploy immediately after customer interactions while the experience stays fresh. For scheduled sends, mid-morning (9 AM to 12 PM) is the peak window, and SurveyMonkey data shows Monday surveys receive 10% more responses than average while Friday surveys receive 13% fewer. Avoid Friday afternoons, weekends, and holidays.

How do I measure survey success?

Track response rate (completed divided by sent), completion rate (finishers divided by starters), average completion time, and drop-off points. Low completion with high starts indicates internal survey problems requiring question revision or length reduction.

Conclusion

Knowing how to avoid survey fatigue and increase response rates separates useful feedback from worthless data.

The fundamentals are straightforward: keep questionnaires under 10 minutes, use rating scales and multiple choice questions over open-ended formats, and send at optimal times.

Respondent engagement depends on respecting your audience’s time.

Personalized invitations, meaningful incentives, and closing the feedback loop build long-term participation habits.

Track your completion rate and drop-off points religiously. These metrics reveal exactly where your survey design fails.

Whether you’re measuring Net Promoter Score, running employee satisfaction surveys, or collecting Voice of the Customer data, the same principles apply.

Shorter surveys. Smarter timing. Clear purpose.

Your response rates will follow.