

Most companies collect customer feedback. Few ask the right questions.

The difference between useful data and noise comes down to how you structure your customer service survey questions.

Poor questions produce misleading metrics. Good questions reveal exactly where your support team excels and where customers feel frustrated.

This guide covers the question types that actually work: CSAT, NPS, Customer Effort Score, and open-ended formats.

You’ll learn which questions to ask, when to send them, and how to turn responses into measurable improvements.

Whether you’re building your first survey or refining an existing feedback survey, these frameworks apply.

What is a Customer Service Survey Question

A customer service survey question is a structured inquiry that collects feedback about a customer’s experience with a support team, product, or service process.

Organizations use these questions to measure customer satisfaction, identify service gaps, and track support quality benchmarks.

The feedback gathered through these surveys informs strategic decisions about training, processes, and resource allocation.

Companies like Zendesk, Qualtrics, and SurveyMonkey have built entire platforms around this concept.

Customer Service Survey Questions

Overall Experience

Overall Satisfaction Rating

Question: How satisfied were you with your recent customer service interaction?

Type: Multiple Choice (1-5 scale from “Very dissatisfied” to “Very satisfied”)

Purpose: Measures general customer sentiment and serves as a baseline metric.

When to Ask: Immediately after service interaction.

Issue Resolution

Question: Did we resolve your issue completely?

Type: Yes/No with optional comment field

Purpose: Tracks resolution rate and identifies recurring issues; paired with the number of contacts, it shows first contact resolution rate.

When to Ask: After service completion or case closure.

Ease of Service

Question: How easy was it to get the help you needed?

Type: Multiple Choice (1-5 scale from “Very difficult” to “Very easy”)

Purpose: Measures customer effort (the basis of Customer Effort Score) and identifies friction points.

When to Ask: After service completion.

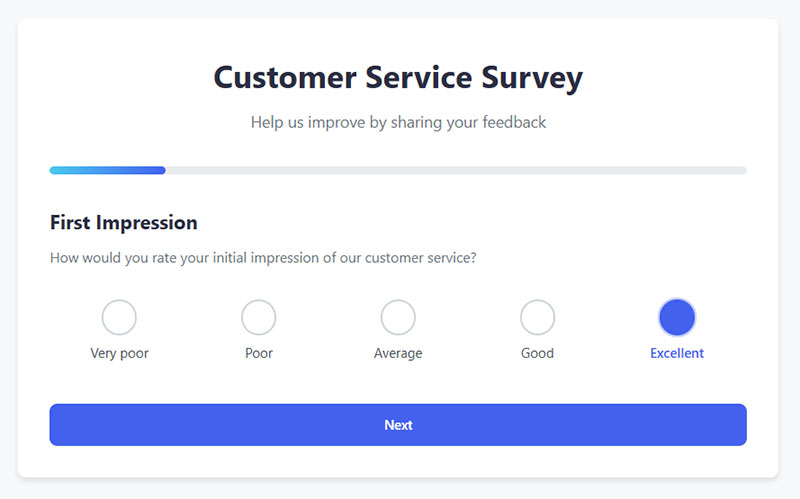

First Impression

Question: How would you rate your initial impression of our customer service?

Type: Multiple Choice (1-5 scale from “Very poor” to “Excellent”)

Purpose: Captures emotional response to first touch point.

When to Ask: Early in the survey.

Expectation Alignment

Question: Did our service meet your expectations?

Type: Multiple Choice (1-5 scale from “Far below expectations” to “Far exceeded expectations”)

Purpose: Measures gap between customer expectations and delivery.

When to Ask: After service completion.

Value Assessment

Question: Do you feel the service you received was worth your time?

Type: Multiple Choice (1-5 scale from “Not at all valuable” to “Extremely valuable”)

Purpose: Evaluates perceived value of service interaction.

When to Ask: After issue resolution.

Agent Performance

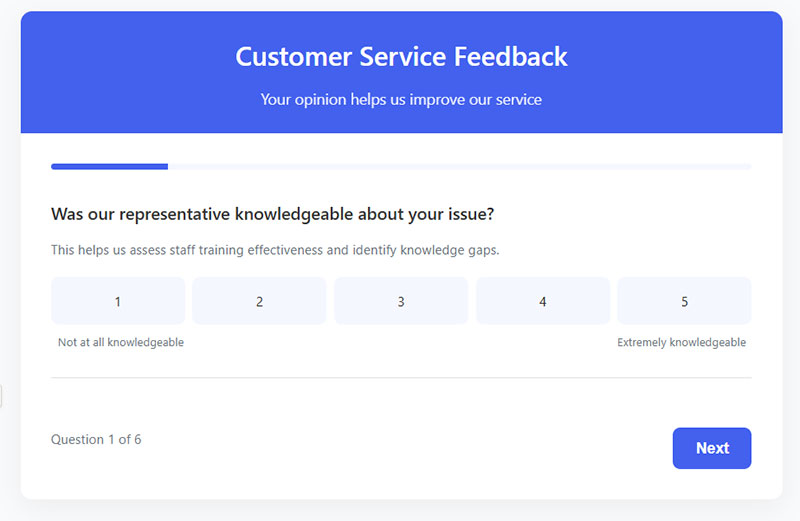

Agent Knowledge

Question: Was our representative knowledgeable about your issue?

Type: Multiple Choice (1-5 scale from “Not at all knowledgeable” to “Extremely knowledgeable”)

Purpose: Assesses staff training effectiveness and knowledge gaps.

When to Ask: After customer-agent interaction.

Communication Clarity

Question: Did the representative communicate clearly?

Type: Multiple Choice (1-5 scale from “Not at all clear” to “Extremely clear”)

Purpose: Evaluates agent communication skills and identifies training needs.

When to Ask: After verbal or written communication.

Professionalism Rating

Question: How would you rate the politeness and professionalism of our staff?

Type: Multiple Choice (1-5 scale from “Very unprofessional” to “Very professional”)

Purpose: Measures customer perception of staff conduct and service quality.

When to Ask: After customer-agent interaction.

Problem-Solving Skills

Question: How effectively did our representative solve your problem?

Type: Multiple Choice (1-5 scale from “Not at all effective” to “Very effective”)

Purpose: Assesses critical thinking and resolution capabilities.

When to Ask: After issue resolution.

Personalization Level

Question: Did you feel the representative treated you as an individual rather than just another customer?

Type: Multiple Choice (1-5 scale from “Not at all personalized” to “Highly personalized”)

Purpose: Measures perception of personalized service.

When to Ask: After customer-agent interaction.

Empathy Rating

Question: Did the representative seem to understand and care about your situation?

Type: Multiple Choice (1-5 scale from “Not at all empathetic” to “Very empathetic”)

Purpose: Evaluates emotional intelligence and customer connection.

When to Ask: After service interaction, particularly for complaint handling.

Timeliness

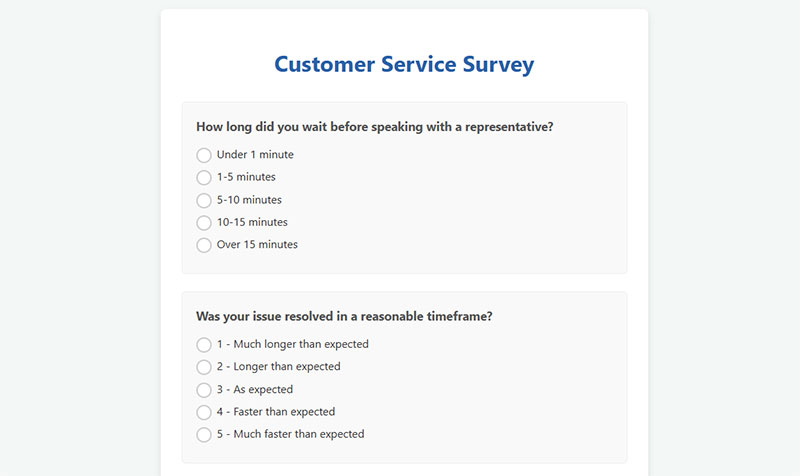

Wait Time Assessment

Question: How long did you wait before speaking with a representative?

Type: Multiple Choice (Time ranges: “Under 1 minute”, “1-5 minutes”, “5-10 minutes”, “10-15 minutes”, “Over 15 minutes”)

Purpose: Identifies staffing needs and peak time issues.

When to Ask: After initial contact and queuing.

Resolution Timeframe

Question: Was your issue resolved in a reasonable timeframe?

Type: Multiple Choice (1-5 scale from “Much longer than expected” to “Much faster than expected”)

Purpose: Measures efficiency against customer expectations.

When to Ask: After case resolution.

Response Time Satisfaction

Question: How satisfied were you with our response time to your initial inquiry?

Type: Multiple Choice (1-5 scale from “Very dissatisfied” to “Very satisfied”)

Purpose: Evaluates perception of initial responsiveness.

When to Ask: After first response received.

Follow-up Timeliness

Question: If follow-up was required, how promptly did we get back to you?

Type: Multiple Choice (1-5 scale from “Very slow” to “Very prompt”) with N/A option

Purpose: Measures consistency in follow-up communications.

When to Ask: For multi-step resolutions.

Urgency Recognition

Question: How well did we recognize the urgency of your situation?

Type: Multiple Choice (1-5 scale from “Did not recognize urgency at all” to “Perfectly recognized urgency”)

Purpose: Assesses prioritization effectiveness and customer perception.

When to Ask: After urgent issues or time-sensitive matters.

Communication

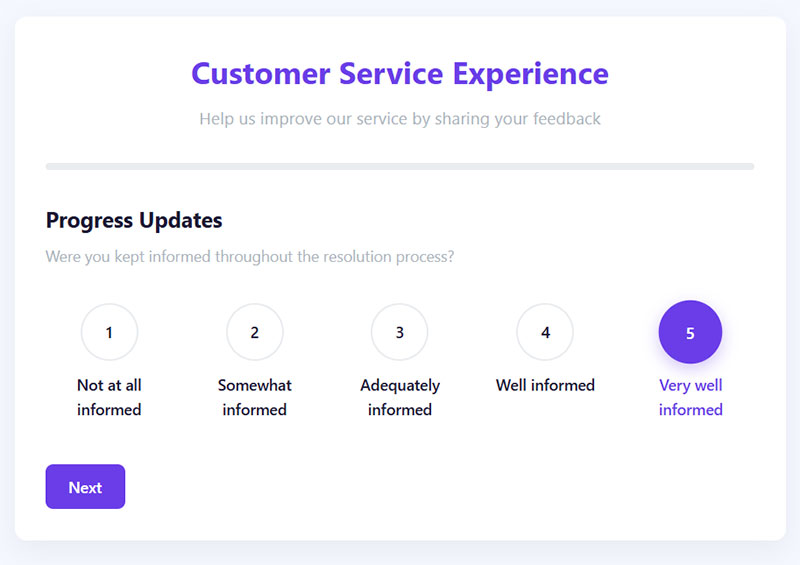

Progress Updates

Question: Were you kept informed throughout the resolution process?

Type: Multiple Choice (1-5 scale from “Not at all informed” to “Very well informed”)

Purpose: Evaluates communication frequency and transparency.

When to Ask: For multi-step or extended service interactions.

Information Clarity

Question: How satisfied were you with the clarity of information provided?

Type: Multiple Choice (1-5 scale from “Very unclear” to “Very clear”)

Purpose: Assesses quality of explanations and instructions.

When to Ask: After receiving information or instructions.

Communication Frequency

Question: How would you rate the frequency of our communications?

Type: Multiple Choice (1-5 scale from “Too infrequent” to “Too frequent” with “Just right” in the middle)

Purpose: Evaluates communication cadence and customer preferences.

When to Ask: For interactions with multiple touchpoints.

Channel Appropriateness

Question: Was the communication channel used (email, phone, text) appropriate for your needs?

Type: Multiple Choice (Yes/No with preference option)

Purpose: Identifies preferred communication channels.

When to Ask: After case resolution.

Proactive Communication

Question: Did we anticipate your questions and provide information before you had to ask?

Type: Multiple Choice (1-5 scale from “Not at all proactive” to “Extremely proactive”)

Purpose: Measures predictive service quality and proactive problem-solving.

When to Ask: After service completion.

Product/Service Feedback

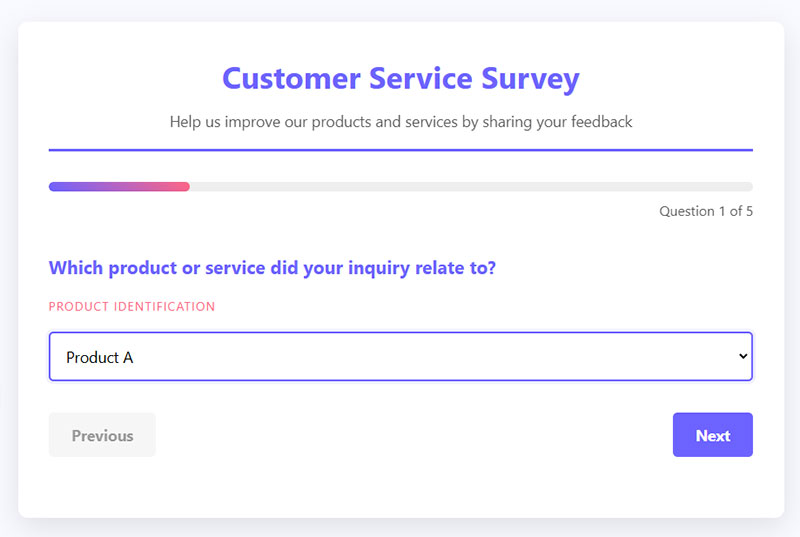

Product Identification

Question: Which product or service did your inquiry relate to?

Type: Dropdown menu or Multiple Choice (list of products/services)

Purpose: Segments feedback by product line and identifies problematic offerings.

When to Ask: Early in the survey.

Product Improvement

Question: What improvements would make this product/service better?

Type: Open-ended text field

Purpose: Gathers specific product enhancement ideas.

When to Ask: After product-specific questions.

Feature Satisfaction

Question: How satisfied are you with the features of the product/service you purchased?

Type: Multiple Choice (1-5 scale from “Very dissatisfied” to “Very satisfied”)

Purpose: Evaluates product feature quality and alignment with expectations.

When to Ask: After purchase and initial use.

Product Usage Challenges

Question: What challenges, if any, have you faced while using our product/service?

Type: Open-ended text field

Purpose: Identifies friction points in product usage.

When to Ask: After customer has used the product.

Value for Money

Question: How would you rate the value for money of our product/service?

Type: Multiple Choice (1-5 scale from “Poor value” to “Excellent value”)

Purpose: Assesses price-to-value perception.

When to Ask: After purchase and usage experience.

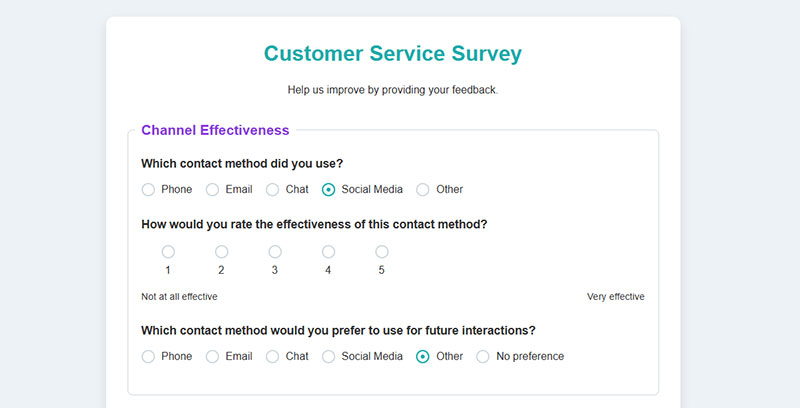

Channel Effectiveness

Contact Method

Question: Which contact method did you use (phone, email, chat, etc.)?

Type: Multiple Choice (list of available contact methods)

Purpose: Tracks channel usage and segments feedback by contact method.

When to Ask: Early in the survey.

Channel Satisfaction

Question: How would you rate the effectiveness of this contact method?

Type: Multiple Choice (1-5 scale from “Not at all effective” to “Very effective”)

Purpose: Compares channel performance and identifies improvement areas.

When to Ask: After channel-specific experience.

Channel Preference

Question: Which contact method would you prefer to use for future interactions?

Type: Multiple Choice (list of available contact methods)

Purpose: Identifies channel preferences for future service design.

When to Ask: After rating current channel experience.

Website/App Usability

Question: If you used our website or app, how easy was it to navigate?

Type: Multiple Choice (1-5 scale from “Very difficult” to “Very easy”) with N/A option

Purpose: Evaluates digital interface usability.

When to Ask: After digital interactions.

Self-Service Effectiveness

Question: If you attempted to use self-service options before contacting us, how helpful were they?

Type: Multiple Choice (1-5 scale from “Not at all helpful” to “Very helpful”) with N/A option

Purpose: Measures self-service effectiveness and identifies improvement areas.

When to Ask: After service completion.

Loyalty & Recommendation

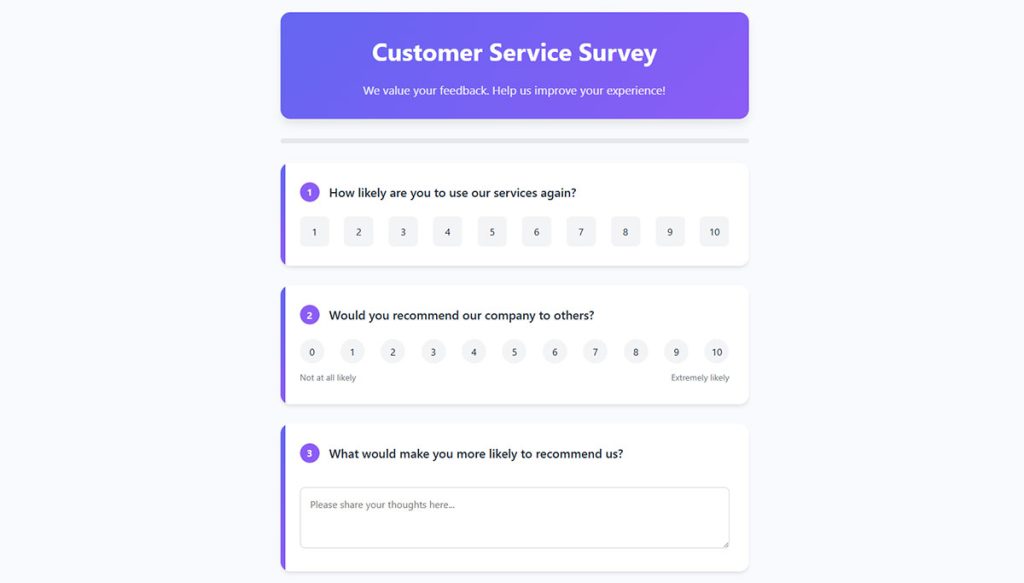

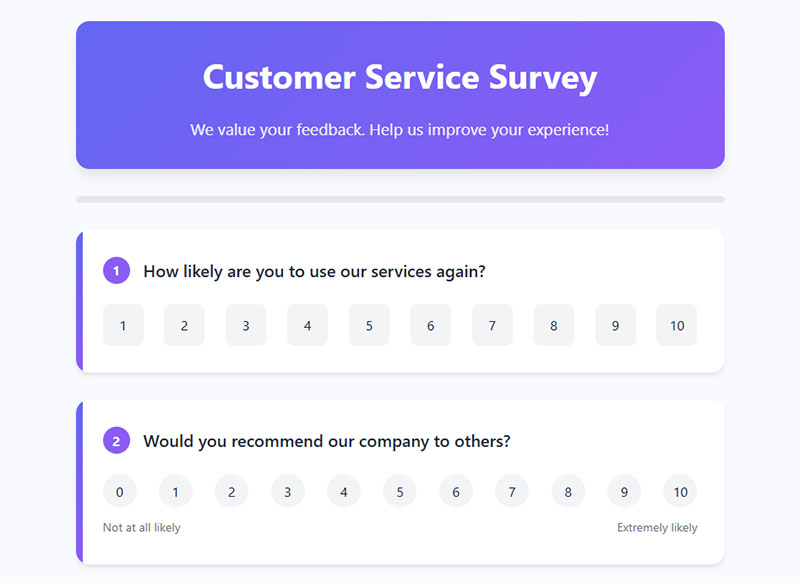

Repeat Business Likelihood

Question: How likely are you to use our services again?

Type: Multiple Choice (1-5 or 1-10 scale; use whichever scale the rest of the survey uses)

Purpose: Predicts customer retention and identifies at-risk customers.

When to Ask: End of survey.

Net Promoter Score

Question: How likely are you to recommend our company to a friend or colleague?

Type: Multiple Choice (0-10 scale from “Not at all likely” to “Extremely likely”)

Purpose: Calculates NPS and identifies promoters, passives, and detractors.

When to Ask: End of survey.

Recommendation Drivers

Question: What would make you more likely to recommend us?

Type: Open-ended text field

Purpose: Identifies key loyalty drivers and improvement opportunities.

When to Ask: After NPS question, especially for scores below 9.
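Many survey tools support conditional display logic, so the follow-up can depend on the NPS answer. The sketch below shows that branching in Python. The routing rule is one possible design, not a requirement: everyone scoring below 9 gets the Recommendation Drivers question, while promoters get the Most Valuable Aspect question from later in this guide. `nps_follow_up` is a hypothetical helper name.

```python
# Follow-up question texts taken from this guide.
RECOMMEND_MORE = "What would make you more likely to recommend us?"
MOST_VALUABLE = "What was the most valuable part of your customer service experience?"


def nps_follow_up(rating: int) -> str:
    """Pick the open-ended follow-up to show after a 0-10 NPS answer.

    Hypothetical routing rule: scores below 9 (passives and detractors)
    are asked what would raise the score; promoters (9-10) are asked
    what worked well.
    """
    if not 0 <= rating <= 10:
        raise ValueError("NPS ratings run from 0 to 10")
    if rating < 9:
        return RECOMMEND_MORE
    return MOST_VALUABLE


print(nps_follow_up(6))   # detractor -> recommendation-drivers question
print(nps_follow_up(10))  # promoter -> most-valuable-aspect question
```

Asking promoters a different question keeps the survey short for them while still capturing which strengths to protect.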

Competitive Comparison

Question: How would you rate our service compared to our competitors?

Type: Multiple Choice (1-5 scale from “Much worse” to “Much better”) with “Haven’t used competitors” option

Purpose: Benchmarks service against competition.

When to Ask: After service completion.

Brand Perception

Question: Has your perception of our brand changed after this service experience?

Type: Multiple Choice (“Improved significantly,” “Improved somewhat,” “No change,” “Decreased somewhat,” “Decreased significantly”)

Purpose: Measures impact of service experience on brand perception.

When to Ask: End of survey.

Relationship Strength

Question: How would you describe your relationship with our company?

Type: Multiple Choice (“One-time customer,” “Occasional customer,” “Regular customer,” “Loyal advocate”)

Purpose: Segments customers by relationship type and loyalty level.

When to Ask: End of survey.

Open Feedback

Improvement Suggestion

Question: What one thing could we have done better?

Type: Open-ended text field

Purpose: Identifies priority improvement areas from customer perspective.

When to Ask: End of survey.

Additional Comments

Question: Is there anything else you’d like to share about your experience?

Type: Open-ended text field

Purpose: Captures unexpected feedback and gives customers a voice.

When to Ask: Final question of survey.

Unresolved Concerns

Question: Do you have any remaining concerns or questions we haven’t addressed?

Type: Open-ended text field with Yes/No option first

Purpose: Identifies loose ends and provides opportunity for additional support.

When to Ask: Before survey completion.

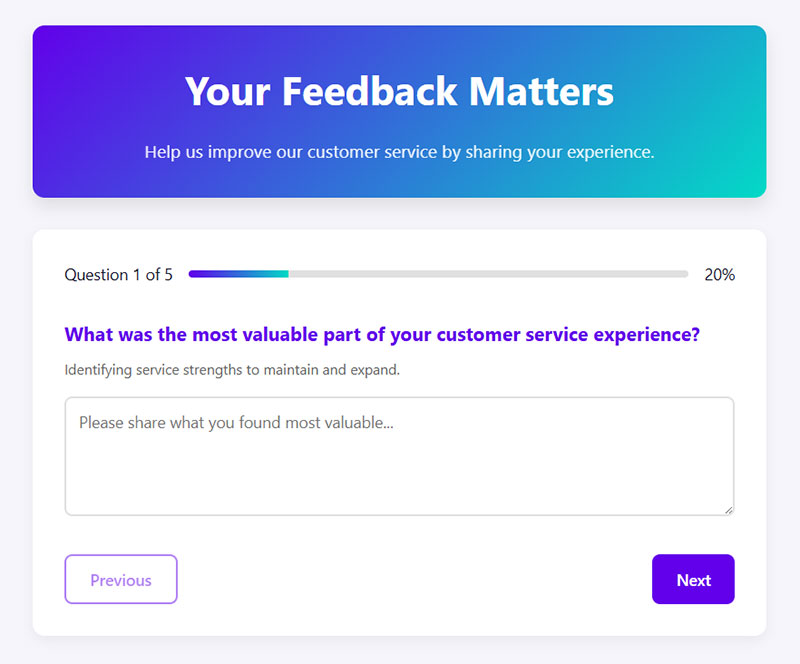

Most Valuable Aspect

Question: What was the most valuable part of your customer service experience?

Type: Open-ended text field

Purpose: Identifies service strengths to maintain and expand.

When to Ask: End of survey.

Specific Team/Department Feedback

Question: If you interacted with multiple teams or departments, which one provided the best service and why?

Type: Dropdown for department selection with open text field

Purpose: Identifies internal best practices and performance variations.

When to Ask: For complex service interactions involving multiple departments.

What are the Types of Customer Service Survey Questions

Different question formats serve different purposes. Choosing the right types of survey questions directly impacts data quality and response rates.

What is a Likert Scale Question

A Likert scale question asks respondents to rate agreement or satisfaction on a fixed scale, typically ranging from “Strongly Disagree” to “Strongly Agree.”

Most CSAT surveys use 5-point or 7-point scales. This format captures intensity of feeling, not just yes or no.

What is an Open-Ended Survey Question

Open-ended questions allow free-text responses without predefined answer options.

They reveal unexpected insights but require manual analysis or sentiment analysis tools to process at scale.

What is a Rating Scale Question

Rating scale questions ask customers to assign a numerical value to their experience, usually on a 0-10 or 1-10 scale.

The Net Promoter Score uses a 0-10 version of this format. Simple to answer; easy to benchmark across time periods.

What is a Yes-No Survey Question

Binary questions provide straightforward data points. “Was your issue resolved?” Yes or no.

Quick to complete but limited in nuance. Best used alongside other question types.

What is a Multiple Choice Survey Question

Multiple choice questions offer predefined response options. Useful for categorizing feedback into actionable buckets.

Always include an “Other” option with a text field to capture responses you didn’t anticipate.

What is a Semantic Differential Question

Semantic differential questions place two opposite adjectives at either end of a scale.

“How would you describe our support: Unhelpful _ Helpful.” Useful for capturing emotional perception that a standard satisfaction rating can miss.

What are the Categories of Customer Service Survey Questions

Survey questions group into categories based on what they measure. Each category targets a specific aspect of the customer experience.

What are Customer Satisfaction Score (CSAT) Questions

CSAT questions measure immediate satisfaction with a specific interaction or transaction.

“How satisfied are you with the support you received today?” Scored 1-5 or 1-7. Industry standard for post-interaction surveys.

What are Net Promoter Score (NPS) Questions

NPS measures customer loyalty through one question: “How likely are you to recommend us to a friend or colleague?”

Responses range 0-10. Fred Reichheld at Bain & Company developed this metric. Learn more about crafting effective NPS survey questions for your business.

What are Customer Effort Score (CES) Questions

CES tracks how much effort customers expend to get their issue resolved.

“How easy was it to get help today?” Low effort correlates with higher retention, according to research from CEB (now part of Gartner), which introduced the metric.
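Scoring conventions for CES vary: some teams report the mean effort rating, others the share of responses that land on the "easy" end of the scale. A minimal sketch of both, assuming the 1-5 "Very difficult" to "Very easy" scale used in the Ease of Service question; the function names and the 4-or-higher "easy" cutoff are illustrative assumptions.

```python
def ces_average(ratings: list[int]) -> float:
    """Mean effort rating on a 1-5 scale where 5 means "Very easy"."""
    if not ratings:
        raise ValueError("CES is undefined with zero responses")
    return sum(ratings) / len(ratings)


def ces_percent_easy(ratings: list[int], easy_min: int = 4) -> float:
    """Share of responses rated easy (easy_min or higher), as a percentage."""
    if not ratings:
        raise ValueError("CES is undefined with zero responses")
    easy = sum(1 for r in ratings if r >= easy_min)
    return 100 * easy / len(ratings)


responses = [5, 4, 2, 5, 3, 4]
print(round(ces_average(responses), 2))       # 3.83
print(round(ces_percent_easy(responses), 1))  # 66.7
```

Whichever version you pick, report it the same way every time so the trend line stays comparable.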

What are Agent Performance Questions

These questions evaluate individual support representatives on professionalism, knowledge, and communication skills.

“Did the agent understand your issue?” “Was the representative courteous?” Direct feedback for coaching and training.

What are Product and Service Quality Questions

Quality questions assess the actual product or service, separate from the support experience.

Consider exploring survey questions about product quality to build comprehensive feedback collection.

What are Response Time and Resolution Questions

Speed matters. These questions measure timeliness of initial response and total resolution time.

“How satisfied are you with the time it took to resolve your issue?” Critical for contact center metrics.

What Questions Should You Ask in a Customer Service Survey

The right questions depend on your goals. Below are question examples organized by measurement purpose.

What Questions Measure Overall Satisfaction

- “Overall, how satisfied are you with our customer service?”

- “How would you rate your experience with our support team?”

- “Did we meet your expectations today?”

What Questions Measure Support Team Performance

- “Was the representative knowledgeable about your issue?”

- “Did our team communicate clearly?”

- “How professional was the service you received?”

What Questions Measure Response Speed and Efficiency

- “How satisfied are you with our response time?”

- “Was your issue handled efficiently?”

- “How long did you wait before receiving assistance?”

What Questions Measure Problem Resolution

- “Was your issue completely resolved?”

- “How many contacts did it take to resolve your problem?”

- “Do you need to follow up on this issue?”

What Questions Identify Improvement Opportunities

- “What could we have done better?”

- “What one thing would improve our service?”

- “Is there anything else you’d like us to know?”

For related feedback collection, check out post-purchase survey questions and website feedback survey questions.

How to Write Effective Customer Service Survey Questions

Question design determines response quality. Follow these best practices for creating feedback forms to get actionable data.

How to Keep Survey Questions Clear and Concise

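One question, one concept. “How satisfied are you with our service and pricing?” is a double-barreled question: a customer who liked the service but not the price has no accurate answer. Split it into two questions.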

Use simple language. Avoid jargon. Read questions aloud to test clarity.

How to Avoid Leading Questions in Surveys

“How amazing was your experience?” leads the respondent. “How was your experience?” stays neutral.

Remove adjectives that imply a desired answer. Let customers form their own opinions.

How to Use Consistent Rating Scales

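Pick one scale and stick with it throughout. Switching between 5-point and 10-point scales confuses respondents. The exception is the NPS question, which keeps its standard 0-10 scale so results stay comparable with published benchmarks.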

Consistency also makes analyzing survey data much easier.

How to Balance Quantitative and Qualitative Questions

Quantitative questions provide benchmarks. Qualitative questions explain the “why” behind the numbers.

A common rule of thumb: 70% rating questions, 30% open-ended. Too many open-ended questions increase abandonment. Consider strategies for avoiding survey fatigue when designing longer surveys.

When to Send Customer Service Surveys

Timing affects response rates and data accuracy. Send too early and customers haven’t formed opinions; send too late and they’ve forgotten details.

When to Send Post-Interaction Surveys

Send within 24 hours of support ticket closure. The experience is fresh; emotions are still accessible.
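A helpdesk automation rule for this timing can be sketched as a single check. This assumes you have the ticket's closure timestamp. The function name and the 30-day per-customer cooldown (a guard against the over-surveying discussed in the FAQ) are hypothetical choices, not settings of any particular platform.

```python
from datetime import datetime, timedelta
from typing import Optional

SEND_WINDOW = timedelta(hours=24)  # send within 24 hours of ticket closure
COOLDOWN = timedelta(days=30)      # hypothetical: at most one survey per customer per 30 days


def should_send_survey(
    closed_at: Optional[datetime],
    now: datetime,
    last_surveyed_at: Optional[datetime] = None,
) -> bool:
    """Decide whether a post-interaction survey should go out right now."""
    if closed_at is None:
        return False  # ticket still open: nothing to rate yet
    if not closed_at <= now <= closed_at + SEND_WINDOW:
        return False  # outside the 24-hour window
    if last_surveyed_at is not None and now - last_surveyed_at < COOLDOWN:
        return False  # customer was surveyed recently
    return True


closed = datetime(2024, 5, 1, 10, 0)
print(should_send_survey(closed, closed + timedelta(hours=2)))   # True
print(should_send_survey(closed, closed + timedelta(hours=30)))  # False
```

Most helpdesk tools let you express a similar rule through their own trigger settings; the sketch only makes the decision logic explicit.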

Email surveys work well here. In-app surveys catch customers while they’re still engaged with your platform.

When to Send Transactional Surveys

Transactional surveys trigger after specific actions: purchase completion, subscription renewal, return processing.

Automate delivery through your CRM or a helpdesk platform such as Freshdesk, Intercom, or Salesforce Service Cloud.

When to Send Periodic Relationship Surveys

Relationship surveys measure overall sentiment, not single interactions. Quarterly or biannually works for most businesses.

Target active customers who’ve had multiple touchpoints. The American Customer Satisfaction Index uses this approach for industry benchmarking.
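Selecting that audience amounts to a filter over recent touchpoints. A minimal sketch, assuming each customer record carries the dates of past touchpoints (tickets, purchases, renewals). The `Customer` record, the 90-day lookback (roughly one quarter), and the two-touchpoint threshold are illustrative assumptions.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class Customer:
    # Hypothetical record; touchpoints could be tickets, purchases, or renewals.
    customer_id: str
    touchpoints: list[date] = field(default_factory=list)


def relationship_audience(
    customers: list[Customer],
    today: date,
    lookback_days: int = 90,   # roughly one quarter
    min_touchpoints: int = 2,  # "multiple touchpoints"
) -> list[str]:
    """IDs of customers with at least min_touchpoints in the lookback window."""
    since = today - timedelta(days=lookback_days)
    return [
        c.customer_id
        for c in customers
        if sum(1 for d in c.touchpoints if since <= d <= today) >= min_touchpoints
    ]


customers = [
    Customer("c1", [date(2024, 4, 2), date(2024, 5, 20)]),
    Customer("c2", [date(2024, 5, 28)]),
    Customer("c3", [date(2023, 11, 5), date(2023, 12, 1)]),
]
print(relationship_audience(customers, today=date(2024, 6, 30)))  # ['c1']
```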

Use a dedicated survey form that covers broader topics than transactional surveys.

How to Analyze Customer Service Survey Results

Collecting feedback means nothing without proper analysis. Raw data needs structure to drive decisions.

How to Calculate Customer Satisfaction Score

CSAT calculation: (Number of satisfied responses / Total responses) x 100.

“Satisfied” typically means ratings of 4-5 on a 5-point scale. Track this metric monthly to spot trends.
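The formula translates directly into code. A minimal sketch, assuming a 1-5 scale where 4 and 5 count as "satisfied"; `csat` is an illustrative function name, and the threshold is a parameter in case your scale differs.

```python
def csat(ratings: list[int], satisfied_min: int = 4) -> float:
    """CSAT = (satisfied responses / total responses) x 100.

    Assumes a 1-5 scale on which ratings of satisfied_min (default 4) or
    higher count as "satisfied".
    """
    if not ratings:
        raise ValueError("CSAT is undefined with zero responses")
    satisfied = sum(1 for r in ratings if r >= satisfied_min)
    return 100 * satisfied / len(ratings)


print(csat([5, 4, 3, 5, 2, 4, 5, 1]))  # 5 of 8 satisfied -> 62.5
```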

How to Calculate Net Promoter Score

NPS groups respondents into three categories based on their 0-10 rating:

- Promoters (9-10): Loyal customers who refer others

- Passives (7-8): Satisfied but not enthusiastic

- Detractors (0-6): Unhappy customers at risk of churning

Formula: % Promoters – % Detractors = NPS. Scores range from -100 to +100.
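The grouping and formula can be sketched in a few lines of Python. The function name is illustrative. Note that passives count toward the total but toward neither group, which is why a large share of passives pulls the score toward zero.

```python
def nps(scores: list[int]) -> float:
    """Net Promoter Score: % promoters (9-10) minus % detractors (0-6)."""
    if not scores:
        raise ValueError("NPS is undefined with zero responses")
    if any(not 0 <= s <= 10 for s in scores):
        raise ValueError("NPS ratings must be between 0 and 10")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    # Passives (7-8) stay in the denominator but are not added or subtracted.
    return 100 * (promoters - detractors) / len(scores)


print(nps([10, 9, 9, 8, 7, 6, 3, 10, 5, 9]))  # 50% - 30% -> 20.0
```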

Bain & Company benchmarks show average NPS varies wildly by industry. Software companies average around 30; airlines hover near 0.

How to Identify Trends in Survey Responses

Look for patterns across time periods, customer segments, and support channels.

Use customer sentiment analysis tools like Medallia or Hotjar to process open-ended responses at scale.

Cross-reference survey data with operational metrics: ticket volume, resolution time, channel usage.

Segment results by customer type, issue category, and agent. Aggregate scores hide problems; granular data reveals them.
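A minimal sketch of segmenting CSAT by any attribute, assuming each response record carries the agent, channel, month, and a 1-5 rating. The `Response` fields and the sample data are hypothetical; the same function handles agent, channel, or monthly trends depending on the `key` you pass.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable


@dataclass
class Response:
    # Hypothetical fields; map them to whatever your survey tool exports.
    agent: str
    channel: str
    month: str   # e.g. "2024-05"
    rating: int  # 1-5 CSAT rating


def csat_by_segment(
    responses: list[Response],
    key: Callable[[Response], str],
    satisfied_min: int = 4,
) -> dict[str, float]:
    """CSAT (% of ratings >= satisfied_min) for each segment produced by key()."""
    groups: dict[str, list[int]] = defaultdict(list)
    for r in responses:
        groups[key(r)].append(r.rating)
    return {
        segment: 100 * sum(1 for x in ratings if x >= satisfied_min) / len(ratings)
        for segment, ratings in groups.items()
    }


data = [
    Response("Ana", "chat", "2024-05", 5),
    Response("Ana", "email", "2024-05", 4),
    Response("Ben", "phone", "2024-05", 2),
    Response("Ben", "chat", "2024-06", 3),
    Response("Ana", "chat", "2024-06", 5),
]

overall = csat_by_segment(data, key=lambda r: "all")     # {'all': 60.0}
by_agent = csat_by_segment(data, key=lambda r: r.agent)  # {'Ana': 100.0, 'Ben': 0.0}
by_channel = csat_by_segment(data, key=lambda r: r.channel)
by_month = csat_by_segment(data, key=lambda r: r.month)
```

A 60% overall score looks middling; split by agent, one agent's customers are all satisfied and the other's none are. With real data, small segments swing widely, so check the response count behind each percentage.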

Build dashboards that update in real time. Forrester Research and Gartner both emphasize the shift toward continuous feedback loops over annual surveys.

Consider using WordPress survey plugins if you need quick implementation. Review feedback form templates for inspiration on structure and question flow.

Track completion rates alongside scores. Low response rates indicate survey design problems. Apply tips for improving form abandonment rate if respondents drop off mid-survey.
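Completion rate and per-question drop-off can be computed from simple counts. A minimal sketch, assuming your survey tool reports how many people opened the survey and how many answered each question in order, and treating an answer to the last question as a completion. The function names and counts are illustrative.

```python
def completion_rate(started: int, completed: int) -> float:
    """Percentage of respondents who opened the survey and finished it."""
    if started <= 0:
        raise ValueError("started must be positive")
    return 100 * completed / started


def step_drop_off(counts: list[int]) -> list[float]:
    """Percent of respondents lost between consecutive steps.

    counts[0] is the number who opened the survey; counts[i] (i >= 1) is the
    number who answered question i. Result[i - 1] is the loss going into question i.
    """
    return [100 * (prev - cur) / prev if prev else 0.0 for prev, cur in zip(counts, counts[1:])]


counts = [200, 190, 180, 120, 118]  # opened, then answered Q1..Q4
print(completion_rate(started=counts[0], completed=counts[-1]))  # 59.0
print([round(x, 1) for x in step_drop_off(counts)])              # [5.0, 5.3, 33.3, 1.7]
```

In this made-up example, Q3 loses a third of the remaining respondents, which points to that specific question rather than to survey length in general.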

FAQ on Customer Service Survey Questions

What is a customer service survey?

A customer service survey is a feedback collection tool that measures customer satisfaction with support interactions.

Companies use these surveys to track service quality, identify training needs, and benchmark performance against competitors.

How many questions should a customer service survey have?

Keep surveys between 3-7 questions. Shorter surveys get higher completion rates.

One CSAT question plus one open-ended follow-up often provides enough actionable data without causing respondent fatigue.

What is a good CSAT score?

CSAT scores above 80% indicate strong customer satisfaction. Industry averages vary significantly.

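The American Customer Satisfaction Index reports retail averaging around 77 and utilities around 73 on its 0-100 index. ACSI scores are calculated differently from a CSAT percentage, so treat them as directional context and compare against your specific sector.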

What is the difference between CSAT and NPS?

CSAT measures satisfaction with a specific interaction. NPS measures overall loyalty and likelihood to recommend.

Use CSAT for transactional feedback after support tickets. Use NPS for quarterly relationship health checks.

When should I send customer service surveys?

Send post-interaction surveys within 24 hours of ticket resolution. Memory fades quickly.

For relationship surveys, quarterly timing works for most B2B companies. B2C brands often survey after key touchpoints like purchases or renewals.

How do I increase survey response rates?

Keep surveys short. Personalize the invitation. Send at optimal times, typically mid-morning on weekdays.

Mobile-friendly form design matters since over 60% of emails open on phones. Offer incentives sparingly.

What are open-ended vs closed-ended survey questions?

Closed-ended questions provide predefined answers like rating scales or multiple choice. Open-ended questions allow free-text responses.

Closed questions give quantitative benchmarks. Open questions reveal the “why” behind scores.

How often should I survey customers?

Survey after every support interaction for transactional feedback. Limit relationship surveys to quarterly or biannually.

Over-surveying damages response rates and annoys customers. Quality over quantity.

What tools can I use to create customer service surveys?

Popular platforms include SurveyMonkey, Qualtrics, Typeform, and Google Forms. Helpdesk tools like Zendesk and Freshdesk have built-in survey features.

Choose based on integration needs, analytics depth, and budget constraints.

How do I analyze customer service survey results?

Calculate CSAT and NPS scores first. Segment data by agent, channel, and issue type.

Use sentiment analysis tools like Medallia for open-ended responses. Track trends monthly rather than reacting to individual scores.

Conclusion

Effective customer service survey questions transform scattered opinions into measurable insights. The questions you choose determine whether you collect actionable data or noise.

Start with clear goals. Match question types to those goals.

Use rating scales for benchmarking. Add open-ended questions to understand the reasoning behind scores.

Timing matters as much as question design. Post-interaction surveys capture fresh experiences; relationship surveys track long-term satisfaction trends.

Build a continuous feedback loop rather than treating surveys as one-time projects. Track customer satisfaction metrics monthly. Segment results by agent, channel, and issue type.

Voice of Customer programs succeed when insights drive real changes. Survey data sitting in dashboards helps no one.

Measure, analyze, improve, repeat.