Your analytics show what visitors do. They never explain why.

Website feedback survey questions bridge that gap by capturing visitor opinions, frustrations, and suggestions directly.

Bounce rates climb. Conversions stall. Without asking visitors what went wrong, you’re guessing at solutions.

This guide covers the question types that actually produce useful data: usability questions, satisfaction metrics like NPS and CSAT, content quality assessments, and design feedback.

You’ll find ready-to-use question examples for e-commerce sites, SaaS platforms, and content websites.

Plus placement strategies, timing triggers, and analysis methods that turn raw responses into site improvements.

What is a Website Feedback Survey Question

A website feedback survey question is a structured inquiry presented to visitors that collects opinions about their browsing experience, site usability, and content quality.

These questions appear through on-site pop-up surveys, embedded forms, or exit-intent triggers.

Tools like Hotjar, Qualtrics, and SurveyMonkey deliver these questions at specific touchpoints during the customer journey.

How Do Website Feedback Survey Questions Work

Survey triggers activate based on visitor behavior: time on page, scroll depth, or exit intent.

The visitor feedback collection process captures both quantitative metrics (ratings, scores) and qualitative data (open-ended responses).

What Information Do Website Feedback Surveys Collect

Surveys gather user experience feedback across navigation, content, design, and functionality; response data feeds into UX research methods and site performance evaluation.

Most feedback forms also capture visitor demographics and intent signals.

Website Feedback Survey Questions

General Experience

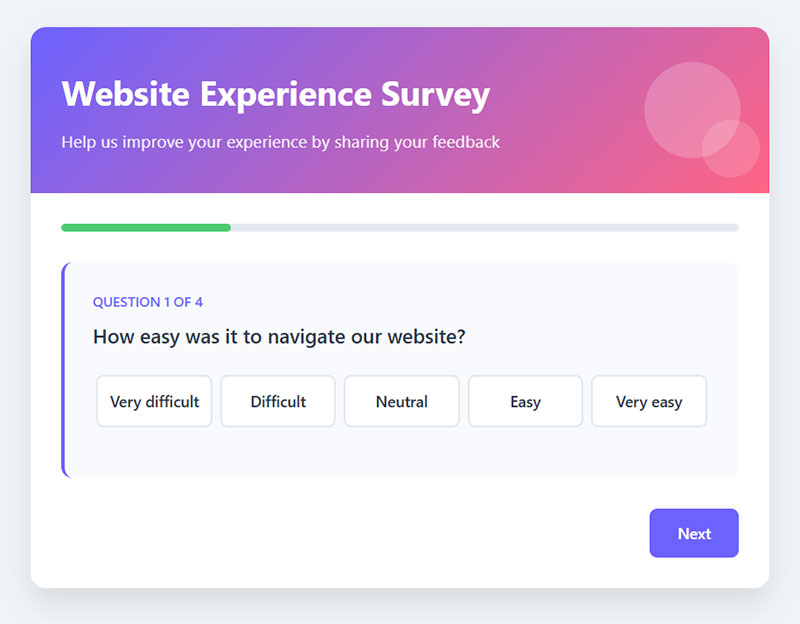

Website Navigation Ease

Question: How easy was it to navigate our website?

Type: Multiple Choice (1–5 scale from “Very difficult” to “Very easy”)

Purpose: Evaluates the intuitive design of menus, links, and overall site structure.

When to Ask: After users have explored multiple pages of your website.

Task Completion Success

Question: Did you find what you were looking for today?

Type: Yes/No with optional comment field

Purpose: Directly measures if the website fulfilled the user’s primary goal.

When to Ask: Immediately after a search or at exit intent.

Overall Design Rating

Question: How would you rate the overall design of our website?

Type: Star rating (1-5 stars)

Purpose: Captures general impression of visual appeal and professionalism.

When to Ask: After sufficient exposure to website design elements.

Task Identification

Question: What task were you trying to complete on our website?

Type: Open-ended text field

Purpose: Reveals user intent and most common use cases.

When to Ask: Early in the survey to provide context for later answers.

Visual Design & Layout

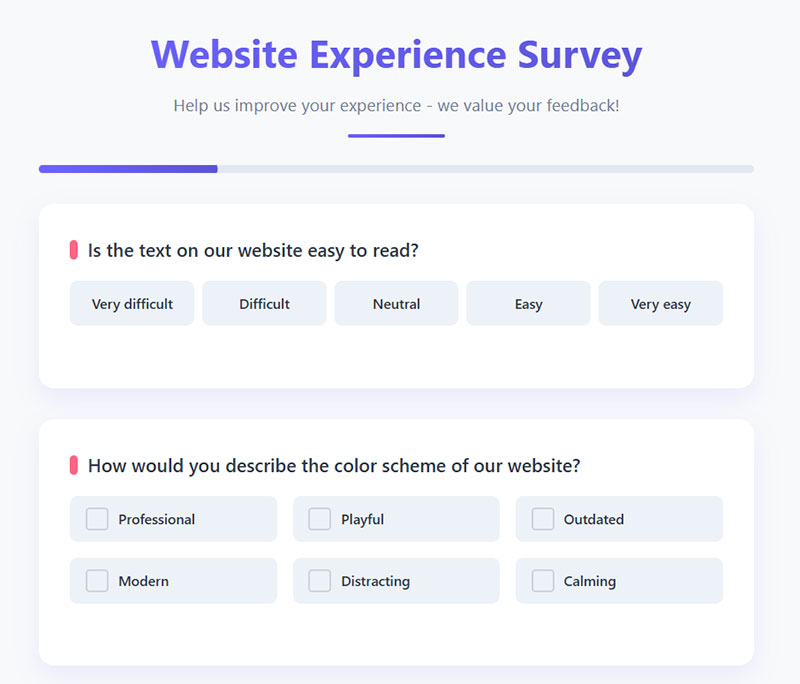

Text Readability

Question: Is the text on our website easy to read?

Type: Multiple Choice (1–5 scale from “Very difficult” to “Very easy”)

Purpose: Assesses font choices, sizes, contrast, and spacing.

When to Ask: After users have read content on multiple pages.

Color Scheme Impression

Question: How would you describe the color scheme of our website?

Type: Multiple select (Professional, Playful, Outdated, Modern, Distracting, Calming, etc.)

Purpose: Evaluates emotional response to brand colors and design choices.

When to Ask: After users have viewed multiple pages with consistent branding.

Distraction Elements

Question: Did any elements of the page distract you from your task?

Type: Yes/No with conditional follow-up asking which elements

Purpose: Identifies problematic ads, popups, or design elements.

When to Ask: After task completion attempts or abandoned sessions.

Standout Elements

Question: Which page elements stood out most to you?

Type: Multiple select (Header, Navigation menu, Images, Call-to-action buttons, Text content, Footer)

Purpose: Reveals what captures user attention for better design focus.

When to Ask: After sufficient website exposure.

Content Quality

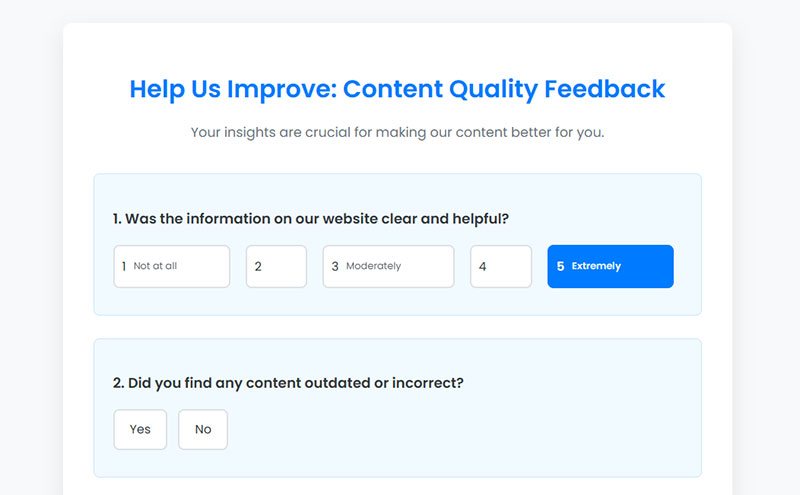

Information Clarity

Question: Was the information on our website clear and helpful?

Type: Multiple Choice (1–5 scale from “Not at all” to “Extremely”)

Purpose: Assesses content quality and communication effectiveness.

When to Ask: After users have consumed key content pages.

Content Accuracy

Question: Did you find any content outdated or incorrect?

Type: Yes/No with conditional follow-up for details

Purpose: Identifies specific content issues requiring updates.

When to Ask: After users have viewed informational pages.

Content Gaps

Question: What additional information would you like to see on our website?

Type: Open-ended text field

Purpose: Uncovers missing content that users expect or need.

When to Ask: After users have thoroughly explored relevant sections.

Content Relevance

Question: How relevant was our content to your needs?

Type: Multiple Choice (1–5 scale from “Not relevant” to “Extremely relevant”)

Purpose: Measures alignment between content strategy and user expectations.

When to Ask: After users have consumed multiple content pieces.

Functionality

Link Functionality

Question: Did all features and links work as expected?

Type: Yes/No with conditional follow-up for details

Purpose: Identifies broken links or malfunctioning elements.

When to Ask: After users have clicked multiple links or used interactive features.

Page Load Speed

Question: How fast did pages load for you?

Type: Multiple Choice (1–5 scale from “Very slow” to “Very fast”)

Purpose: Assesses perceived performance and technical issues.

When to Ask: After users have navigated to multiple pages.

Error Messages

Question: Did you encounter any error messages?

Type: Yes/No with conditional follow-up requesting details

Purpose: Identifies specific technical problems for immediate fixing.

When to Ask: At exit or after completion of multi-step processes.

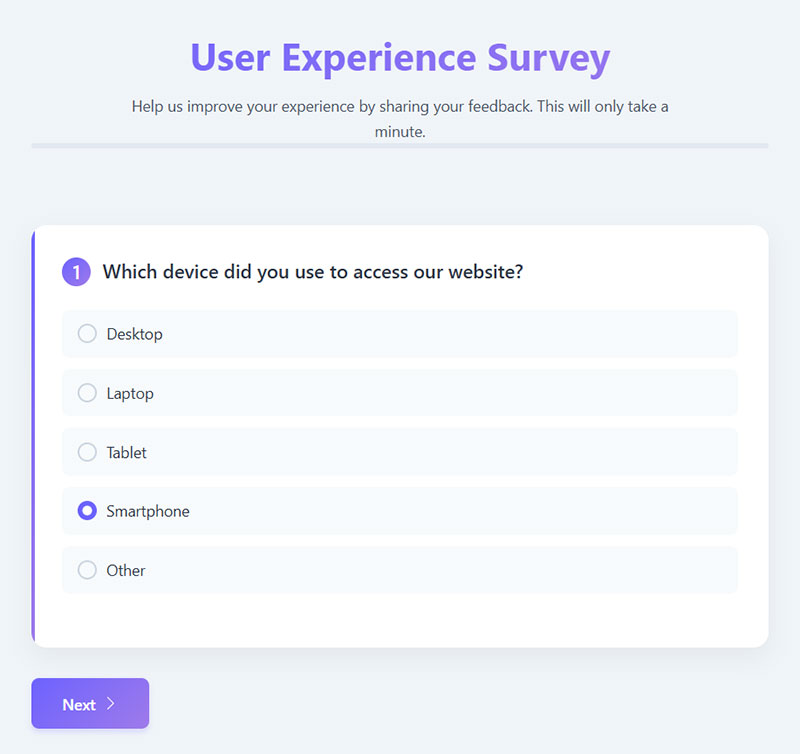

Device Usage

Question: Which device did you use to access our website?

Type: Multiple Choice (Desktop, Laptop, Tablet, Smartphone, Other)

Purpose: Correlates user experience with device type for responsive design improvements.

When to Ask: Early in survey to provide context for other answers.

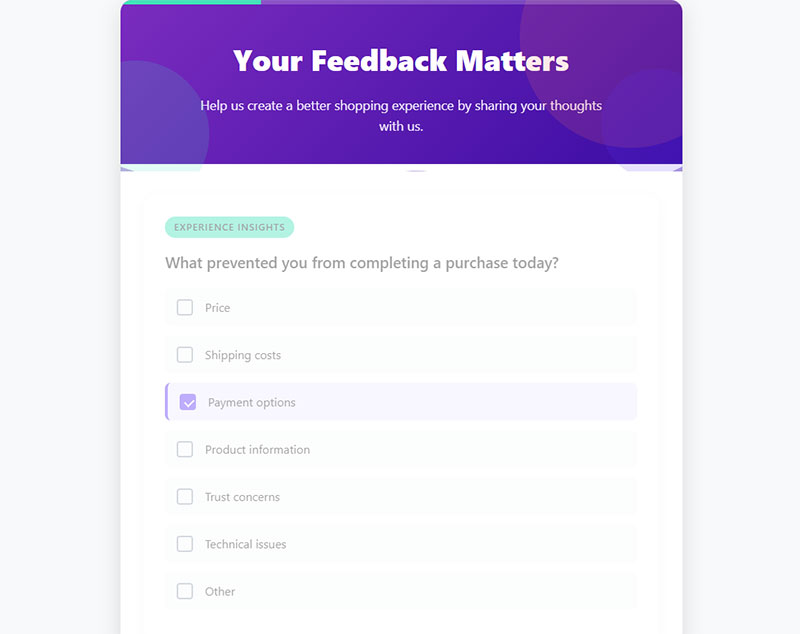

Conversion Elements

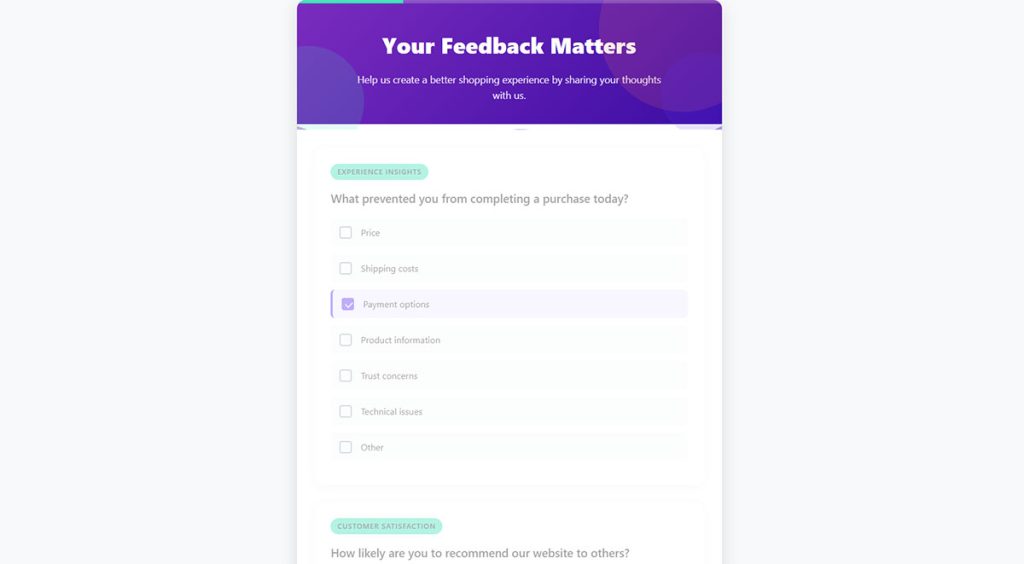

Purchase Barriers

Question: What prevented you from completing a purchase today?

Type: Multiple select (Price, Shipping costs, Payment options, Product information, Trust concerns, Technical issues, Other)

Purpose: Identifies specific obstacles in the conversion funnel.

When to Ask: After cart abandonment or exit intent.

Recommendation Likelihood

Question: How likely are you to recommend our website to others?

Type: NPS scale (0-10)

Purpose: Measures overall satisfaction and potential for word-of-mouth growth.

When to Ask: End of survey or after significant engagement.

Purchase Incentives

Question: What would make you more likely to buy from us?

Type: Multiple select (Lower prices, Free shipping, Better product images, More payment options, Better product descriptions, Faster checkout)

Purpose: Reveals high-impact conversion optimizations.

When to Ask: After cart abandonment or to non-converting visitors.

Pricing Clarity

Question: Was our pricing clearly displayed?

Type: Multiple Choice (1–5 scale from “Not clear at all” to “Very clear”)

Purpose: Assesses transparency and potential confusion points.

When to Ask: After users have viewed product pages or pricing information.

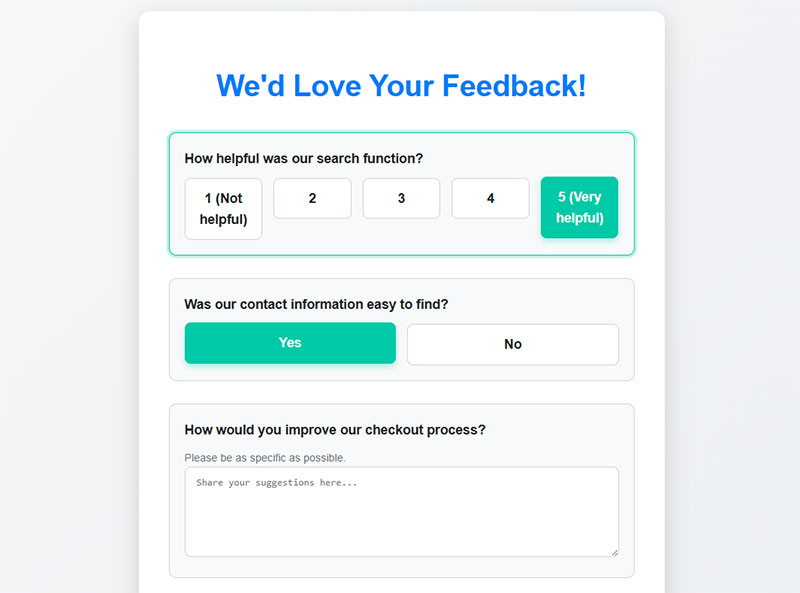

Specific Features

Search Function Utility

Question: How helpful was our search function?

Type: Multiple Choice (1–5 scale from “Not helpful” to “Very helpful”)

Purpose: Evaluates search algorithm effectiveness and result relevance.

When to Ask: After users have used the search function.

Contact Info Findability

Question: Was our contact information easy to find?

Type: Yes/No

Purpose: Assesses accessibility of critical trust-building information.

When to Ask: After users have had reason to look for contact details.

Checkout Process Improvement

Question: How would you improve our checkout process?

Type: Open-ended text field

Purpose: Collects specific suggestions for reducing cart abandonment.

When to Ask: After checkout completion or abandonment.

FAQ Effectiveness

Question: Did our FAQ section answer your questions?

Type: Multiple Choice (1–5 scale from “Not at all” to “Completely”)

Purpose: Evaluates help content comprehensiveness.

When to Ask: After users visit FAQ or help sections.

Open-ended Feedback

Top Improvement Areas

Question: What three things would you improve about our website?

Type: Open-ended text field

Purpose: Prioritizes improvement areas based on user perspective.

When to Ask: End of survey after all other questions.

Best Experience Element

Question: What was the best part of your experience on our site?

Type: Open-ended text field

Purpose: Identifies strengths to maintain and potentially expand.

When to Ask: After users have explored multiple site areas.

Competitor Comparison

Question: What websites do you prefer over ours and why?

Type: Open-ended text field

Purpose: Reveals competitive advantages and improvement opportunities.

When to Ask: End of survey after establishing rapport.

Additional Feedback

Question: Is there anything else you’d like to tell us?

Type: Open-ended text field

Purpose: Catches valuable insights not covered by other questions.

When to Ask: As final survey question.

What Are the Types of Website Feedback Survey Questions

Website surveys use four core question formats: open-ended, closed-ended, rating scale, and multiple choice.

Each type of survey question serves different data collection goals.

Mixing formats increases both response rates and insight quality.

What Are Open-Ended Website Feedback Questions

Open-ended questions let visitors respond in their own words without predefined options.

Examples:

- “What brought you to our website today?”

- “What could we do to improve your experience?”

- “What information were you looking for but couldn’t find?”

- “How would you describe our website to a colleague?”

These questions excel at capturing voice of customer insights and unexpected pain points.

What Are Closed-Ended Website Feedback Questions

Closed-ended questions offer predefined answer choices for quick, quantifiable responses.

Examples:

- “Did you find what you were looking for?” (Yes/No)

- “Were you able to complete your task today?” (Yes/No/Partially)

- “How did you hear about us?” (Search engine/Social media/Referral/Advertisement)

Best for tracking specific metrics over time and benchmarking against industry standards.

What Are Rating Scale Questions for Website Feedback

Rating scales measure satisfaction intensity using numeric or visual spectrums.

Common formats:

- Likert scale: 5-point agreement range (Strongly Disagree to Strongly Agree)

- Numeric scale: 1-10 satisfaction rating

- Emoji scale: Visual sentiment indicators

- Star rating: 1-5 star evaluation

The Net Promoter Score (NPS) uses a 0-10 scale to measure recommendation likelihood.

What Are Multiple Choice Website Survey Questions

Multiple choice questions present three or more answer options; visitors select one or several responses depending on the configuration.

Works well for categorizing visitor intent, identifying traffic sources, and segmenting user preferences.
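The single-select versus multi-select configuration can be modeled in a few lines. This is a minimal sketch, not any survey tool's actual API: the `MultipleChoiceQuestion` class, its `allow_multiple` flag, and the `is_valid` method are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class MultipleChoiceQuestion:
    """Hypothetical model of a multiple choice survey question."""
    prompt: str
    options: List[str]
    allow_multiple: bool = False  # False = pick one, True = pick several

    def is_valid(self, selected: List[str]) -> bool:
        """Check a visitor's selection against the question's configuration."""
        if not selected:
            return False
        if any(choice not in self.options for choice in selected):
            return False
        return self.allow_multiple or len(selected) == 1


# Single-select: a visitor uses one device per session.
device_question = MultipleChoiceQuestion(
    prompt="Which device did you use to access our website?",
    options=["Desktop", "Laptop", "Tablet", "Smartphone", "Other"],
)

# Multi-select: several barriers can apply at once.
barrier_question = MultipleChoiceQuestion(
    prompt="What prevented you from completing a purchase today?",
    options=["Price", "Shipping costs", "Payment options", "Other"],
    allow_multiple=True,
)
```

Validating answers against the configuration keeps single-select data clean enough to segment on later.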

What Website Feedback Questions Measure Usability

Usability questions assess how easily visitors navigate, find information, and complete tasks on your site.

The System Usability Scale (SUS) provides standardized website usability survey questions for benchmarking.

Nielsen Norman Group research links usability directly to conversion rates and bounce rates.

How Do You Ask About Website Navigation

Navigation feedback reveals how visitors move through your site architecture.

Questions to use:

- “How easy was it to find the menu options you needed?” (1-5 scale)

- “Did the site navigation meet your expectations?”

- “Which navigation element confused you most?”

How Do You Ask About Information Architecture

Information architecture questions measure content organization effectiveness.

Ask: “How quickly did you locate the information you needed?” and “Were related topics grouped logically?”

How Do You Measure Task Completion Through Surveys

Task completion surveys trigger after specific user actions: purchases, sign-ups, downloads.

The Customer Effort Score (CES) question format works best here: “How easy was it to complete your task today?”

What Website Feedback Questions Measure Content Quality

Content quality questions evaluate relevance, clarity, and comprehensiveness of your website copy, articles, and product descriptions.

Baymard Institute research connects content clarity to reduced form abandonment and higher engagement.

How Do You Ask About Content Relevance

Relevance questions determine if content matches visitor expectations and search intent.

Use: “Did this page answer your question?” and “How relevant was this content to what you were looking for?” (1-5 scale)

How Do You Ask About Content Clarity

Clarity questions identify confusing terminology, jargon, or poorly structured information.

Ask: “Was any part of this page difficult to understand?” with a follow-up text field for specifics.

How Do You Evaluate Content Comprehensiveness

Comprehensiveness surveys check if content fully addresses visitor needs without requiring additional searches.

“After reading this page, do you need more information on this topic?” (Yes/No/Partially)

What Website Feedback Questions Measure Design and Layout

Design feedback captures visitor reactions to visual elements, page structure, and overall aesthetic appeal.

Following form design principles when creating surveys increases completion rates by reducing cognitive load.

How Do You Ask About Visual Design Satisfaction

Visual design questions assess color schemes, typography, imagery, and whitespace usage.

Ask: “How would you rate the visual appearance of our website?” (1-5 stars) and “Does the design feel trustworthy and professional?”

How Do You Ask About Mobile Responsiveness

Mobile experience questions target smartphone and tablet visitors specifically, so these surveys should trigger only on those devices.

Key questions:

- “How easy was it to use our website on your mobile device?”

- “Did any elements appear broken or difficult to tap?”

- “Would you prefer using our mobile site or desktop version?”

How Do You Evaluate Page Load Experience

Page speed questions connect perceived performance to user satisfaction; slow pages increase bounce rates regardless of content quality.

Ask: “Did our pages load quickly enough for you?” and “Did slow loading prevent you from completing any actions?”

What Website Feedback Questions Measure Customer Satisfaction

Customer satisfaction measurement uses standardized scoring frameworks to track visitor sentiment over time.

Three primary metrics dominate: CSAT, NPS, and CES.

What Is the Customer Satisfaction Score (CSAT) Question Format

CSAT asks: “How satisfied are you with your experience today?” using a 1-5 scale. The score is usually reported as the percentage of respondents who choose 4 or 5; scores above 80% indicate strong performance.

Best deployed immediately after task completion or support interactions.
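A CSAT percentage is commonly computed as the share of respondents who pick 4 or 5 on the 1-5 scale. The sketch below assumes that convention; the function name `csat_percent`, the `satisfied_min` cutoff parameter, and the sample ratings are all illustrative.

```python
from typing import Sequence


def csat_percent(ratings: Sequence[int], satisfied_min: int = 4) -> float:
    """Return the share of ratings at or above `satisfied_min`, as a percentage."""
    if not ratings:
        raise ValueError("no ratings to score")
    satisfied = sum(1 for rating in ratings if rating >= satisfied_min)
    return 100.0 * satisfied / len(ratings)


# Hypothetical responses on a 1-5 scale: 7 of the 10 are a 4 or a 5.
sample_ratings = [5, 4, 4, 3, 5, 2, 4, 5, 1, 4]
print(f"CSAT: {csat_percent(sample_ratings):.0f}%")  # prints "CSAT: 70%"
```

If your tool reports an average rating instead of a percentage, the two numbers are not interchangeable, so check which one a benchmark refers to before comparing.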

What Is the Net Promoter Score (NPS) Question Format

The NPS survey question asks: “How likely are you to recommend us to a friend or colleague?” on a 0-10 scale.

Responses split into Promoters (9-10), Passives (7-8), and Detractors (0-6). The score is the percentage of Promoters minus the percentage of Detractors.
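NPS is conventionally the percentage of Promoters minus the percentage of Detractors, which gives a number between -100 and 100. Here is a minimal sketch of that arithmetic; the function name `nps` and the sample scores are made up for illustration.

```python
from typing import Sequence


def nps(scores: Sequence[int]) -> float:
    """Net Promoter Score: % Promoters (9-10) minus % Detractors (0-6)."""
    if not scores:
        raise ValueError("no scores to evaluate")
    promoters = sum(1 for score in scores if score >= 9)
    detractors = sum(1 for score in scores if score <= 6)
    # Passives (7-8) count toward the total but toward neither group.
    return 100.0 * (promoters - detractors) / len(scores)


# Hypothetical responses: 4 Promoters, 3 Passives, 3 Detractors.
sample_scores = [10, 9, 9, 8, 7, 7, 6, 5, 3, 10]
print(f"NPS: {nps(sample_scores):.0f}")  # prints "NPS: 10"
```

Passives still matter: they raise the respondent count, so a large Passive group pulls the score toward zero.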

What Is the Customer Effort Score (CES) Question Format

CES measures friction: “How easy was it to accomplish what you wanted today?”

Lower effort correlates with higher loyalty; Gartner research suggests CES predicts repeat purchases better than CSAT does.

What Website Feedback Questions Identify User Intent

Intent questions reveal why visitors arrived and whether they achieved their goals.

This data shapes content strategy, navigation design, and conversion rate optimization efforts.

How Do You Ask Why Visitors Came to Your Website

Trigger intent questions early in the session: “What’s the main reason for your visit today?” with options like Research, Purchase, Support, Compare prices.

How Do You Ask If Visitors Found What They Needed

The classic findability question: “Did you find what you were looking for?” (Yes/No/Partially).

Follow “No” responses with an open text field to capture specific gaps.
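The follow-up rule is simple branching logic. A minimal sketch, assuming answers arrive as plain strings: the `FOLLOW_UPS` mapping and the `next_question` helper are hypothetical, and the follow-up for “Partially” is an added assumption rather than something the rule above requires.

```python
from typing import Optional

# Follow-up prompts keyed by the answer that triggers them.
# The "Partially" entry is an assumption added for illustration.
FOLLOW_UPS = {
    "No": "What were you looking for but couldn't find?",
    "Partially": "What was missing?",
}


def next_question(answer: str) -> Optional[str]:
    """Return the open-text follow-up for an answer, or None to end the survey."""
    return FOLLOW_UPS.get(answer)


print(next_question("No"))   # prints the open-text follow-up prompt
print(next_question("Yes"))  # prints "None": no follow-up needed
```

Visitors who answer “Yes” skip the text field entirely, which keeps the survey to one click for most people.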

How Do You Determine Visitor Goal Completion

Goal completion questions pair with post-purchase survey questions or post-action triggers.

Ask: “Were you able to complete what you came here to do?” with branching logic for incomplete tasks.

What Website Feedback Questions Gather Suggestions

Suggestion-focused questions tap into visitor creativity and uncover opportunities your team missed.

Open-ended formats work best here.

How Do You Ask for Feature Requests

“What feature or functionality would make our website more useful to you?”

Keep it broad; specific prompts limit unexpected insights.

How Do You Collect Improvement Ideas

“If you could change one thing about our website, what would it be?”

This single question often surfaces the most actionable feedback in qualitative data collection.

Where Should You Place Website Feedback Surveys

Survey placement determines response quality; wrong placement captures wrong audiences.

Match survey type to page context and visitor journey stage.

What Is an On-Site Pop-Up Survey

Popup forms appear over page content based on behavior triggers like time delay or scroll depth.

Keep to 1-2 questions maximum; longer surveys tank response rates.

What Is an Exit-Intent Survey

Exit-intent popups detect cursor movement toward the top of the browser window (the tab bar, address bar, or close button) and trigger before the visitor leaves.

Ideal for capturing feedback from visitors who didn’t convert.

What Is an Embedded Feedback Form

Embedded forms sit directly on pages such as help centers or contact pages.

They are always visible and attract visitors who actively want to give input.

What Is a Post-Transaction Survey

Post-transaction surveys appear on confirmation pages or in follow-up emails after purchases, sign-ups, or downloads.

This captures feedback while the experience is still fresh.

When Should You Trigger Website Feedback Surveys

Timing affects both response rates and data quality.

Poor timing annoys visitors; optimal timing captures authentic reactions.

What Is Time-Based Survey Triggering

Time triggers fire after visitors spend a set duration on page (typically 30-60 seconds).

The delay signals engagement and avoids interrupting visitors the moment they arrive.

What Is Behavior-Based Survey Triggering

Behavior triggers activate on scroll depth (50-75%), click patterns, or page views per session.

This targets visitors more precisely than time-based triggering but requires analytics integration.

What Is Event-Based Survey Triggering

Event triggers fire after specific actions: form submissions, downloads, video completions, checkout abandonment.

Connects feedback directly to the experience you want measured.
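The three trigger types come down to one decision: has this session met the conditions yet? The sketch below models that check server-side in Python. The `Session` and `TriggerRule` classes and the event name `"checkout_abandoned"` are hypothetical; real survey tools expose their own configuration for this.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class Session:
    """Hypothetical snapshot of what a visitor has done so far."""
    seconds_on_page: float = 0.0
    scroll_depth: float = 0.0  # fraction of the page scrolled, 0.0 to 1.0
    events: Set[str] = field(default_factory=set)


@dataclass
class TriggerRule:
    """Conditions left as None are ignored."""
    min_seconds: Optional[float] = None       # time-based
    min_scroll_depth: Optional[float] = None  # behavior-based
    event: Optional[str] = None               # event-based


def should_trigger(session: Session, rule: TriggerRule) -> bool:
    """True when every condition set on the rule is met."""
    checks = []
    if rule.min_seconds is not None:
        checks.append(session.seconds_on_page >= rule.min_seconds)
    if rule.min_scroll_depth is not None:
        checks.append(session.scroll_depth >= rule.min_scroll_depth)
    if rule.event is not None:
        checks.append(rule.event in session.events)
    # An empty rule never fires, so a misconfigured survey stays hidden.
    return bool(checks) and all(checks)


time_rule = TriggerRule(min_seconds=30)
scroll_rule = TriggerRule(min_scroll_depth=0.5)
event_rule = TriggerRule(event="checkout_abandoned")

visitor = Session(seconds_on_page=45, scroll_depth=0.3)
print(should_trigger(visitor, time_rule))    # True: 45s is past the 30s threshold
print(should_trigger(visitor, scroll_rule))  # False: only 30% scrolled
```

Combining conditions in one rule, for example 30 seconds and 50% scroll depth, narrows the audience to visitors who are clearly engaged.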

How Do You Write Effective Website Feedback Questions

Question quality determines insight quality.

Follow best practices for creating feedback forms to maximize response rates and actionable data.

How Long Should a Website Feedback Survey Be

Two to four questions maximum for on-site surveys; completion rates drop 20% per additional question after four.

Email surveys can extend to 8-10 questions for engaged audiences.

What Question Order Works Best for Website Surveys

Start with closed-ended questions (easy commitment), progress to rating scales, end with one open-ended question.

Avoid starting with text fields; they create friction.
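If questions are stored with a type label, the recommended order can be applied automatically. A minimal sketch, assuming each question is a `(type, text)` tuple; the `TYPE_ORDER` labels and the `order_questions` helper are hypothetical.

```python
from typing import List, Tuple

# Lower number = asked earlier. Labels are illustrative, not a standard.
TYPE_ORDER = {"closed": 0, "rating": 1, "open": 2}


def order_questions(questions: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Sort (type, text) pairs: closed-ended first, open-ended last."""
    # sorted() is stable, so questions of the same type keep their original order.
    return sorted(questions, key=lambda question: TYPE_ORDER[question[0]])


draft = [
    ("open", "What could we do to improve your experience?"),
    ("rating", "How easy was it to navigate our website?"),
    ("closed", "Did you find what you were looking for today?"),
]

for question_type, text in order_questions(draft):
    print(f"{question_type}: {text}")
```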

How Do You Avoid Biased Survey Questions

Remove leading language (“How great was your experience?”), double-barreled questions (“Was the site fast and easy?”), and assumed premises.

Test questions with colleagues before deployment.
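A quick automated check can catch the most obvious wording problems before a colleague review. This is a deliberately crude heuristic, not a substitute for human testing: the `LEADING_WORDS` list is a made-up starting point, and flagging every “and” will produce false positives.

```python
import re
from typing import List

# Hypothetical list of loaded adjectives; extend it for your own surveys.
LEADING_WORDS = {"great", "amazing", "excellent", "awesome", "wonderful"}


def lint_question(text: str) -> List[str]:
    """Return a list of possible bias issues found in a question."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    issues = []
    if words & LEADING_WORDS:
        issues.append("leading")
    if "and" in words:
        # "and" often joins two things being asked about at once.
        issues.append("double-barreled")
    return issues


print(lint_question("How great was your experience?"))  # ['leading']
print(lint_question("Was the site fast and easy?"))     # ['double-barreled']
print(lint_question("How easy was it to navigate our website?"))  # []
```

Treat a flag as a prompt to reread the question, not as proof that it is biased.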

Best Website Feedback Survey Questions by Purpose

Different business goals require different question sets.

Use survey form templates as starting points, then customize.

Best Questions for Measuring Overall Satisfaction

- “Overall, how satisfied are you with our website?” (1-5)

- “How likely are you to return to our website?” (Very likely to Very unlikely)

- “How would you rate your overall experience today?” (Star rating)

- “Would you recommend our website to others?” (Yes/No)

- “Compared to similar websites, how does ours rate?” (Much better to Much worse)

Best Questions for Identifying Usability Issues

- “Did you encounter any errors or broken features?”

- “What task were you trying to complete, and did you succeed?”

- “Which part of the website was most confusing?”

- “How easy was it to find the information you needed?” (1-5)

- “Did the website work as you expected?”

Best Questions for E-Commerce Websites

- “What almost stopped you from completing your purchase?”

- “How would you rate the checkout process?” (1-5)

- “Was product information detailed enough to make a decision?”

- “Did you find the shipping and return policies clear?”

- “What would make you more likely to buy from us again?”

E-commerce sites benefit from the checkout optimization insights these questions reveal.

Best Questions for Content Websites

- “Did this article answer your question completely?”

- “How would you rate the quality of our content?” (1-5)

- “What topics would you like us to cover?”

- “Was this content easy to understand?”

- “Would you share this content with others?”

Best Questions for SaaS Websites

- “What feature would make you upgrade to a paid plan?”

- “How clear is our pricing information?” (1-5)

- “What’s preventing you from signing up today?”

- “Did you find the product demo helpful?”

- “How does our solution compare to alternatives you’ve considered?”

SaaS companies should review their lead generation strategies alongside feedback collection.

What Tools Collect Website Feedback Surveys

Survey deployment requires dedicated tools or WordPress survey plugins depending on your platform.

Key players: Hotjar, Qualaroo, SurveyMonkey, Qualtrics, Mouseflow, Contentsquare, UserSnap, Medallia, GetFeedback, Usabilla, Mopinion.

Most integrate with Google Analytics for behavioral segmentation.

How Do You Analyze Website Feedback Survey Results

Collection without analysis wastes effort.

Build systematic processes for analyzing survey data and translating insights into action.

What Metrics Should You Track

Core metrics: response rate (benchmark: 10-30%), completion rate, CSAT score, NPS score, CES score.

Segment by traffic source, device type, and user journey stage for deeper insights.
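The core rates and a basic segmentation take only a few lines to compute. The sketch below assumes response rate means surveys started divided by surveys shown, and completion rate means surveys completed divided by surveys started; tools define these differently, so check yours. The function names and sample data are illustrative.

```python
from collections import defaultdict
from typing import Dict, List


def response_rate(shown: int, started: int) -> float:
    """Percentage of displayed surveys that visitors started."""
    return 100.0 * started / shown if shown else 0.0


def completion_rate(started: int, completed: int) -> float:
    """Percentage of started surveys that visitors finished."""
    return 100.0 * completed / started if started else 0.0


def average_rating_by(responses: List[dict], segment_key: str) -> Dict[str, float]:
    """Average the 'rating' field within each value of `segment_key`."""
    ratings = defaultdict(list)
    for response in responses:
        ratings[response[segment_key]].append(response["rating"])
    return {segment: sum(values) / len(values) for segment, values in ratings.items()}


# Hypothetical export: one dict per response.
responses = [
    {"device": "Desktop", "rating": 5},
    {"device": "Smartphone", "rating": 2},
    {"device": "Desktop", "rating": 4},
    {"device": "Smartphone", "rating": 3},
]

print(response_rate(shown=1000, started=150))        # 15.0
print(completion_rate(started=150, completed=120))   # 80.0
print(average_rating_by(responses, "device"))        # {'Desktop': 4.5, 'Smartphone': 2.5}
```

A gap like the one between Desktop and Smartphone in the sample data is the kind of signal an overall average hides.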

What Is a Good CSAT Benchmark for Websites

CSAT scores between 75-85% indicate solid performance; above 85% is excellent.

Forrester Research and Gartner publish industry-specific benchmarks quarterly.

How Do You Act on Website Feedback Data

Prioritize fixes by frequency and impact; a bug mentioned by 40% of respondents beats a suggestion from 2%.
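One way to rank issues by frequency and impact is to multiply the share of respondents who mention an issue by an impact weight. That scoring formula, the weights, and the issue names below are assumptions for illustration; choose weights that reflect your own business priorities.

```python
from typing import Dict, List, Tuple


def prioritize(
    mentions: Dict[str, int],
    total_respondents: int,
    impact: Dict[str, int],
) -> List[Tuple[str, float]]:
    """Rank issues by (share of respondents mentioning it) x (impact weight)."""
    if total_respondents <= 0:
        raise ValueError("total_respondents must be positive")
    scored = {
        issue: (count / total_respondents) * impact.get(issue, 1)
        for issue, count in mentions.items()
    }
    return sorted(scored.items(), key=lambda item: item[1], reverse=True)


# Hypothetical tallies from 100 respondents.
mentions = {"checkout bug": 40, "slow search": 18, "dark mode request": 2}
# Hypothetical impact weights: 3 = blocks revenue, 1 = nice to have.
impact = {"checkout bug": 3, "slow search": 2, "dark mode request": 1}

for issue, score in prioritize(mentions, total_respondents=100, impact=impact):
    print(f"{issue}: {score:.2f}")
```

With these numbers the checkout bug ranks first and the dark mode request last, matching the 40% versus 2% intuition.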

Close the feedback loop by informing respondents when you implement their suggestions.

Avoid survey fatigue by limiting survey frequency and rotating question sets.
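Limiting frequency usually means enforcing a cooldown per visitor. A minimal sketch, assuming you store the time each visitor last saw a survey (for example in a cookie or user record): the `can_show_survey` helper and the 30-day default are hypothetical choices, not a recommended standard.

```python
from datetime import datetime, timedelta
from typing import Optional


def can_show_survey(
    last_shown: Optional[datetime],
    now: datetime,
    cooldown_days: int = 30,
) -> bool:
    """Allow a survey only if the visitor has not seen one within the cooldown."""
    if last_shown is None:
        return True  # first survey for this visitor
    return now - last_shown >= timedelta(days=cooldown_days)


now = datetime(2024, 6, 30)
print(can_show_survey(None, now))                   # True: never surveyed
print(can_show_survey(datetime(2024, 6, 20), now))  # False: shown 10 days ago
print(can_show_survey(datetime(2024, 5, 1), now))   # True: shown 60 days ago
```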

FAQ on Website Feedback Survey Questions

What is a website feedback survey?

A website feedback survey is a questionnaire presented to visitors that collects opinions about their browsing experience. It measures satisfaction, usability, and content quality through rating scales, multiple choice, and open-ended questions.

How many questions should a website feedback survey have?

On-site surveys perform best with 2-4 questions maximum. Completion rates drop significantly after four questions. Email-based follow-up surveys can extend to 8-10 questions for engaged audiences willing to provide detailed feedback.

When is the best time to trigger a website feedback survey?

Trigger surveys after meaningful interactions: post-purchase, post-support contact, or after 30-60 seconds of page engagement. Exit-intent timing captures feedback from visitors about to leave. Avoid interrupting active browsing or checkout processes.

What is the difference between CSAT and NPS surveys?

Customer Satisfaction Score (CSAT) measures immediate satisfaction with a specific interaction using a 1-5 scale. Net Promoter Score (NPS) measures overall loyalty and recommendation likelihood using a 0-10 scale.

How do I increase website survey response rates?

Keep surveys short. Use clear, simple language. Offer incentives when appropriate. Time triggers based on user behavior rather than arbitrary delays. Mobile-optimized web forms increase completion rates on smartphones.

What tools can I use to create website feedback surveys?

Popular options include Hotjar, Qualaroo, SurveyMonkey, Qualtrics, Mouseflow, and Contentsquare. WordPress users can implement surveys through dedicated plugins. Most tools integrate with Google Analytics for visitor segmentation.

Should I use open-ended or closed-ended questions?

Use both. Closed-ended questions (rating scales, yes/no) provide quantifiable metrics for tracking trends. Open-ended questions capture unexpected insights and specific suggestions. Start with closed-ended, end with one open-ended question.

Where should I place feedback surveys on my website?

High-traffic pages, post-transaction confirmation screens, help center pages, and exit-intent triggers work best. Match placement to your goal: checkout pages for purchase feedback, content pages for relevance feedback.

How do I analyze website feedback survey results?

Track response rates, CSAT scores, NPS scores, and completion rates. Segment responses by traffic source, device type, and user journey stage. Prioritize issues by frequency and business impact when planning improvements.

What questions should I avoid in website feedback surveys?

Avoid leading questions, double-barreled questions asking two things at once, and questions with assumed premises. Skip jargon visitors won’t understand. Never ask for information you won’t act on.

Conclusion

The right website feedback survey questions transform anonymous visitor behavior into actionable insights.

Start with a clear goal. Match question types to that goal. Place surveys where they won’t disrupt the user experience.

Tools like Hotjar, Mouseflow, and Typeform make deployment straightforward.

Track your response rates and Customer Effort Score alongside traditional metrics.

The feedback loop matters most. Collecting data without acting on it wastes everyone’s time.

Prioritize fixes by frequency. Close the loop by telling visitors when you implement their suggestions.

Your visitor feedback collection strategy should evolve as your site does. Review questions quarterly. Rotate formats to prevent survey fatigue.

The sites that improve fastest are the ones that ask, listen, and adapt.