Most corporate training programs collect feedback. Few collect feedback that actually changes anything.

The difference comes down to asking the right training survey questions, ones that measure knowledge retention, trainer effectiveness, and content relevance instead of just “did you enjoy the session.”

Organizations that align their post-training assessment with frameworks like the Kirkpatrick Model are better positioned to decide where to invest L&D budgets, because they measure more than participant satisfaction.

This guide covers every type of training evaluation question you’ll need, from pre-training baselines to post-training feedback, plus how to write them, distribute them, and turn the results into action.

What Are Training Survey Questions

Training survey questions are structured prompts used by organizations to collect participant feedback about a learning experience, course, or professional development program.

They measure everything from content relevance and instructor effectiveness to knowledge retention and skill application after a workshop or eLearning module.

HR departments, L&D teams, and training managers use these questions inside feedback forms distributed before, during, or after a training session.

The collected data feeds directly into training program evaluation, helping decision-makers figure out what worked, what flopped, and where money got wasted.

Without them, you’re guessing. And guessing with training budgets that often run into six figures per year is a bad idea.

Training Survey Questions

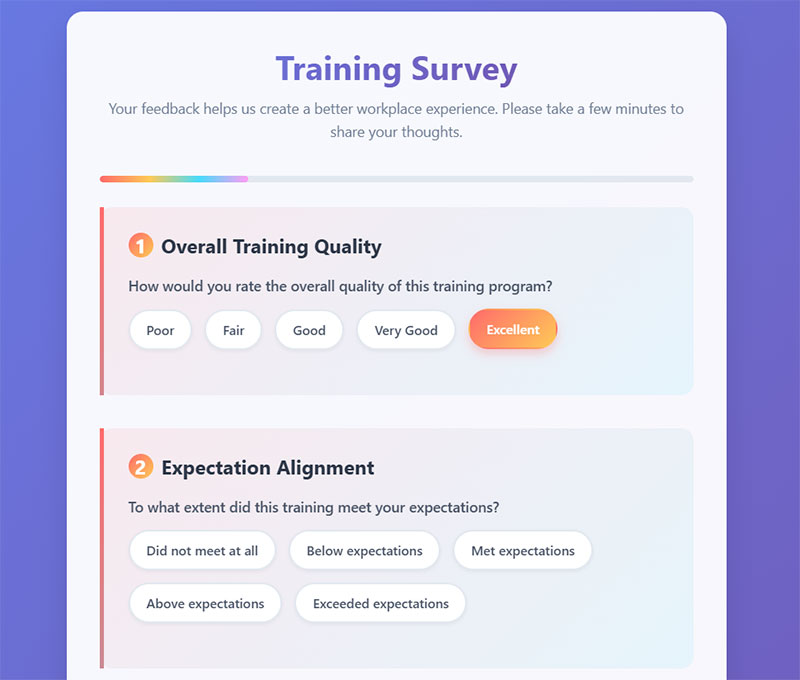

Overall Training Evaluation

Overall Quality Rating

Question: How would you rate the overall quality of this training program?

Type: Multiple Choice (1–5 scale from “Poor” to “Excellent”)

Purpose: Provides a comprehensive assessment of the entire training experience and serves as a key performance indicator for program success.

When to Ask: At the end of the training program during the final evaluation survey.

Expectation Alignment

Question: To what extent did this training meet your expectations?

Type: Multiple Choice (1–5 scale from “Did not meet expectations at all” to “Exceeded expectations”)

Purpose: Measures the gap between participant expectations and actual training delivery to identify areas for improvement in marketing and content design.

When to Ask: During the post-training evaluation, ideally within 24-48 hours of completion.

Recommendation Likelihood

Question: Would you recommend this training to a colleague?

Type: Multiple Choice (Yes/No or 0–10 Net Promoter Score scale)

Purpose: Gauges participant satisfaction and identifies training advocates who can help promote future programs through word-of-mouth.

When to Ask: In follow-up surveys 1-2 weeks after training completion when participants have had time to reflect.
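If you use the 0–10 scale, the standard Net Promoter Score calculation treats 9–10 as promoters, 7–8 as passives, and 0–6 as detractors, then subtracts the detractor percentage from the promoter percentage. The Python sketch below assumes ratings arrive as a plain list of integers; `net_promoter_score` is a hypothetical helper name, not part of any survey tool's API.

```python
def net_promoter_score(ratings: list[int]) -> float:
    """Return NPS (from -100 to +100) for 0-10 'likelihood to recommend' ratings."""
    if not ratings:
        raise ValueError("no ratings to score")
    if any(not 0 <= r <= 10 for r in ratings):
        raise ValueError("ratings must be between 0 and 10")
    promoters = sum(1 for r in ratings if r >= 9)   # 9-10
    detractors = sum(1 for r in ratings if r <= 6)  # 0-6; 7-8 are passives
    return 100 * (promoters - detractors) / len(ratings)


ratings = [10, 9, 9, 8, 7, 6, 5, 10, 3, 9]
print(net_promoter_score(ratings))  # 20.0
```

Passives count toward the total but not toward either group, which is why a cohort made up entirely of 7s and 8s scores zero.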

Application Intent

Question: How likely are you to apply what you learned in your day-to-day work?

Type: Multiple Choice (1–5 scale from “Very unlikely” to “Very likely”)

Purpose: Predicts training transfer and helps identify potential barriers to implementation before they occur.

When to Ask: Immediately after training and again in follow-up surveys 30-60 days later to track changes in intent.

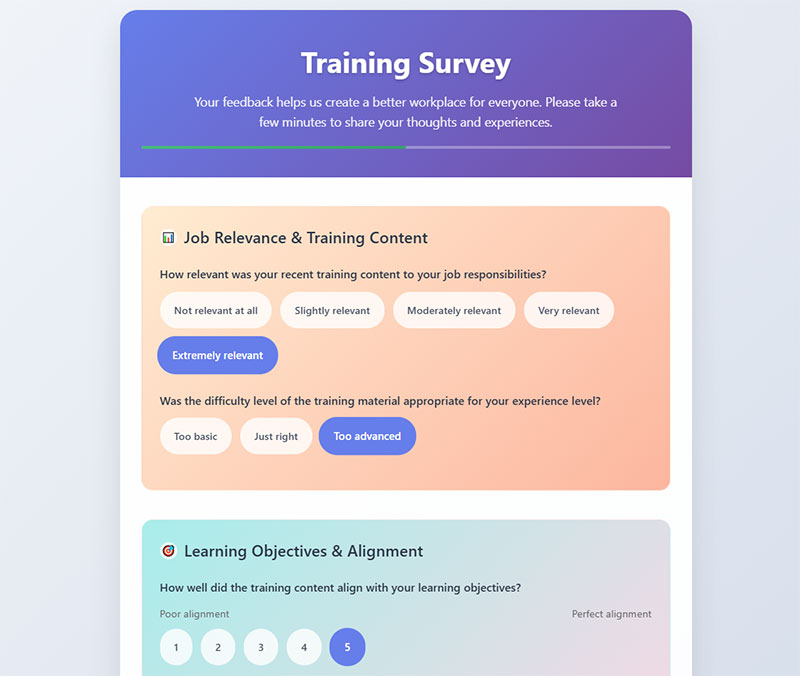

Content and Relevance

Job Relevance

Question: How relevant was the training content to your job responsibilities?

Type: Multiple Choice (1–5 scale from “Not relevant at all” to “Extremely relevant”)

Purpose: Ensures training content aligns with participant needs and helps identify content that may need updating or customization.

When to Ask: During mid-training check-ins and final evaluations to capture both immediate and overall impressions.

Difficulty Appropriateness

Question: Was the difficulty level of the material appropriate for your experience level?

Type: Multiple Choice (“Too basic,” “Just right,” “Too advanced”)

Purpose: Helps calibrate content difficulty and identify when different skill level tracks or prerequisites might be needed.

When to Ask: During training breaks and in post-training surveys to allow participants time to process the material.

Most Valuable Topics

Question: Which topics were most valuable to you and why?

Type: Open-ended with optional ranking of topics

Purpose: Identifies high-impact content areas that should be emphasized in future training and helps prioritize curriculum development efforts.

When to Ask: At the end of each training module and in comprehensive post-training evaluations.

Content Gaps

Question: Were there any topics you felt were missing or needed more coverage?

Type: Open-ended

Purpose: Reveals curriculum gaps and opportunities for program expansion or refinement based on participant expertise and needs.

When to Ask: During final evaluations when participants have experienced the full program scope.

Learning Objective Alignment

Question: How well did the training content align with your learning objectives?

Type: Multiple Choice (1–5 scale from “Poor alignment” to “Perfect alignment”)

Purpose: Measures whether the training delivered on its promised outcomes and helps refine objective-setting for future programs.

When to Ask: Post-training, after participants have had time to compare their initial goals with actual outcomes.

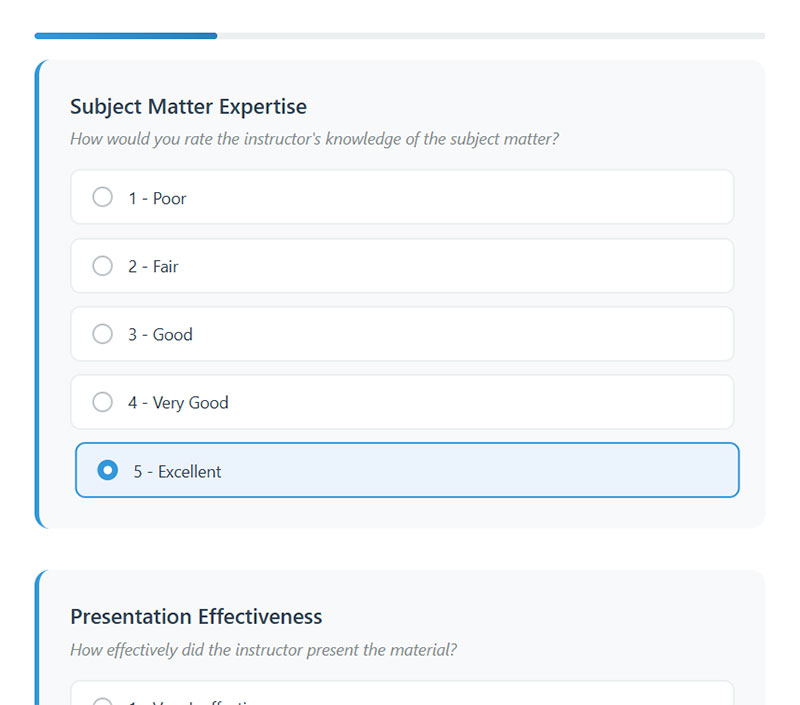

Instructor/Facilitator Effectiveness

Subject Matter Expertise

Question: How would you rate the instructor’s knowledge of the subject matter?

Type: Multiple Choice (1–5 scale from “Poor” to “Excellent”)

Purpose: Evaluates instructor credibility and identifies needs for additional subject matter training or expert guest speakers.

When to Ask: During mid-training evaluations and final assessments to capture sustained impressions of expertise.

Presentation Effectiveness

Question: How effectively did the instructor present the material?

Type: Multiple Choice (1–5 scale from “Very ineffective” to “Very effective”)

Purpose: Assesses instructional delivery skills and helps identify areas for instructor development or coaching.

When to Ask: After each major training session and in comprehensive post-training evaluations.

Participation Encouragement

Question: Did the instructor encourage participation and answer questions clearly?

Type: Multiple Choice (1–5 scale from “Strongly disagree” to “Strongly agree”)

Purpose: Measures the instructor’s ability to create an engaging, interactive learning environment and provide clear explanations.

When to Ask: During training breaks and final evaluations to capture the full range of interaction experiences.

Pace Management

Question: How well did the instructor manage the pace of the training?

Type: Multiple Choice (“Too slow,” “Just right,” “Too fast”)

Purpose: Identifies pacing issues that can significantly impact learning effectiveness and participant engagement.

When to Ask: At the end of each training day for multi-day programs, or during natural breaks in single-day sessions.

Training Methods and Materials

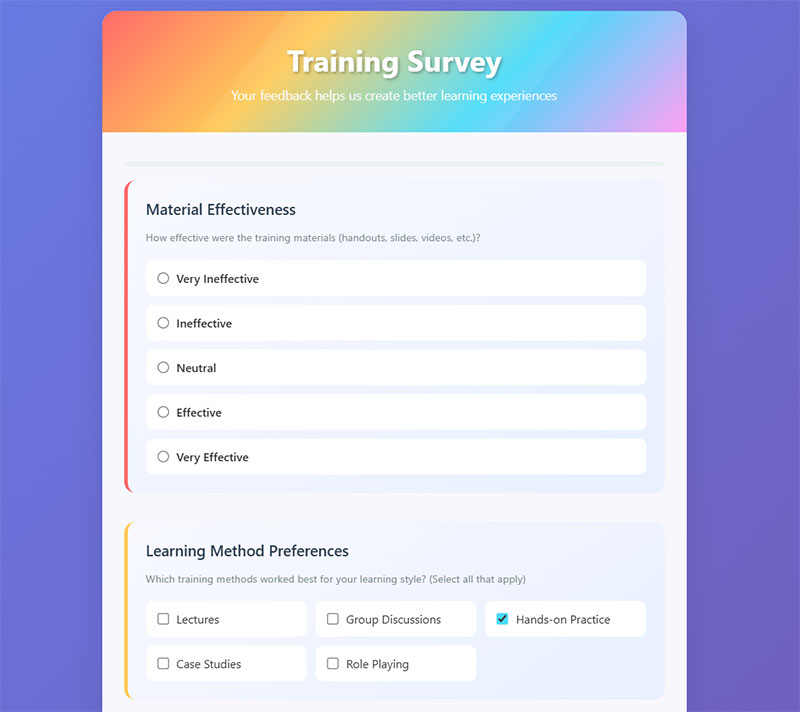

Material Effectiveness

Question: How effective were the training materials (handouts, slides, videos, etc.)?

Type: Multiple Choice (1–5 scale from “Very ineffective” to “Very effective”)

Purpose: Evaluates the quality and utility of supporting materials to guide future resource development and procurement decisions.

When to Ask: During training sessions when materials are fresh in participants’ minds and in post-training follow-ups.

Learning Method Preferences

Question: Which training methods worked best for your learning style?

Type: Multiple Choice with options like “Lectures,” “Group discussions,” “Hands-on practice,” “Case studies,” “Role playing”

Purpose: Identifies preferred learning modalities to optimize future training design and accommodate diverse learning preferences.

When to Ask: After experiencing multiple training methods, typically mid-way through and at the end of the program.

Activity Helpfulness

Question: Were the hands-on activities and exercises helpful?

Type: Multiple Choice (1–5 scale from “Not helpful at all” to “Extremely helpful”)

Purpose: Measures the effectiveness of experiential learning components and guides decisions about activity inclusion and design.

When to Ask: Immediately after major activities and in comprehensive post-training evaluations.

Technology Usage

Question: How would you rate the use of technology during the training?

Type: Multiple Choice (1–5 scale from “Poor” to “Excellent”)

Purpose: Assesses whether technology enhanced or hindered the learning experience and identifies areas for technical improvement.

When to Ask: During training sessions when technical experiences are recent and in final evaluations for overall assessment.

Learning Outcomes

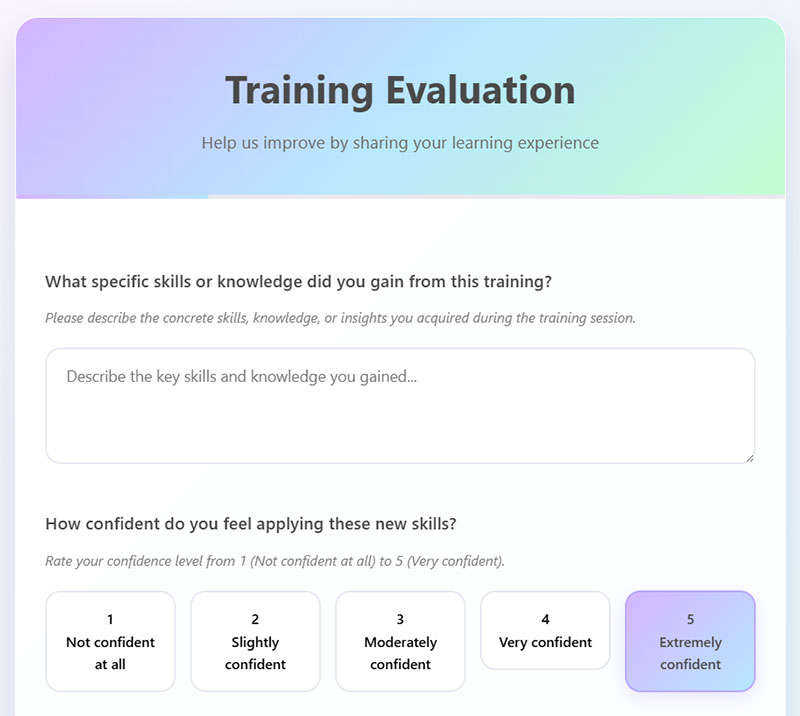

Skills and Knowledge Gained

Question: What specific skills or knowledge did you gain from this training?

Type: Open-ended

Purpose: Captures concrete learning outcomes and helps demonstrate training ROI while identifying successful curriculum elements.

When to Ask: Immediately post-training and in follow-up surveys to track retention and application of learning.

Application Confidence

Question: How confident do you feel applying these new skills?

Type: Multiple Choice (1–5 scale from “Not confident at all” to “Very confident”)

Purpose: Measures self-efficacy and predicts successful skill transfer while identifying areas where additional support may be needed.

When to Ask: At training completion and in follow-up surveys to track confidence changes over time.

Key Learning Insight

Question: What was the most important thing you learned?

Type: Open-ended

Purpose: Identifies the highest-impact learning moments and helps prioritize core curriculum elements for future training design.

When to Ask: During training wrap-up sessions and in post-training reflection surveys.

Unmet Learning Objectives

Question: Which learning objectives do you feel were not adequately addressed?

Type: Multiple Choice list of stated objectives plus open-ended option

Purpose: Identifies curriculum gaps and helps refine learning objectives to ensure they are achievable and measurable.

When to Ask: In post-training evaluations after participants have had time to reflect on the complete learning experience.

Logistics and Environment

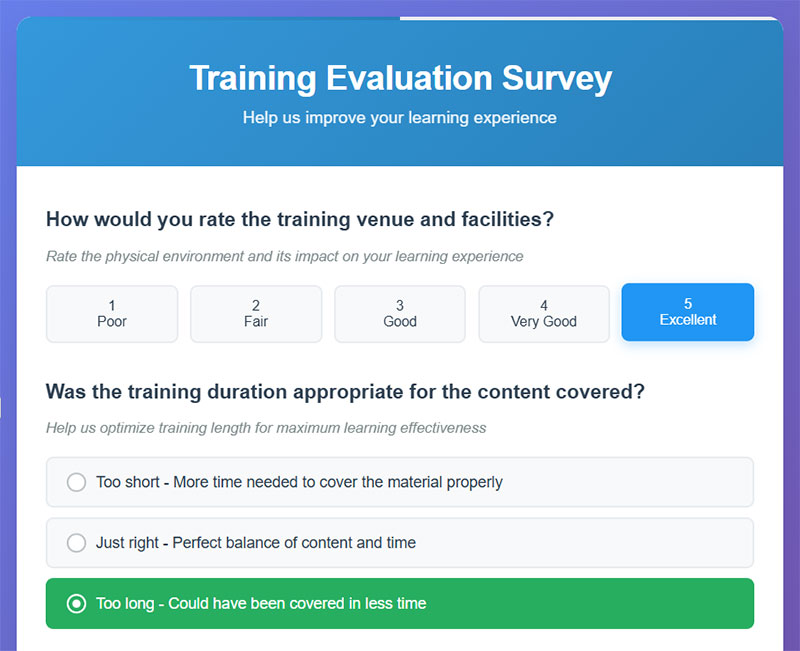

Venue and Facilities Rating

Question: How would you rate the training venue and facilities?

Type: Multiple Choice (1–5 scale from “Poor” to “Excellent”)

Purpose: Evaluates whether the physical environment supported or hindered learning and informs future venue selection decisions.

When to Ask: During training sessions and in post-training evaluations to capture both immediate and overall impressions.

Duration Appropriateness

Question: Was the training duration appropriate for the content covered?

Type: Multiple Choice (“Too short,” “Just right,” “Too long”)

Purpose: Helps optimize training length to maximize learning while respecting participant time constraints and attention spans.

When to Ask: At the end of training sessions and in follow-up surveys when participants can better assess content-to-time ratios.

Schedule Convenience

Question: How convenient were the training dates and times?

Type: Multiple Choice (1–5 scale from “Very inconvenient” to “Very convenient”)

Purpose: Identifies scheduling barriers that might affect attendance and participation quality in future training offerings.

When to Ask: During registration follow-up and post-training evaluations to inform future scheduling decisions.

Technical Issues Impact

Question: Were there any technical issues that interfered with your learning?

Type: Yes/No with open-ended follow-up for details

Purpose: Identifies technology-related barriers to learning and helps prioritize technical infrastructure improvements.

When to Ask: During training sessions when issues occur and in post-training surveys for comprehensive technical assessment.

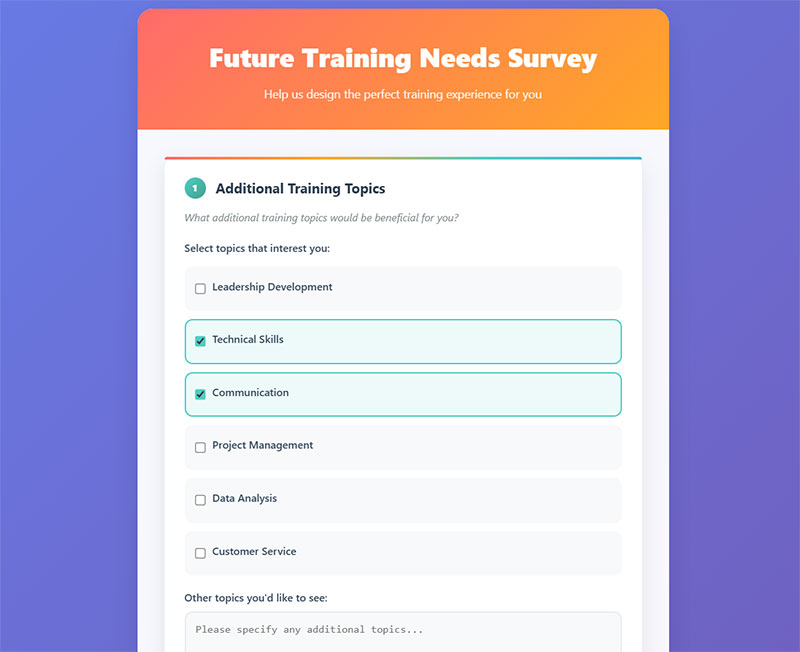

Future Training Needs

Additional Training Topics

Question: What additional training topics would be beneficial for you?

Type: Open-ended with optional multiple choice list of potential topics

Purpose: Identifies future training opportunities and helps build a pipeline of relevant programs that meet ongoing participant needs.

When to Ask: In post-training surveys and periodic needs assessment surveys throughout the year.

Preferred Training Format

Question: What format would you prefer for future training sessions?

Type: Multiple Choice (“In-person,” “Virtual/online,” “Hybrid,” “Self-paced,” “Microlearning”)

Purpose: Guides training delivery method selection to optimize accessibility, engagement, and cost-effectiveness.

When to Ask: In post-training evaluations and periodic preference surveys to track changing format preferences.

Training Frequency Preference

Question: How often would you like to receive training on this topic?

Type: Multiple Choice (“One-time only,” “Annually,” “Semi-annually,” “Quarterly,” “As needed”)

Purpose: Helps plan training cycles and refresh schedules to maintain skill currency without causing training fatigue.

When to Ask: In post-training surveys and follow-up assessments when participants can better judge their ongoing learning needs.

Advanced Training Interest

Question: Are there specific areas where you need more advanced training?

Type: Open-ended with optional skill level assessment

Purpose: Identifies opportunities for advanced or specialized training tracks and helps create learning pathways for skill development.

When to Ask: In post-training evaluations and periodic skill gap assessments throughout the year.

Impact and Application

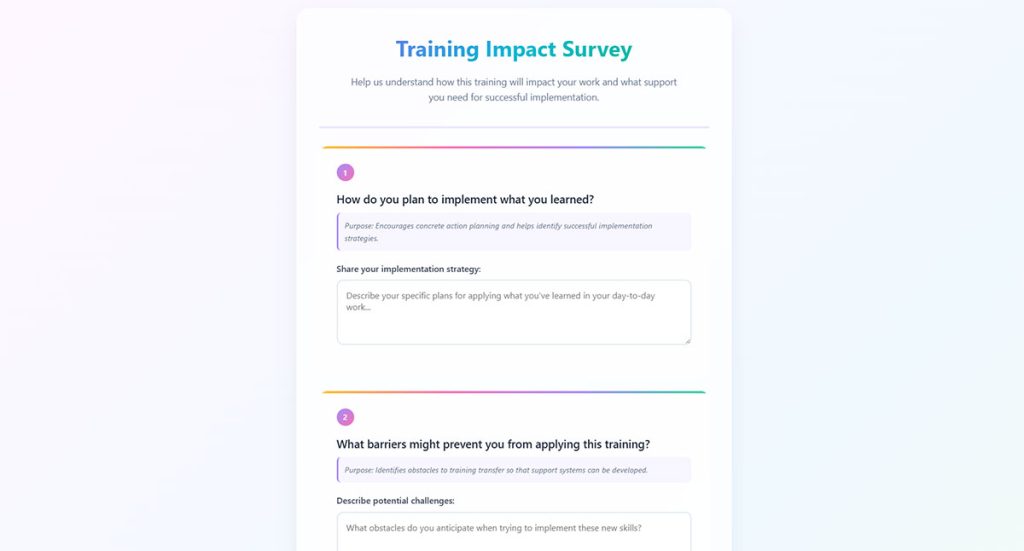

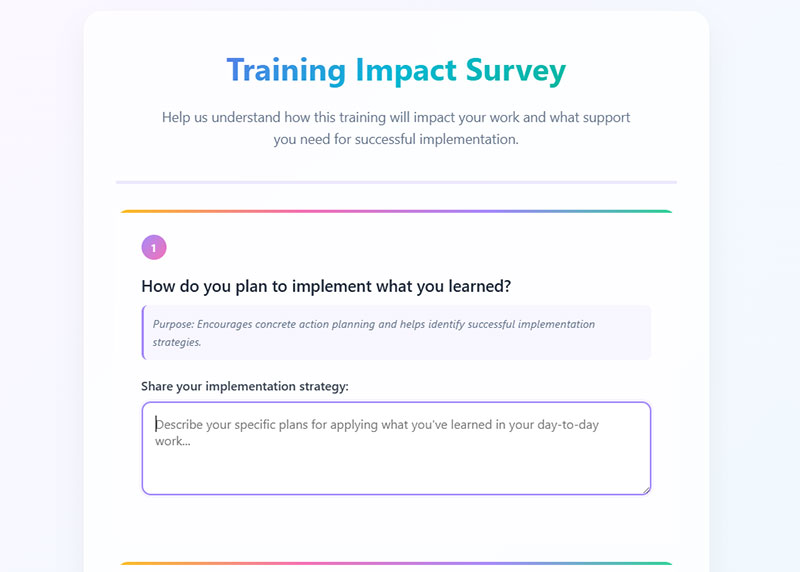

Implementation Planning

Question: How do you plan to implement what you learned?

Type: Open-ended

Purpose: Encourages concrete action planning and helps identify successful implementation strategies that can be shared with future participants.

When to Ask: At the end of training sessions and in 30-day follow-up surveys to track implementation progress.

Implementation Barriers

Question: What barriers might prevent you from applying this training?

Type: Open-ended with optional multiple choice list of common barriers

Purpose: Identifies obstacles to training transfer so that support systems and resources can be developed to overcome them.

When to Ask: Immediately post-training and in follow-up surveys to capture both anticipated and actual barriers.

Performance Impact Expectation

Question: How will this training help improve your job performance?

Type: Open-ended

Purpose: Connects training outcomes to business results and helps demonstrate ROI while identifying areas where impact measurement is needed.

When to Ask: In post-training evaluations and follow-up surveys to track both expected and actual performance improvements.

Support Needs

Question: What support do you need to successfully apply these skills?

Type: Open-ended with optional multiple choice list of support options

Purpose: Identifies post-training support requirements and helps design follow-up resources, coaching, or reinforcement programs.

When to Ask: At training completion and in follow-up surveys to ensure ongoing support aligns with actual implementation challenges.

Why Do Organizations Use Training Survey Questions

Companies run training programs constantly. Onboarding, compliance, leadership development, technical upskilling. But running a program and knowing whether it actually changed behavior are two completely different things.

Training survey questions close that gap.

They give organizations a direct line to learner satisfaction data. Satisfaction alone doesn't prove learning, but combined with knowledge checks and follow-up measures it helps track engagement, knowledge transfer, and long-term skill application on the job.

The Kirkpatrick Model, developed by Donald Kirkpatrick in the 1950s and still the most widely referenced training evaluation framework, breaks evaluation into four levels: reaction, learning, behavior, and results.

Training surveys primarily address Level 1 (reaction) and parts of Level 2 (learning). They capture how participants felt about the experience and whether they believe they gained new competencies.

Organizations also use them to:

- Identify gaps in instructional design before rolling out training company-wide

- Benchmark trainer and facilitator performance across sessions

- Justify training ROI to stakeholders using quantitative survey analysis

- Spot recurring complaints about content delivery methods or pacing

- Align with ISO 10015 guidelines and internal quality standards for corporate learning

SHRM and the Association for Talent Development (ATD) both recommend post-training assessments as a baseline practice for any structured employee development initiative.

One thing that trips people up: confusing a training survey with a training test. Tests measure whether someone learned the material. Surveys measure whether the training itself was any good. Both matter, but they answer different questions.

What Types of Training Survey Questions Exist

Not all questions do the same job. The types of survey questions you pick shape the kind of data you get back, and how useful that data actually is.

Here’s where most people go wrong. They default to one question format for the entire survey. That creates blind spots.

A strong training evaluation survey mixes quantitative and qualitative question types to capture both measurable scores and the “why” behind those scores.

What Are Likert Scale Questions for Training Surveys

Likert scale questions ask respondents to rate agreement on a fixed scale, typically 1-5 or 1-7. Example: “The training content was relevant to my daily responsibilities” with options from “Strongly Disagree” to “Strongly Agree.”

They produce clean numerical data that’s easy to average, compare across sessions, and track over time.

What Are Open-Ended Training Survey Questions

These collect unfiltered qualitative training data. “What would you change about this training?” is a classic.

They’re harder to analyze at scale but surface insights no rating scale ever will. Took me years to appreciate how much gold hides in free-text responses.

What Are Multiple-Choice Training Survey Questions

Respondents pick from predefined options. Works well for factual or categorical questions like “Which module was most useful?” or “How did you attend this training?” (in-person, virtual, self-paced).

Fast to answer, fast to analyze. Keep option lists under 7 choices or completion rates drop.

What Are Rating Scale Questions for Training Feedback

Similar to Likert but measuring intensity rather than agreement. “Rate the trainer’s knowledge of the subject” on a 1-10 scale.

These show up heavily in instructor evaluation forms and facilitator performance reviews.

What Are Yes/No Questions in Training Surveys

Binary questions like “Would you recommend this training to a colleague?” give you a quick pulse check. They work like a simplified Net Promoter Score (NPS) question, though standard NPS asks for a 0–10 rating rather than a yes or no.

Use sparingly. They confirm or deny but never explain.

What Should a Pre-Training Survey Include

A pre-training survey runs before the first session starts. Its job is to establish a baseline, not collect feedback.

Most L&D teams skip this step entirely, which makes it nearly impossible to measure actual knowledge gain later. If you don’t know where learners started, you can’t prove they moved.

Pre-training surveys align with the “A” (Analysis) phase of the ADDIE Model used in instructional design. They inform content calibration, pacing decisions, and facilitator preparation.

How Do Pre-Training Surveys Measure Existing Knowledge

Ask participants to self-rate their current confidence or familiarity with specific topics. Example: “How would you rate your current understanding of data privacy regulations?” on a 1-5 scale.

Pair self-assessments with 2-3 actual knowledge-check questions to compare perception against reality. The gap between the two is where the real story lives.
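One way to quantify that gap, sketched in Python under two assumptions: self-ratings use a 1–5 scale, and the quiz score is rescaled linearly onto the same 1–5 range so the two numbers can be subtracted. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PreTrainingResponse:
    participant: str
    self_rating: int   # self-assessed understanding, 1-5
    quiz_correct: int  # knowledge-check questions answered correctly
    quiz_total: int    # knowledge-check questions asked (e.g. 2-3)


def perception_gap(r: PreTrainingResponse) -> float:
    """Self-rating minus the quiz result rescaled onto the same 1-5 range.

    Positive: the participant rates themselves higher than the quiz suggests.
    Negative: they know more than they think.
    """
    quiz_on_scale = 1 + 4 * (r.quiz_correct / r.quiz_total)
    return r.self_rating - quiz_on_scale


for r in [
    PreTrainingResponse("p01", self_rating=4, quiz_correct=1, quiz_total=3),
    PreTrainingResponse("p02", self_rating=2, quiz_correct=3, quiz_total=3),
]:
    print(r.participant, round(perception_gap(r), 2))
```

A large positive gap points to overconfidence worth addressing early in the session; a large negative one suggests the participant may be under-challenged.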

What Questions Identify Training Expectations

These capture what participants hope to walk away with. “What specific skills do you expect to gain from this training?” or “Which aspects of [topic] do you find most challenging in your current role?”

Expectations data helps trainers adjust emphasis in real time. It also sets a measurable benchmark for post-training comparison.

What Should a Post-Training Survey Include

Post-training surveys are where most organizations focus their evaluation energy. For good reason. This is the moment the experience is fresh enough for participants to give honest, detailed answers.

A solid post-training evaluation survey covers four areas: knowledge retention, trainer effectiveness, content relevance, and overall learner experience.

The trick is keeping it tight. Participants just sat through a training session. Nobody wants to then fill out a 40-question survey. Following best practices for creating feedback forms helps keep response quality high and completion rates above 70%.

How Do Post-Training Surveys Measure Knowledge Retention

Compare post-training self-assessments against pre-training baselines. “How confident are you now in applying [specific skill]?” measured on the same scale used before the training.
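That comparison only works if each post-training answer can be matched to the same person's pre-training answer, which means collecting a stable (possibly pseudonymous) participant ID. A minimal sketch, assuming both surveys store ratings in dictionaries keyed by that hypothetical ID:

```python
from statistics import mean


def confidence_gains(pre: dict[str, int], post: dict[str, int]) -> dict[str, int]:
    """Per-participant change on the same 1-5 scale.

    Only participants who answered both surveys are included; comparing
    unmatched groups would mix a change in who responded with a change in skill.
    """
    return {pid: post[pid] - pre[pid] for pid in pre.keys() & post.keys()}


pre = {"p01": 2, "p02": 3, "p03": 2, "p04": 4}
post = {"p01": 4, "p02": 3, "p03": 4}  # p04 skipped the post-training survey

gains = confidence_gains(pre, post)
print(sorted(gains.items()))           # [('p01', 2), ('p02', 0), ('p03', 2)]
print(round(mean(gains.values()), 2))  # 1.33
```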

Some organizations add short scenario-based questions to test applied understanding, not just perceived confidence. According to Bloom’s Taxonomy, application-level questions reveal more than simple recall.

What Questions Evaluate Trainer Effectiveness

“The instructor demonstrated deep subject matter expertise” (Likert scale). “The facilitator encouraged questions and participation” (Likert scale). “What could the trainer improve?” (open-ended).

Always separate content quality from delivery quality. A brilliant curriculum taught poorly still fails, and trainer evaluation questions help isolate which variable needs fixing.

How Do Post-Training Surveys Assess Content Relevance

Ask directly: “How relevant was this training to your current job responsibilities?” and “Which topics covered will you apply within the next 30 days?”

Content relevance scores below 3.5 out of 5 across a cohort signal a serious alignment problem between what L&D built and what employees actually need. That’s a redesign trigger, not a minor tweak.
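Applying that 3.5 cut-off across cohorts is a simple filter. The sketch below assumes relevance ratings are grouped under a cohort label (the labels are made up for illustration) and flags every cohort whose mean falls below the threshold.

```python
from statistics import mean

RELEVANCE_THRESHOLD = 3.5  # cut-off from the text, on a 1-5 scale


def cohorts_needing_redesign(scores_by_cohort: dict[str, list[int]],
                             threshold: float = RELEVANCE_THRESHOLD) -> list[str]:
    """Return cohorts whose mean relevance rating falls below the threshold."""
    return sorted(
        cohort for cohort, scores in scores_by_cohort.items()
        if scores and mean(scores) < threshold  # skip cohorts with no responses
    )


relevance = {
    "2024-Q1 sales": [4, 5, 4, 3, 4],    # mean 4.0
    "2024-Q1 support": [3, 2, 4, 3, 3],  # mean 3.0
    "2024-Q2 sales": [3, 4, 3, 4, 3],    # mean 3.4
}
print(cohorts_needing_redesign(relevance))  # ['2024-Q1 support', '2024-Q2 sales']
```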

For organizations managing these surveys through their website, building them with a survey form tool simplifies distribution and keeps response data organized in one place.

How to Write Effective Training Survey Questions

Bad questions produce bad data. And bad data leads to training programs that keep repeating the same mistakes quarter after quarter.

Writing strong training evaluation survey questions comes down to three things: clarity, neutrality, and purpose. Every question needs a reason for being there. If you can’t explain what you’ll do with the answer, cut it.

What Makes a Training Survey Question Clear

One idea per question. “Was the training useful and well-paced?” is two questions jammed together, and the response becomes meaningless. Split them.

Use specific language tied to observable outcomes. “Rate the usefulness of Module 3 on conflict resolution techniques” beats “Rate the training content” every time.

How to Avoid Bias in Training Survey Questions

Leading questions like “How excellent was the trainer’s presentation?” push respondents toward positive answers. Neutral framing: “How would you rate the trainer’s presentation skills?”

Reduce acquiescence bias by mixing positively and negatively worded statements in your Likert scale sections. Applying solid form design principles to your survey layout also reduces position bias and primacy effects.
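Mixing wording has a scoring consequence: before averaging, negatively worded items have to be reverse-coded so that a higher number always means a more favourable response. On a 1–5 scale that flips 1 to 5, 2 to 4, and so on. A minimal sketch with hypothetical item keys:

```python
SCALE_MIN, SCALE_MAX = 1, 5

# Hypothetical item keys; True marks a negatively worded statement
ITEMS = {
    "content_relevant": False,  # "The content was relevant to my role."
    "pace_confusing": True,     # "The pace made the material hard to follow."
}


def reverse_code(score: int) -> int:
    """Flip a rating so that higher always means more favourable."""
    return SCALE_MIN + SCALE_MAX - score


def score_response(answers: dict[str, int]) -> dict[str, int]:
    """Reverse-code negatively worded items, leave the rest unchanged."""
    return {item: reverse_code(v) if ITEMS[item] else v for item, v in answers.items()}


print(score_response({"content_relevant": 4, "pace_confusing": 2}))
# {'content_relevant': 4, 'pace_confusing': 4}
```

Without this step, a participant who disagrees with a negative statement (a good sign) would drag the average down instead of up.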

What Is the Right Number of Questions for a Training Survey

Between 8 and 15 questions for post-training surveys. Pre-training surveys should stay under 10.

Anything beyond 15 questions and you start losing respondents, especially on mobile devices. Research from Qualtrics shows completion rates drop roughly 5-10% for every additional minute past the 5-minute mark. Keep it tight, or risk survey fatigue tanking your response quality.

How to Measure Training Effectiveness with Survey Data

Collecting responses is the easy part. Turning that data into something a VP of HR actually acts on requires a framework.

Most organizations default to averaging satisfaction scores and calling it a day. That tells you people enjoyed the lunch, not whether the training changed performance.

What Is the Kirkpatrick Model for Training Evaluation

Four levels: Reaction (did they like it?), Learning (did they absorb it?), Behavior (did they apply it?), Results (did business metrics move?). Training surveys primarily capture Levels 1 and 2.

Jack Phillips later added a fifth level focused on training ROI measurement, calculating the monetary return against program costs. The ROI Institute publishes benchmarks across industries for comparison.
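The arithmetic behind Phillips-style ROI is simple: net program benefits (monetized benefits minus fully loaded program costs) divided by program costs, expressed as a percentage. The hard part, isolating the training's effect and converting it to money, happens before this step and is not shown. The figures below are hypothetical.

```python
def training_roi(monetary_benefits: float, program_costs: float) -> float:
    """ROI as a percentage: (benefits - costs) / costs * 100."""
    if program_costs <= 0:
        raise ValueError("program costs must be positive")
    net_benefits = monetary_benefits - program_costs
    return net_benefits / program_costs * 100


def benefit_cost_ratio(monetary_benefits: float, program_costs: float) -> float:
    """Benefits per unit of cost; 1.0 means the program broke even."""
    if program_costs <= 0:
        raise ValueError("program costs must be positive")
    return monetary_benefits / program_costs


# Hypothetical program: $80,000 fully loaded cost, $120,000 in monetized benefits
print(training_roi(120_000, 80_000))        # 50.0
print(benefit_cost_ratio(120_000, 80_000))  # 1.5
```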

How Do Training Surveys Connect to ROI

Pair survey data with performance metrics collected 30, 60, and 90 days post-training. If satisfaction scores are high but behavior change scores are flat, the content resonated emotionally but failed to transfer.
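That comparison can be automated as a rough first screen. The sketch assumes a hypothetical performance metric recorded at a baseline (day 0) and at the 30-, 60-, and 90-day checkpoints, plus the post-training satisfaction scores; the thresholds (`high_sat=4.0`, `min_gain=0.5`) are illustrative assumptions, not published benchmarks.

```python
from statistics import mean


def transfer_check(satisfaction: list[float],
                   metric_by_day: dict[int, list[float]],
                   high_sat: float = 4.0,
                   min_gain: float = 0.5) -> str:
    """Label a cohort by comparing reaction scores with later performance change.

    satisfaction: post-training reaction scores on a 1-5 scale
    metric_by_day: performance metric keyed by days since training; key 0 is the baseline
    """
    sat = mean(satisfaction)
    baseline = mean(metric_by_day[0])
    latest = max(metric_by_day)  # most recent checkpoint, e.g. 90
    gain = mean(metric_by_day[latest]) - baseline
    if gain >= min_gain:
        return "transfer observed"
    if sat >= high_sat:
        return "liked but did not transfer"  # resonated, but behavior stayed flat
    return "low reaction, no transfer"


cohort_metric = {0: [3.0, 3.2, 2.8], 30: [3.1, 3.0, 3.0], 60: [3.2, 3.0, 3.1], 90: [3.1, 3.2, 3.0]}
print(transfer_check([5, 4, 5, 4], cohort_metric))  # liked but did not transfer
```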

ATD’s Talent Development Reporting Principles (TDRp) framework provides standardized metrics for connecting learner satisfaction data to organizational outcomes. Analyzing survey data properly means going beyond averages and looking at distribution, outliers, and cross-segment comparisons.

What Are Common Mistakes in Training Survey Design

These show up constantly, even in organizations with mature L&D teams.

- Double-barreled questions that ask two things at once (“Was the content relevant and the pace appropriate?”)

- Distributing the survey days after the training, when recall has already faded

- Using only closed-ended questions with no space for qualitative training data

- Failing to guarantee anonymity, which suppresses honest negative feedback

- No pre-training baseline, making it impossible to prove knowledge gain

- Ignoring form validation on required fields, resulting in incomplete submissions

- Asking about overall satisfaction without breaking it down by module, trainer, or format
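For the form-validation mistake in that list: most form builders let you mark fields as required in their settings, but if you process submissions yourself, a server-side check catches both missing answers and out-of-range ratings. A minimal sketch; the field names and ranges are hypothetical.

```python
REQUIRED_FIELDS = {"overall_rating", "relevance_rating"}
RATING_RANGES = {"overall_rating": (1, 5), "relevance_rating": (1, 5), "recommend": (0, 10)}


def _is_blank(value) -> bool:
    return value is None or (isinstance(value, str) and not value.strip())


def validate_submission(form: dict) -> list[str]:
    """Return a list of errors; an empty list means the submission can be stored."""
    errors = [f"{field} is required"
              for field in sorted(REQUIRED_FIELDS) if _is_blank(form.get(field))]
    for field, (low, high) in RATING_RANGES.items():
        value = form.get(field)
        if _is_blank(value):
            continue  # blank required fields were already reported above
        # bool is a subclass of int, so reject it explicitly
        if isinstance(value, bool) or not isinstance(value, int) or not low <= value <= high:
            errors.append(f"{field} must be a whole number from {low} to {high}")
    return errors


print(validate_submission({"overall_rating": 4, "relevance_rating": 5}))  # []
print(validate_submission({"overall_rating": 7, "comments": "Great session"}))
# ['relevance_rating is required', 'overall_rating must be a whole number from 1 to 5']
```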

The biggest one, honestly? Not acting on the results. People stop giving thoughtful feedback when they see nothing changes.

Training Survey Question Examples by Training Type

Context changes what you should ask. A compliance training evaluation for OSHA regulations needs different questions than a leadership development workshop.

Below are focused question sets organized by training category. These pull from the most common corporate training contexts where post-training assessment actually drives decisions.

What Are Good Survey Questions for Onboarding Training

“Did this onboarding program prepare you for your first 30 days?” and “Which topic should receive more time in future sessions?” cover the two things that matter most: readiness and content gaps. When structuring onboarding survey questions in more depth, matching questions to specific onboarding phases produces better data.

What Are Good Survey Questions for Compliance Training

“Can you identify the correct reporting procedure for a workplace safety incident?” tests actual knowledge transfer. “How relevant was this compliance training to your daily responsibilities?” catches content-role misalignment.

OSHA-regulated industries often require documented proof that training was both delivered and understood.

What Are Good Survey Questions for Leadership Development Training

“Rate your confidence in giving constructive feedback to direct reports” (pre and post comparison). “Which leadership framework covered in this program will you apply first?”

Leadership programs run by organizations using platforms like LinkedIn Learning or Coursera for Business benefit from pairing platform analytics with separate survey data.

What Are Good Survey Questions for Technical Skills Training

“Can you complete [specific task] independently after this training?” is the only question that really matters. Follow it with “What additional resources or practice time would help you apply these skills?”

Technical training surveys should lean harder on competency-based assessment questions than satisfaction ratings.

What Are Good Survey Questions for Soft Skills Training

“Describe a situation where you could apply the communication techniques covered today.” Open-ended works better here than scales because soft skill application is highly contextual.

“How likely are you to change your approach to [specific interpersonal scenario] based on this training?” captures behavioral intent, which is the closest proxy for Level 3 (behavior change) you can get from a survey alone.

How to Distribute and Collect Training Surveys

Timing and delivery method affect response rates more than most people realize. A perfectly written survey sent at the wrong time through the wrong channel gets ignored.

When Is the Best Time to Send a Training Survey

Immediately after the session ends for reaction-level feedback. Within 24 hours maximum, or memory distortion kicks in.

For behavior change and application questions, send a follow-up survey at the 30- or 60-day mark. Two touchpoints give you a reaction snapshot plus a delayed impact measurement. Using survey form templates speeds up deployment so timing never becomes the bottleneck.
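Turning that two-touchpoint cadence into concrete send dates is a small calculation. The sketch assumes the reaction survey goes out when the session ends and treats anything sent more than 24 hours later as overdue; the follow-up offsets default to 30 and 60 days, and the function names are hypothetical.

```python
from datetime import datetime, timedelta

REACTION_WINDOW = timedelta(hours=24)


def survey_schedule(session_end: datetime,
                    follow_up_days: tuple[int, ...] = (30, 60)) -> list[tuple[str, datetime]]:
    """Reaction survey at session end, then delayed follow-ups for behavior change."""
    schedule = [("reaction", session_end)]
    schedule += [(f"follow-up day {d}", session_end + timedelta(days=d)) for d in follow_up_days]
    return schedule


def reaction_overdue(session_end: datetime, sent_at: datetime) -> bool:
    """True if the reaction survey went out after the 24-hour window closed."""
    return sent_at - session_end > REACTION_WINDOW


for label, when in survey_schedule(datetime(2025, 3, 14, 16, 30)):
    print(f"{label:>16}: {when:%Y-%m-%d %H:%M}")
```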

What Platforms Work Best for Training Surveys

SurveyMonkey and Qualtrics dominate enterprise training evaluation. Google Forms and Microsoft Forms work fine for smaller teams with simple needs. Typeform excels at mobile form experiences where completion rate matters more than advanced branching logic.

If your training runs through a Learning Management System (LMS) like Moodle, Cornerstone OnDemand, or SAP SuccessFactors, most have built-in survey modules. The trade-off: built-in tools are convenient but usually limited in question type variety and reporting depth.

For WordPress-based training sites, WordPress survey plugins integrate directly into your existing setup without forcing participants to leave the platform. Using conditional logic lets you show different follow-up questions based on initial responses, keeping surveys short and relevant for each participant.
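Survey tools and plugins usually configure conditional logic through a visual editor, but the underlying idea is a set of rules that map an answer to a follow-up question. The sketch below is tool-agnostic; the question IDs are hypothetical, and the follow-ups mirror questions from the list earlier in this guide.

```python
from typing import Any, Callable

# Each rule: (question ID, condition on its answer, follow-up question to show)
Rule = tuple[str, Callable[[Any], bool], str]

RULES: list[Rule] = [
    ("overall_rating", lambda a: a <= 2,
     "What was the main reason for your rating?"),
    ("technical_issues", lambda a: a == "Yes",
     "Which technical issues interfered with your learning?"),
    ("difficulty", lambda a: a in {"Too basic", "Too advanced"},
     "Which topics were pitched at the wrong level for you?"),
]


def follow_up_questions(answers: dict[str, Any]) -> list[str]:
    """Return the follow-ups triggered by the answers given so far."""
    return [question for qid, condition, question in RULES
            if qid in answers and condition(answers[qid])]


print(follow_up_questions({"overall_rating": 4, "technical_issues": "Yes", "difficulty": "Just right"}))
# ['Which technical issues interfered with your learning?']
```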

How to Analyze Training Survey Results

Raw numbers don’t tell stories. A 4.2 out of 5 satisfaction score sounds good until you realize the previous quarter scored 4.6 and the industry benchmark sits at 4.5.

Analysis requires context, comparison, and segmentation.

Split results by department, training format (in-person vs. virtual), trainer, and experience level. Aggregate scores hide the patterns that actually matter.

For quantitative data, calculate mean scores per question, track trends across training cohorts, and flag any question where the standard deviation exceeds 1.5 on a 5-point scale. High variance means the group had wildly different experiences, which is a red flag worth investigating.
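A minimal sketch of that quantitative pass in Python, assuming responses are stored as (segment, question, rating) rows on a 1–5 scale. It uses the population standard deviation (`statistics.pstdev`) so single-response groups don't raise an error; with small cohorts the sample version (`statistics.stdev`) would read slightly higher.

```python
from collections import defaultdict
from statistics import mean, pstdev

SD_FLAG = 1.5  # threshold from the text, for a 5-point scale


def summarize(rows: list[tuple[str, str, int]]) -> dict[tuple[str, str], tuple[float, float, bool]]:
    """Mean, standard deviation, and a high-variance flag per (segment, question)."""
    groups: dict[tuple[str, str], list[int]] = defaultdict(list)
    for segment, question, rating in rows:
        groups[(segment, question)].append(rating)
    summary = {}
    for key in sorted(groups):
        ratings = groups[key]
        sd = pstdev(ratings)
        summary[key] = (round(mean(ratings), 2), round(sd, 2), sd > SD_FLAG)
    return summary


rows = [
    ("in-person", "pace", 4), ("in-person", "pace", 4), ("in-person", "pace", 5), ("in-person", "pace", 4),
    ("virtual", "pace", 1), ("virtual", "pace", 5), ("virtual", "pace", 1),
    ("virtual", "pace", 5), ("virtual", "pace", 2), ("virtual", "pace", 5),
]
for (segment, question), (avg, sd, flagged) in summarize(rows).items():
    print(f"{segment:<10} {question:<6} mean={avg} sd={sd}{'  <- investigate' if flagged else ''}")
```

The virtual cohort's mean of about 3.2 looks merely mediocre; the standard deviation of roughly 1.86 shows that half the room rated pacing 5 while the other half rated it 1 or 2.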

Qualitative responses from open-ended questions need thematic coding. Group comments into categories like content quality, pacing, trainer skill, and practical applicability. Three or more mentions of the same issue across a cohort constitute a pattern, not an outlier.
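Keyword matching is a crude stand-in for human coding, but it is a quick way to surface candidate themes before someone reads the comments properly. The sketch assumes a hand-built codebook (the keywords below are illustrative) and counts each comment at most once per theme, then applies the three-mention threshold.

```python
import re
from collections import Counter

# Illustrative starter codebook: theme -> keywords. Refine it after reading a sample of real comments.
CODEBOOK = {
    "content quality": ["outdated", "examples", "content", "material"],
    "pacing": ["rushed", "too fast", "too slow", "pace", "dragged"],
    "trainer skill": ["trainer", "instructor", "facilitator", "presenter"],
    "practical applicability": ["apply", "practical", "real-world", "hands-on"],
}


def code_comment(comment: str) -> set[str]:
    """Themes whose keywords appear in the comment as whole words or phrases."""
    text = comment.lower()
    return {theme for theme, keywords in CODEBOOK.items()
            if any(re.search(rf"\b{re.escape(k)}\b", text) for k in keywords)}


def recurring_themes(comments: list[str], min_mentions: int = 3) -> dict[str, int]:
    """Themes raised in at least `min_mentions` separate comments."""
    counts = Counter(theme for c in comments for theme in code_comment(c))
    return {theme: n for theme, n in counts.most_common() if n >= min_mentions}


comments = [
    "The second day felt rushed.",
    "Great instructor, but the pace was too fast.",
    "Hard to apply the examples to my team.",
    "Pace dragged after lunch.",
    "Loved the hands-on labs.",
]
print(recurring_themes(comments))  # {'pacing': 3}
```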

Build a one-page summary for stakeholders. Include top-line satisfaction scores, biggest strengths, top 3 improvement areas, and a comparison to previous sessions.

Gallup research shows organizations that consistently act on employee satisfaction survey feedback see 14% higher engagement scores. The same principle applies to training surveys. Close the loop, show participants their feedback drove changes, and response quality improves over time.

For organizations collecting training feedback alongside other types of forms across their website, centralizing survey data into a single reporting dashboard prevents insights from getting siloed across disconnected tools.

FAQ on Training Survey Questions

What are training survey questions?

Training survey questions are structured prompts used to collect participant feedback about a learning experience. They measure content relevance, instructor effectiveness, knowledge retention, and overall learner satisfaction after workshops, eLearning modules, or corporate training programs.

What is the best time to send a training survey?

Send it immediately after the session ends for reaction-level feedback, and within 24 hours at most. To measure behavior change, send a follow-up at the 30- or 60-day mark to capture skill application data.
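If you schedule surveys programmatically, this timing reduces to simple date arithmetic. A minimal sketch with Python's `datetime`; the function name and dictionary keys are hypothetical, and actually sending the survey is left to whatever tool you use.

```python
from datetime import datetime, timedelta


def survey_schedule(session_end: datetime) -> dict[str, datetime]:
    """Key send dates for one session's surveys."""
    return {
        "reaction_send_now": session_end,  # ideal: as the session ends
        "reaction_send_by": session_end + timedelta(hours=24),  # latest acceptable
        "follow_up_30d": session_end + timedelta(days=30),  # behavior change check
        "follow_up_60d": session_end + timedelta(days=60),
    }


schedule = survey_schedule(datetime(2024, 3, 1, 16, 0))
```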

How many questions should a training survey have?

Between 8 and 15 questions for post-training evaluations. Pre-training surveys should stay under 10. Anything beyond 15 risks survey fatigue, which drops completion rates and degrades response quality significantly.

What is the Kirkpatrick Model in training evaluation?

The Kirkpatrick Model is a four-level framework developed by Donald Kirkpatrick: Reaction, Learning, Behavior, and Results. Training surveys primarily address Levels 1 and 2, measuring participant satisfaction and perceived knowledge gain.

What types of questions work best in training surveys?

A mix of Likert scale questions for measurable ratings, open-ended questions for qualitative insights, and multiple-choice questions for categorical data. Combining different types of survey questions prevents blind spots in your evaluation.

Should training surveys be anonymous?

Yes, when collecting honest feedback about trainer effectiveness or content quality. Anonymity reduces social desirability bias. Identified surveys work better for tracking individual learning progress over time across multiple training sessions.

What is the difference between pre-training and post-training surveys?

Pre-training surveys establish a knowledge baseline and capture learner expectations before the session. Post-training surveys measure satisfaction, knowledge retention, and content relevance after delivery. Comparing both reveals actual learning gains.
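A minimal sketch of that comparison, assuming both surveys include the same knowledge-check questions scored as a percentage, and that each response carries a participant identifier (or an anonymous code the participant reuses) so pre and post answers can be paired. The IDs and scores are made up.

```python
from statistics import mean


def learning_gains(pre_scores, post_scores):
    """Per-participant gain (post minus pre), for participants with both scores."""
    return {
        pid: post_scores[pid] - pre_scores[pid]
        for pid in pre_scores
        if pid in post_scores
    }


# Illustrative knowledge-check scores (percent correct), keyed by participant ID.
pre = {"p1": 55, "p2": 70, "p3": 40}
post = {"p1": 80, "p2": 75, "p4": 90}

gains = learning_gains(pre, post)
average_gain = mean(list(gains.values()))
```

Participants who completed only one survey (`p3` and `p4` here) are dropped, because an unpaired score says nothing about individual gain. The two paired participants improved by 25 and 5 points, an average gain of 15.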

How do you measure training ROI with surveys?

Pair survey satisfaction scores with performance metrics collected 30-90 days post-training. Jack Phillips’ ROI methodology calculates monetary return against program costs. ATD’s TDRp framework provides standardized metrics for connecting feedback to business outcomes.
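The Phillips calculation itself is simple arithmetic: the benefit-cost ratio is program benefits divided by program costs, and ROI is net benefits (benefits minus costs) divided by costs, times 100. The sketch assumes you've already converted performance improvements into a monetary figure, which is the hard part; the dollar amounts below are illustrative.

```python
def phillips_roi(monetary_benefits: float, program_costs: float) -> tuple[float, float]:
    """Return (benefit-cost ratio, ROI percent).

    BCR   = benefits / costs
    ROI % = (benefits - costs) / costs * 100
    """
    if program_costs <= 0:
        raise ValueError("program_costs must be positive")
    bcr = monetary_benefits / program_costs
    roi_percent = (monetary_benefits - program_costs) / program_costs * 100
    return bcr, roi_percent
```

`phillips_roi(150_000, 100_000)` gives a benefit-cost ratio of 1.5 and an ROI of 50%: every dollar spent came back along with 50 cents of net benefit.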

What are common mistakes in training survey design?

Double-barreled questions that ask two things at once, leading language that biases responses, no pre-training baseline for comparison, distribution delayed until memories have faded, and failing to act on results. Each one undermines data quality.

Can you use the same survey for different training types?

Not effectively. Compliance training evaluation needs knowledge-check questions tied to OSHA or other regulatory standards. Leadership development surveys focus on behavioral intent. Technical skills training requires competency-based assessment. Context shapes which questions produce useful data.

Conclusion

Well-designed training survey questions turn a routine feedback exercise into a decision-making tool that shapes how organizations build, deliver, and improve their learning programs.

The difference between useful survey data and wasted effort lies in the details. Question clarity, response scale selection, distribution timing, and proper segmentation during analysis each affect data quality, and weaknesses in any one of them compound across the others.

Whether you’re evaluating a compliance workshop against OSHA standards or measuring behavioral intent after a leadership development program, the structure of your employee training feedback determines what you can actually prove to stakeholders.

Start with a pre-training baseline. Keep post-training surveys between 8 and 15 questions. Mix Likert scale ratings with open-ended responses. And close the loop by showing participants their input drove real changes.

Organizations that treat training evaluation as a continuous improvement cycle, not a checkbox, consistently build stronger programs and higher engagement over time.