

A single product defect can cost you a customer for life. But most quality issues stay invisible until returns pile up or reviews tank.

Survey questions about product quality catch problems early, straight from the people who actually use what you sell.

The right questions reveal durability concerns, performance gaps, and satisfaction levels that internal testing misses.

This guide covers the exact question types that work, when to send them, and how to turn responses into measurable quality improvements.

You will find ready-to-use examples for rating scales, open-ended feedback, and comparative assessments across different product categories.

What Are Survey Questions About Product Quality

Survey questions about product quality are structured inquiries that collect customer feedback on performance, durability, reliability, and overall satisfaction with a specific product.

These questions measure how well a product meets consumer expectations.

They use rating scales, open-ended responses, and comparative assessments to gather actionable data.

Product quality measurement through surveys gives businesses direct insight into what customers actually experience after purchase.

The data feeds into quality control processes, product development cycles, and customer satisfaction tracking systems.

Most companies use tools like SurveyMonkey, Qualtrics, or Google Forms to deploy these surveys at scale.

Survey Questions About Product Quality

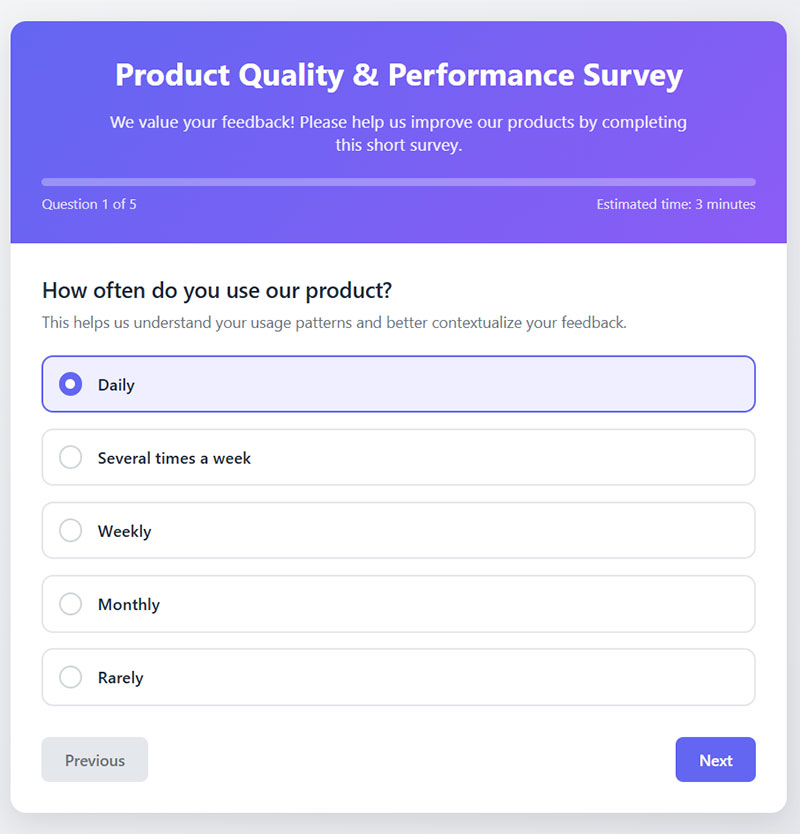

Product Usage Experience

Usage Frequency

Question: How often do you use our product?

Type: Multiple Choice (Daily, Several times a week, Weekly, Monthly, Rarely)

Purpose: Establishes usage patterns to contextualize feedback and segment users by engagement level.

When to Ask: At the beginning of the survey to filter responses or segment analysis.

Feature Utilization

Question: Which features do you use most frequently?

Type: Multiple Selection (List of key product features)

Purpose: Identifies which features provide the most value and which may need improvement or better visibility.

When to Ask: Early in the survey before asking detailed questions about specific features.

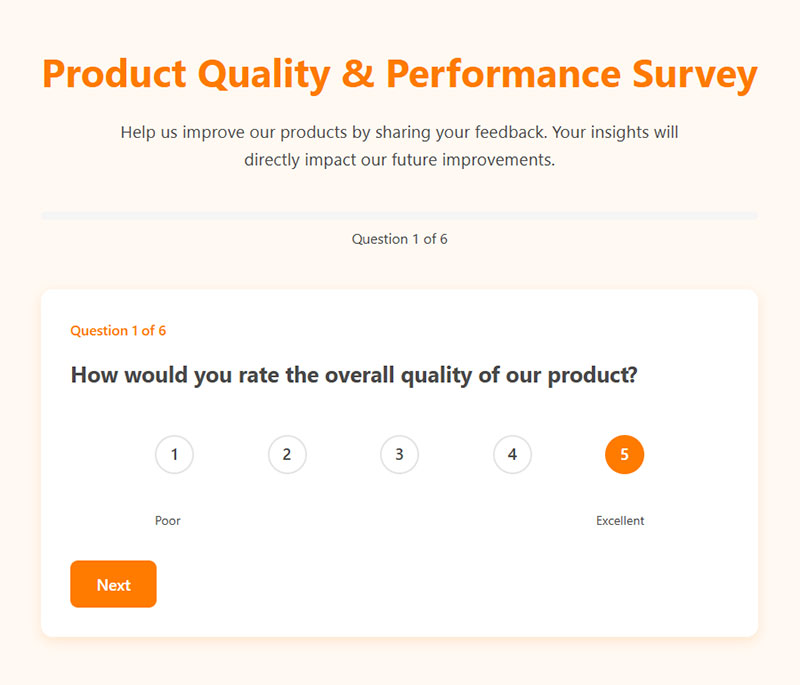

Overall Satisfaction Rating

Question: Rate your overall satisfaction with the product performance.

Type: Rating Scale (1-10 from “Completely dissatisfied” to “Completely satisfied”)

Purpose: Provides a benchmark metric for overall product performance and user sentiment.

When to Ask: Either at the beginning to capture initial impressions or at the end to reflect a comprehensive assessment.

Product Appeal

Question: What specifically attracts you to this product?

Type: Open-ended

Purpose: Uncovers unique selling points from the user perspective and reveals unexpected value propositions.

When to Ask: After basic usage questions but before detailed feature evaluations.

Competitive Comparison

Question: How does our product compare to others you’ve tried?

Type: Multiple Choice with comment field (Much worse, Somewhat worse, About the same, Somewhat better, Much better)

Purpose: Gauges competitive positioning and identifies areas where competitors may be outperforming.

When to Ask: Midway through the survey after establishing usage patterns.

Quality Assessment

Build Quality Rating

Question: On a scale of 1-10, how would you rate the build quality?

Type: Numeric Scale (1-10)

Purpose: Measures perception of physical construction and materials quality.

When to Ask: During the product quality section of your survey.

Defect Identification

Question: Have you noticed any defects or issues with the product?

Type: Yes/No with comment field for “Yes” responses

Purpose: Identifies specific quality control issues requiring attention.

When to Ask: After general quality questions but before asking about warranty or support experiences.

Durability Assessment

Question: How durable has the product been during your ownership?

Type: Rating Scale (1-5 from “Not at all durable” to “Extremely durable”)

Purpose: Evaluates product longevity and resistance to wear or damage.

When to Ask: After asking about length of ownership and usage frequency.

Expectations Alignment

Question: Does the product meet the quality standards you expected?

Type: Multiple Choice (Falls far short, Falls somewhat short, Meets expectations, Exceeds somewhat, Far exceeds expectations)

Purpose: Measures gap between expected and actual quality, helping identify marketing or messaging issues.

When to Ask: Midway through the survey after establishing usage context.

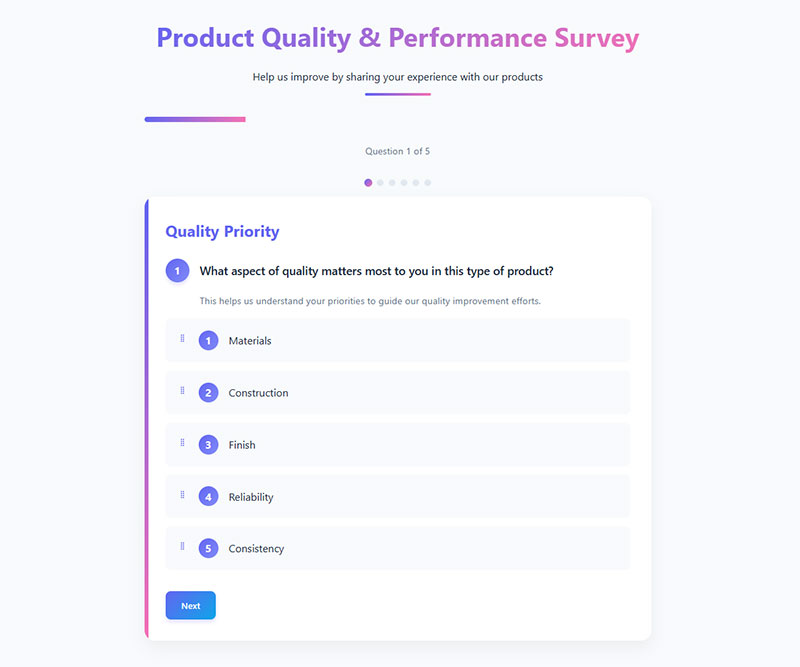

Quality Priority

Question: What aspect of quality matters most to you in this type of product?

Type: Ranking or Multiple Choice (Materials, Construction, Finish, Reliability, Consistency)

Purpose: Reveals user priorities to guide quality improvement efforts.

When to Ask: Near the beginning of the quality assessment section.

Performance Evaluation

Marketing Claims Verification

Question: To what extent do you agree that the product performs as advertised?

Type: Likert Scale (Strongly disagree to Strongly agree)

Purpose: Validates marketing claims and identifies potential areas of overpromising.

When to Ask: After establishing familiarity with the product.

Strength Identification

Question: What tasks does the product handle well?

Type: Open-ended or Multiple Selection

Purpose: Identifies core competencies and primary use cases from user perspective.

When to Ask: During the performance section after general performance questions.

Performance Gaps

Question: Where does the product fall short in performance?

Type: Open-ended

Purpose: Reveals performance weaknesses and opportunities for improvement.

When to Ask: After asking about strengths to encourage balanced feedback.

Performance Evolution

Question: Has the product’s performance changed over time?

Type: Multiple Choice with comment field (Significantly worse, Somewhat worse, No change, Somewhat better, Significantly better)

Purpose: Tracks performance degradation or improvement through product lifecycle.

When to Ask: Only after establishing moderate to long-term usage.

Desired Improvements

Question: What performance improvements would make this product better for you?

Type: Open-ended

Purpose: Gathers specific improvement suggestions directly related to user needs.

When to Ask: Near the end of the performance section after evaluating current capabilities.

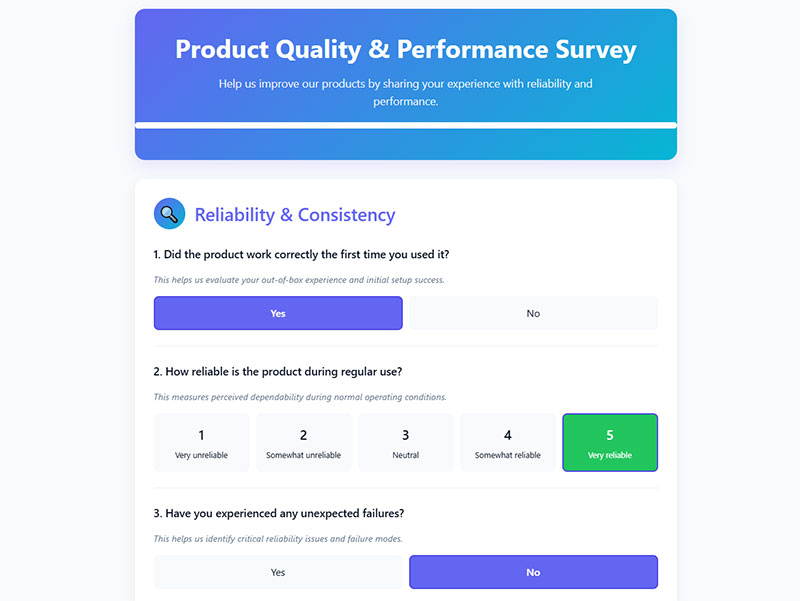

Reliability & Consistency

First-Use Success

Question: Did the product work correctly the first time you used it?

Type: Yes/No with comment field for “No” responses

Purpose: Evaluates out-of-box experience and initial setup success.

When to Ask: When surveying newer customers or asking about onboarding experiences.

Operational Reliability

Question: How reliable is the product during regular use?

Type: Rating Scale (1-5 from “Very unreliable” to “Very reliable”)

Purpose: Measures perceived dependability during normal operating conditions.

When to Ask: After establishing usage patterns.

Failure Experience

Question: Have you experienced any unexpected failures?

Type: Yes/No with details field for “Yes” responses

Purpose: Identifies critical reliability issues and failure modes.

When to Ask: After general reliability questions but before asking about support experiences.

Consistency Assessment

Question: How often does the product perform consistently when you use it?

Type: Frequency Scale (Never, Rarely, Sometimes, Often, Always)

Purpose: Evaluates performance predictability and consistency across usage sessions.

When to Ask: After questions about usage frequency and patterns.

Trust Enhancement

Question: What would make you trust this product more?

Type: Open-ended

Purpose: Reveals trust barriers and opportunities to build confidence in the product.

When to Ask: Near the end of the reliability section after identifying any issues.

Value Assessment

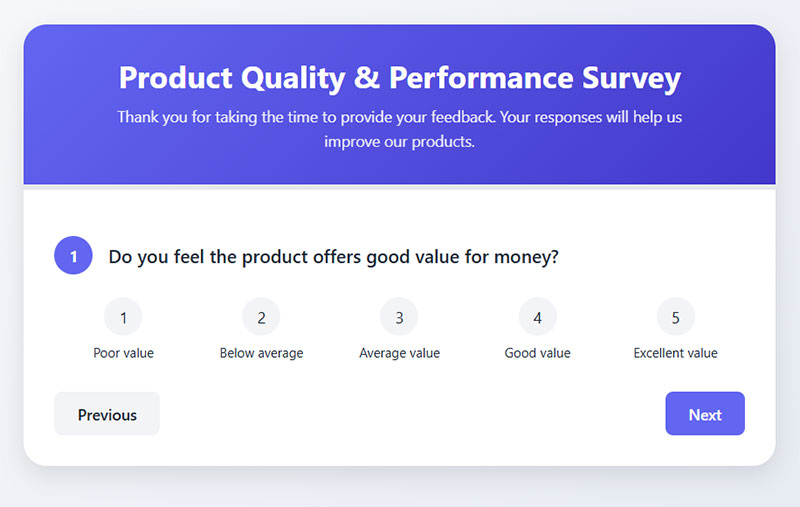

Value Perception

Question: How would you rate the product’s value for money?

Type: Rating Scale (1-5 from “Poor value” to “Excellent value”)

Purpose: Measures price-to-value relationship from user perspective.

When to Ask: After performance and quality questions to ensure context for value judgment.

Repurchase Intent

Question: Would you purchase this product again at its current price point?

Type: Multiple Choice (Definitely not, Probably not, Unsure, Probably yes, Definitely yes)

Purpose: Indicates overall satisfaction and perceived value relative to cost.

When to Ask: Near the end of the survey after comprehensive product evaluation.

Competitive Pricing

Question: How does our pricing compare to the quality delivered?

Type: Multiple Choice (Overpriced, Slightly overpriced, Fairly priced, Good deal, Excellent value)

Purpose: Evaluates price positioning relative to quality perception.

When to Ask: After quality assessment questions.

Value-Added Features

Question: What additional features would justify a higher price?

Type: Open-ended

Purpose: Identifies potential premium features for upselling or new product development.

When to Ask: After asking about current features and pricing perception.

Recommendation Likelihood

Question: Based on its value, how likely are you to recommend this product to friends?

Type: Rating Scale (0-10 from “Not at all likely” to “Extremely likely”, Net Promoter Score format)

Purpose: Measures advocacy potential based specifically on value proposition.

When to Ask: Near the end of the survey after comprehensive evaluation.

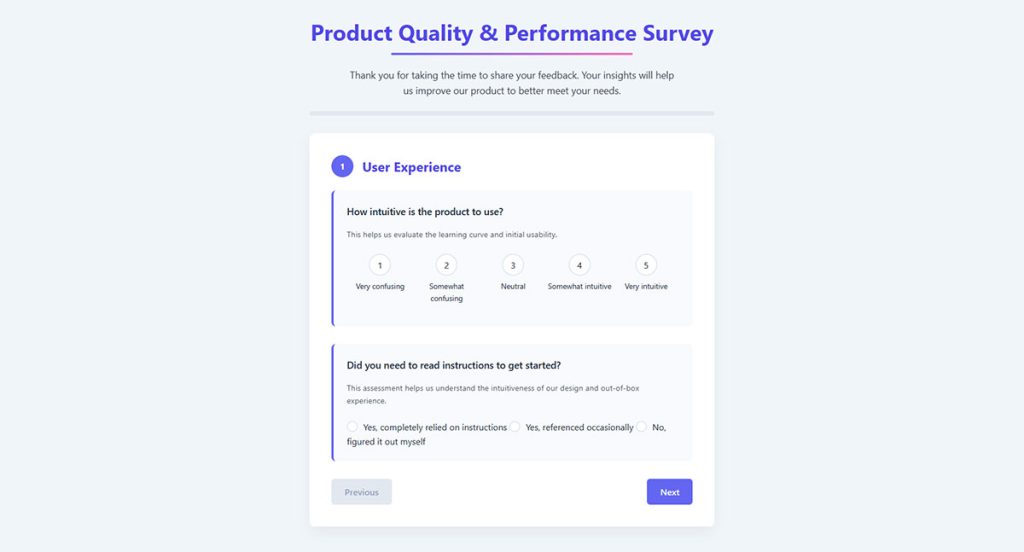

User Experience

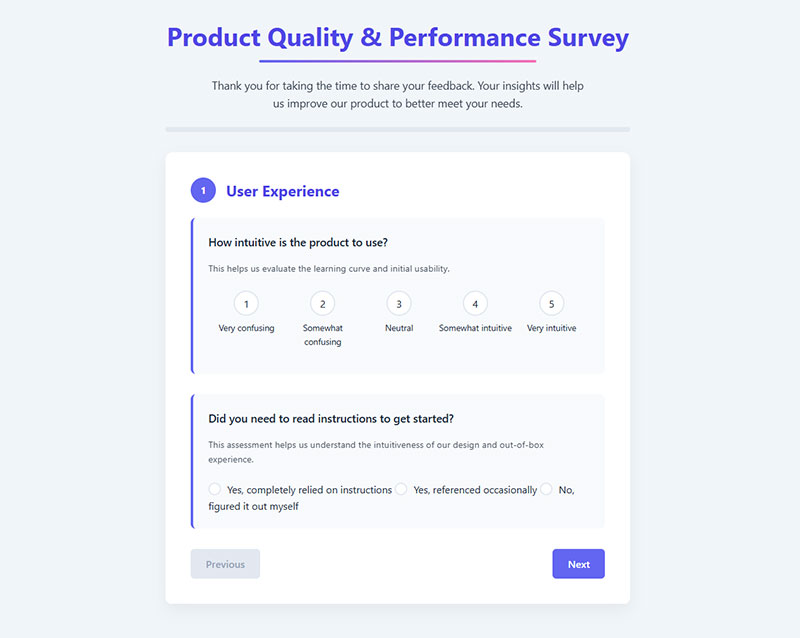

Intuitive Design

Question: How intuitive is the product to use?

Type: Rating Scale (1-5 from “Very confusing” to “Very intuitive”)

Purpose: Evaluates learning curve and initial usability.

When to Ask: Early in the user experience section.

Instructions Necessity

Question: Did you need to read instructions to get started?

Type: Multiple Choice (Yes, completely relied on instructions; Yes, referenced occasionally; No, figured it out myself)

Purpose: Assesses intuitiveness of design and out-of-box experience.

When to Ask: When evaluating onboarding or initial setup experience.

Pain Points

Question: What frustrations have you experienced while using the product?

Type: Open-ended

Purpose: Identifies friction points and usability issues requiring attention.

When to Ask: After general usability questions but before asking about specific features.

Joy Points

Question: Which aspects of using the product bring you the most joy?

Type: Open-ended

Purpose: Reveals emotional connections and highlights features that create positive experiences.

When to Ask: After asking about frustrations to balance the feedback.

Usability Improvement

Question: How could we make the product easier to use?

Type: Open-ended

Purpose: Gathers specific suggestions for usability enhancements.

When to Ask: Near the end of the user experience section after identifying any issues.

Specific Performance Metrics

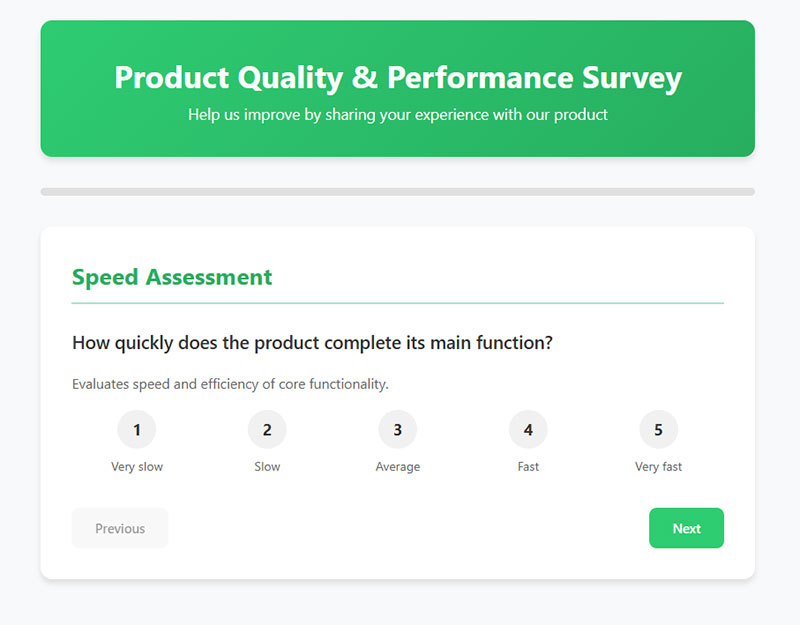

Speed Assessment

Question: How quickly does the product complete its main function?

Type: Rating Scale (1-5 from “Very slow” to “Very fast”)

Purpose: Evaluates speed and efficiency of core functionality.

When to Ask: During the performance metrics section.

Performance Under Load

Question: Does the product maintain consistent performance under heavy use?

Type: Multiple Choice (Performance significantly drops, Slight performance decrease, Maintains consistent performance)

Purpose: Identifies performance degradation under stress conditions.

When to Ask: Only relevant for users who have indicated heavy usage patterns.

Edge Case Handling

Question: How has the product handled unexpected situations?

Type: Open-ended

Purpose: Evaluates resilience and adaptability to nonstandard use cases.

When to Ask: After establishing familiarity with normal usage patterns.

Efficiency Rating

Question: Rate the product’s battery life (or its energy efficiency, if the product is not battery-powered).

Type: Rating Scale (1-5 from “Poor” to “Excellent”)

Purpose: Measures resource consumption and operational efficiency.

When to Ask: Relevant for electronic or powered products.

Performance Bottlenecks

Question: What performance bottlenecks have you identified?

Type: Open-ended

Purpose: Discovers specific performance limitations from power users.

When to Ask: Best directed at experienced users who have used the product extensively.

Improvement Suggestions

Primary Improvement Area

Question: What one thing would you improve about this product?

Type: Open-ended

Purpose: Forces prioritization of the most critical improvement need.

When to Ask: Near the end of the survey after comprehensive evaluation.

Feature Request

Question: Which additional feature would most increase your satisfaction?

Type: Open-ended or Multiple Choice based on product roadmap options

Purpose: Identifies high-impact feature additions for future development.

When to Ask: After evaluating current features.

Quality Issue Priority

Question: What quality issues need immediate attention?

Type: Open-ended

Purpose: Highlights critical quality concerns requiring urgent fixes.

When to Ask: After quality assessment questions.

Longevity Enhancement

Question: How could we enhance the product’s longevity?

Type: Open-ended

Purpose: Gathers ideas for improving product lifespan and durability.

When to Ask: After durability and reliability questions.

Upgrade Triggers

Question: What performance upgrades would make you upgrade to a newer version?

Type: Open-ended or Multiple Selection from potential upgrade options

Purpose: Identifies compelling features for product roadmap and future versions.

When to Ask: Near the end of the survey to capture forward-looking insights.
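Several entries in the catalog above depend on earlier answers: a comment field shown only for “Yes” responses, or a question reserved for heavy users. Here is a minimal sketch of how that skip logic could be modeled. The `Question` class, the keys, the follow-up wording, and the rule that “Daily” or “Several times a week” counts as heavy use are all illustrative assumptions, not part of any survey platform's API.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Question:
    key: str    # hypothetical identifier used to look up answers
    text: str
    kind: str   # e.g. "yes_no", "multiple_choice", "open_ended"
    # Predicate over earlier answers; None means the question is always shown.
    show_if: Optional[Callable[[dict], bool]] = None


# Assumption: these usage levels count as "heavy use".
HEAVY_USE = {"Daily", "Several times a week"}

SURVEY = [
    Question("usage_frequency", "How often do you use our product?", "multiple_choice"),
    Question("defects", "Have you noticed any defects or issues with the product?", "yes_no"),
    # Follow-up wording is illustrative; shown only after a "Yes" answer.
    Question("defect_details", "Please describe the defect or issue.", "open_ended",
             show_if=lambda a: a.get("defects") == "Yes"),
    Question("heavy_use",
             "Does the product maintain consistent performance under heavy use?",
             "multiple_choice",
             show_if=lambda a: a.get("usage_frequency") in HEAVY_USE),
]


def visible_questions(answers: dict) -> list[str]:
    """Return the keys of the questions to show, given the answers so far."""
    return [q.key for q in SURVEY if q.show_if is None or q.show_if(answers)]
```

A respondent who answers “Monthly” and “No” sees only the two base questions, which keeps the survey short for people with nothing to report.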

Why Should Businesses Measure Product Quality Through Surveys

Surveys reveal quality issues before they become widespread complaints or returns.

A 2023 study by the American Customer Satisfaction Index found that companies tracking product quality metrics saw 23% higher customer retention rates.

Direct feedback beats assumptions every time.

Quality perception studies show that customers who complete post-purchase surveys feel more connected to brands, even when reporting problems.

The feedback loop matters as much as the data itself.

Product improvement becomes targeted rather than guesswork. You fix what actually bothers people.

Quality assurance metrics from surveys also support ISO 9001 compliance documentation and Total Quality Management programs.

What Types of Product Quality Survey Questions Exist

Four main types of survey questions work for measuring product quality: rating scale, open-ended, comparative, and binary.

Each serves a different purpose in your quality assessment strategy.

What Are Rating Scale Questions for Product Quality

Rating scale questions use the Likert Scale (1-5 or 1-7) or numeric scales (1-10) to quantify satisfaction levels.

They generate measurable data for tracking quality trends over time and calculating scores like CSAT or NPS.

What Are Open-Ended Product Quality Questions

Open-ended questions capture specific details that rating scales miss.

Customers describe exact defects, unexpected benefits, or usage scenarios you never considered.

What Are Comparative Product Quality Questions

These questions ask customers to compare your product against competitors or previous versions.

Useful for benchmarking and understanding market position, especially after product updates.

What Are Binary Product Quality Questions

Yes/no questions work for specific quality checkpoints: “Did the product arrive undamaged?” or “Does the product match the description?”

Fast to answer; easy to analyze.

How to Write Effective Product Quality Survey Questions

Good survey design determines response quality. Poorly written questions produce useless data.

Following best practices for creating feedback forms prevents common mistakes that skew results.

What Makes a Product Quality Question Clear

One concept per question. No double-barreled questions like “How satisfied are you with product quality and shipping speed?”

Use specific language: “battery life” instead of “power performance.”

How Long Should Product Quality Questions Be

Keep questions under 20 words. Shorter questions get more accurate responses.

The entire survey should take under 5 minutes to complete, or you risk survey fatigue and abandoned responses.
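The 20-word limit and the one-concept rule can be checked automatically before a survey goes out. The sketch below is a rough heuristic, not a validated method: `lint_question` is a hypothetical helper, and treating any standalone “and” or “or” as a sign of a double-barreled question will produce false positives that need human review.

```python
import re

MAX_WORDS = 20  # limit taken from the guideline above


def lint_question(text: str) -> list[str]:
    """Return a list of warnings for a draft survey question."""
    warnings = []
    word_count = len(text.split())
    if word_count >= MAX_WORDS:
        warnings.append(f"too long: {word_count} words")
    # Crude heuristic (assumption): a standalone "and"/"or" may join two concepts.
    if re.search(r"\b(and|or)\b", text, flags=re.IGNORECASE):
        warnings.append("possible double-barreled question")
    return warnings


print(lint_question("How satisfied are you with product quality and shipping speed?"))
print(lint_question("How durable has the product been during your ownership?"))
```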

What Response Formats Work Best for Quality Surveys

Match the format to what you need to learn:

- Rating scales – tracking satisfaction trends over time

- Multiple choice – identifying specific problem areas

- Open text – discovering unknown issues

- Matrix questions – evaluating multiple product attributes efficiently

Mixing formats within a single survey keeps respondents engaged and captures both quantitative and qualitative data.

What Product Quality Dimensions Should Surveys Measure

The Kano Model classifies product attributes by how strongly they affect satisfaction. SERVQUAL was built for service quality, but dimensions such as reliability carry over to products.

Focus your questions on durability, performance, and design satisfaction, three areas that weigh heavily in purchase decisions and returns.

How to Measure Product Durability in Surveys

Ask about wear patterns, breakage incidents, and longevity expectations versus reality.

Time-based questions work well: “After 3 months of use, how would you rate product condition?”
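One way to use time-based questions is to group condition ratings by how long the respondent has owned the product and compare the averages. This sketch assumes each response is a hypothetical `(months_owned, rating)` pair on a 1-5 scale; the data is invented for illustration.

```python
from collections import defaultdict
from statistics import mean


def mean_rating_by_interval(responses):
    """Average the 1-5 condition rating for each ownership interval.

    responses: iterable of (months_owned, rating) pairs.
    """
    buckets = defaultdict(list)
    for months, rating in responses:
        buckets[months].append(rating)
    return {months: mean(ratings) for months, ratings in sorted(buckets.items())}


# Invented sample data: ratings collected at 3, 6, and 12 months.
sample = [(3, 5), (3, 4), (6, 4), (6, 3), (12, 3), (12, 2)]
print(mean_rating_by_interval(sample))
```

A steady decline across intervals points to a durability problem, while a flat line suggests the product holds up.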

How to Assess Product Performance Through Questions

Performance questions target functionality: Does it do what it should? How well? How consistently?

Include questions about reliability from the customer’s perspective, covering repeated-use scenarios.

How to Evaluate Product Design Satisfaction

Design covers aesthetics, ergonomics, and user experience.

Questions should address ease of use, visual appeal, and how the product fits into daily routines or workflows.

When Should Businesses Send Product Quality Surveys

Timing affects response rates and data accuracy. Send too early and customers lack experience with the product; send too late and they forget details.

The product lifecycle stage determines optimal survey windows:

- Immediate post-purchase (24-48 hours) – packaging, delivery condition, first impressions

- Early use phase (7-14 days) – initial functionality, setup experience, learning curve

- Extended use (30-90 days) – durability, performance consistency, ongoing satisfaction

- Long-term ownership (6-12 months) – reliability, wear patterns, repurchase intent

Consumer products typically need 2-4 weeks before quality assessment makes sense.

Software and digital products can be surveyed sooner, within 7-14 days, since usage patterns emerge faster.

Subscription products benefit from quarterly quality check-ins aligned with billing cycles.
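The lifecycle windows can be turned into concrete send dates from a purchase date. This is a minimal sketch: the stage names and `survey_schedule` are hypothetical, and treating 6-12 months as 180-365 days is an approximation.

```python
from datetime import date, timedelta

# Day offsets from purchase, taken from the lifecycle windows listed earlier.
# Assumption: "6-12 months" is approximated as 180-365 days.
SURVEY_WINDOWS = {
    "immediate_post_purchase": (1, 2),
    "early_use": (7, 14),
    "extended_use": (30, 90),
    "long_term_ownership": (180, 365),
}


def survey_schedule(purchase_date: date) -> dict[str, tuple[date, date]]:
    """Map each lifecycle stage to its (earliest, latest) send date."""
    return {
        stage: (purchase_date + timedelta(days=start),
                purchase_date + timedelta(days=end))
        for stage, (start, end) in SURVEY_WINDOWS.items()
    }


for stage, (earliest, latest) in survey_schedule(date(2024, 1, 1)).items():
    print(f"{stage}: {earliest} to {latest}")
```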

Build surveys using survey form templates to speed deployment at each lifecycle stage.

What Tools Measure Product Quality Survey Results

Survey platforms and analytics tools turn raw responses into quality metrics you can act on.

The right tool depends on survey volume, analysis needs, and existing tech stack.

Survey Platforms for Quality Data Collection

Qualtrics handles enterprise-level product feedback with advanced branching logic and statistical analysis built in.

Typeform creates conversational survey experiences that boost completion rates on quality assessments.

WordPress survey plugins work well for businesses already running WordPress sites.

Medallia and Hotjar combine surveys with behavioral data for deeper quality insights.

Metrics and Scoring Systems

Standard quality metrics include:

- Net Promoter Score (NPS) – likelihood to recommend, scored -100 to +100

- Customer Satisfaction Score (CSAT) – direct satisfaction rating, typically percentage-based

- Customer Effort Score (CES) – ease of product use; lower effort means higher quality perception
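As a worked example, NPS is the percentage of promoters (scores of 9-10) minus the percentage of detractors (scores of 0-6). CSAT is often reported as the share of respondents choosing the top two options on a 5-point scale, though that cutoff is a convention, not a fixed rule. The function names and sample scores below are illustrative.

```python
def nps(scores):
    """Net Promoter Score from 0-10 ratings, in the range -100 to +100."""
    if not scores:
        raise ValueError("no scores provided")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


def csat(ratings, satisfied_min=4):
    """Percentage of ratings at or above satisfied_min.

    Assumption: a 1-5 scale where 4 and 5 count as satisfied.
    """
    if not ratings:
        raise ValueError("no ratings provided")
    satisfied = sum(1 for r in ratings if r >= satisfied_min)
    return 100 * satisfied / len(ratings)


# Invented sample data.
print(nps([10, 9, 8, 7, 6, 3, 10, 9, 5, 10]))  # 5 promoters, 3 detractors of 10 -> 20.0
print(csat([5, 4, 3, 2, 5, 4, 4, 1]))          # 5 of 8 satisfied -> 62.5
```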

J.D. Power and Consumer Reports use proprietary quality scoring that many industries reference for benchmarking.

The Malcolm Baldrige National Quality Award criteria provide a comprehensive quality evaluation framework used by enterprises.

Analysis Methods

Pareto Analysis identifies the small share of quality issues, roughly 20% as a rule of thumb, that causes most complaints, roughly 80%.
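To illustrate Pareto Analysis on survey data, the sketch below assumes that open-ended responses have already been tagged with an issue category, for example by manual coding. It ranks categories by frequency and returns the smallest set that reaches the cumulative threshold. The category names and counts are invented.

```python
from collections import Counter


def pareto(issues, threshold=0.8):
    """Return the most frequent issue categories that together
    account for at least `threshold` of all reported issues."""
    counts = Counter(issues).most_common()  # sorted by frequency, descending
    total = sum(count for _, count in counts)
    vital, cumulative = [], 0
    for issue, count in counts:
        vital.append(issue)
        cumulative += count
        if cumulative / total >= threshold:
            break
    return vital


# Invented sample: 100 tagged complaints across five categories.
tagged = (["battery"] * 50 + ["hinge"] * 35 + ["paint"] * 8
          + ["manual"] * 4 + ["box"] * 3)
print(pareto(tagged))  # battery and hinge cover 85% of complaints
```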

Root Cause Analysis digs into why defects occur, not just what customers report.

Statistical Process Control tracks quality metrics over time to spot trends before they become problems.
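One common Statistical Process Control tool is the p-chart, which flags survey cycles where the defect rate falls outside three standard deviations of the overall rate. The sketch below assumes each cycle records how many respondents answered “Yes” to a defect question and how many responded in total. The function name and data are illustrative.

```python
from math import sqrt


def p_chart_flags(defect_counts, sample_sizes):
    """Flag each survey cycle whose defect proportion lies outside
    the 3-sigma control limits of a p-chart."""
    p_bar = sum(defect_counts) / sum(sample_sizes)  # overall defect rate
    flags = []
    for defects, n in zip(defect_counts, sample_sizes):
        sigma = sqrt(p_bar * (1 - p_bar) / n)
        upper = p_bar + 3 * sigma
        lower = max(0.0, p_bar - 3 * sigma)
        p = defects / n
        flags.append(p > upper or p < lower)
    return flags


# Invented sample: five cycles of 200 responses each.
defects = [10, 12, 9, 11, 30]
sizes = [200] * 5
print(p_chart_flags(defects, sizes))  # only the last cycle is out of control
```

A flagged cycle is a prompt to investigate, for instance by checking whether a production batch or supplier changed in that period.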

Quality Function Deployment connects survey feedback directly to product specifications and engineering requirements.

For structured data collection, proper form design principles apply to quality surveys just like any other feedback form.

FAQ on Survey Questions About Product Quality

What is the best rating scale for product quality surveys?

The Likert Scale (1-5 or 1-7) works best for most product quality assessments. It provides enough range for meaningful analysis without overwhelming respondents. NPS uses a 0-10 scale specifically for recommendation likelihood.

How many questions should a product quality survey include?

Keep surveys between 5-10 questions for optimal completion rates. Longer surveys increase abandonment. Focus on core quality dimensions: durability, performance, and satisfaction rather than covering everything at once.

When is the best time to send product quality surveys?

Send surveys 2-4 weeks after purchase for physical products. This allows enough usage time for quality assessment. Digital products can be surveyed within 7-14 days since users experience functionality faster.

What is the difference between CSAT and NPS for quality measurement?

Customer Satisfaction Score measures direct satisfaction with specific product attributes. Net Promoter Score measures likelihood to recommend, reflecting overall quality perception. Use CSAT for detailed feedback; NPS for benchmarking loyalty trends.

Should product quality surveys be anonymous?

Anonymous surveys typically generate more honest feedback about quality issues. However, identified responses allow follow-up on specific complaints. Offer anonymity as an option while explaining benefits of identification for resolution.

How do I measure product durability through surveys?

Ask time-based questions about wear patterns and condition changes. Include questions like “After 30 days of use, how would you rate product condition?” Compare responses across time intervals to track durability trends.

What open-ended questions work best for quality feedback?

Ask “What one improvement would increase your satisfaction?” and “Describe any quality issues experienced.” These capture specific defect details and improvement ideas that rating scales miss. Limit open-ended questions to 2-3 per survey.

How do I increase response rates on quality surveys?

Keep surveys under 5 minutes, use mobile-friendly design, and send at optimal times. Offer small incentives for completion. Explain how feedback improves products. Personalize invitations with the customer’s name and purchased product.

Can I combine quality surveys with other feedback types?

Yes, but carefully. Combine quality questions with customer service survey questions or feedback survey questions only when topics relate directly. Mixing unrelated topics confuses respondents and reduces data quality.

What tools analyze product quality survey data?

Qualtrics and Medallia offer advanced quality analytics. Google Forms works for basic collection. Use Pareto Analysis to identify top issues and Statistical Process Control for trend monitoring across survey cycles.

Conclusion

Effective survey questions about product quality turn customer opinions into measurable improvements. The data drives real change when you ask the right questions at the right time.

Start with clear quality dimensions: durability, performance, and design satisfaction.

Use Customer Effort Score alongside traditional CSAT metrics to capture the full picture.

Build feedback loop systems that connect survey responses directly to your quality control processes. Root Cause Analysis transforms complaints into product fixes.

Keep surveys short. Send them at logical points in the customer journey. Track results over time with Statistical Process Control, and use Quality Function Deployment to translate them into product specifications.

Your customers already know what needs fixing. Quality surveys simply give them a structured way to tell you.