Most surveys collect answers. Few collect anything useful.

The difference comes down to the questions you ask. Vague prompts get vague responses. Specific, well-structured feedback survey questions get data you can actually use to fix problems, improve retention, and make better product decisions.

This guide covers the exact questions that work across customer satisfaction surveys, employee engagement programs, post-purchase flows, and product research. You’ll find ready-to-use examples for every scenario, from NPS and CSAT measurement to usability testing and exit interviews.

No filler. Just the questions, the reasoning behind them, and the mistakes to avoid.

What Are Feedback Survey Questions

Feedback survey questions are structured prompts used to collect opinions, ratings, and qualitative responses from customers, employees, or users about a specific experience, product, or service. Organizations use these questions inside feedback forms, email campaigns, and in-app prompts to measure customer satisfaction, track sentiment over time, and identify areas that need improvement.

The difference between a useful survey and a wasted one usually comes down to the questions themselves.

A poorly worded question gives you garbage data. A well-constructed one gives you something you can actually act on.

Feedback surveys show up everywhere. Post-purchase emails from Shopify stores. Quarterly employee check-ins run through Qualtrics. SurveyMonkey links dropped into Slack channels after a product launch. Typeform pop-ups on SaaS dashboards.

They all serve the same function: closing the gap between what you think is happening and what people actually experience.

The format varies. Some surveys use a Likert scale (strongly agree to strongly disagree). Others rely on open-ended text boxes or simple star ratings. The right format depends entirely on what you’re measuring, who you’re asking, and when you’re asking them.

Google Forms works fine for quick internal polls. But if you’re running a quarterly brand perception study or tracking Net Promoter Score across multiple customer segments, you’ll need something with more analytical depth, like Medallia or Alchemer.

Feedback Survey Questions

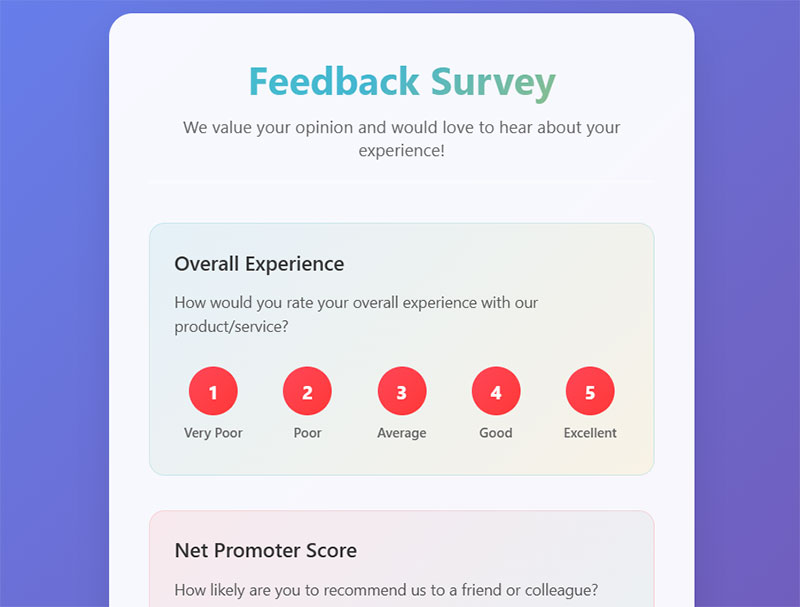

Overall Experience

Overall Experience Rating

Question: How would you rate your overall experience with [product/service/event]?

Type: Multiple Choice (1–5 scale from “Very poor” to “Excellent”)

Purpose: Provides a comprehensive snapshot of customer satisfaction and serves as a key performance indicator for overall business health.

When to Ask: At the end of any customer interaction, purchase, or service completion.

Net Promoter Score

Question: How likely are you to recommend us to a friend or colleague?

Type: Scale (0–10 where 0 is “Not at all likely” and 10 is “Extremely likely”)

Purpose: Measures customer loyalty and predicts business growth by identifying promoters, passives, and detractors.

When to Ask: After significant interactions, purchases, or periodically for ongoing relationships.

Overall Satisfaction Level

Question: What is your overall satisfaction level on a scale of 1-10?

Type: Scale (1–10 where 1 is “Completely dissatisfied” and 10 is “Completely satisfied”)

Purpose: Quantifies general contentment and helps track satisfaction trends over time.

When to Ask: During regular check-ins, after service completion, or in periodic customer surveys.

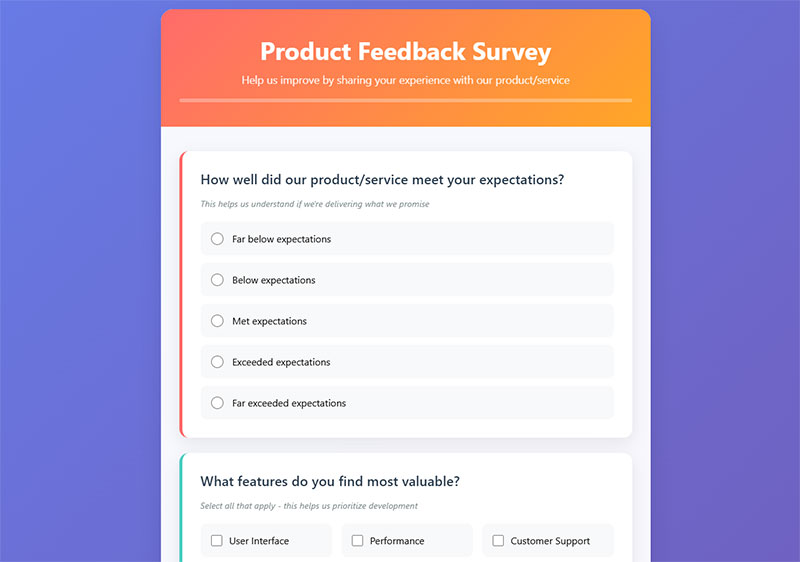

Product/Service Quality

Expectation Alignment

Question: How well did our product/service meet your expectations?

Type: Multiple Choice (5-point scale from “Far below expectations” to “Far exceeded expectations”)

Purpose: Identifies gaps between customer expectations and actual delivery, helping improve marketing messaging and product development.

When to Ask: Shortly after purchase or first use of the product/service.

Most Valuable Features

Question: What features do you find most valuable?

Type: Multiple Choice (list of features) or Open-ended

Purpose: Identifies key value drivers to emphasize in marketing and prioritize in development efforts.

When to Ask: After users have had sufficient time to explore and use various features.

Least Useful Features

Question: What features do you find least useful or unnecessary?

Type: Multiple Choice (list of features) or Open-ended

Purpose: Helps streamline offerings, reduce complexity, and reallocate development resources to more valuable features.

When to Ask: During comprehensive product reviews or when considering feature updates.

Competitive Quality Comparison

Question: How would you rate the quality compared to similar products/services?

Type: Multiple Choice (5-point scale from “Much worse” to “Much better”)

Purpose: Benchmarks competitive positioning and identifies areas where you lead or lag behind competitors.

When to Ask: When customers have experience with competitors or during market research initiatives.

Issues and Problems

Question: Were there any issues or problems you encountered?

Type: Yes/No followed by open-ended explanation if “Yes”

Purpose: Identifies pain points, bugs, or service failures that need immediate attention and resolution.

When to Ask: Immediately after problem resolution or during regular quality assessments.

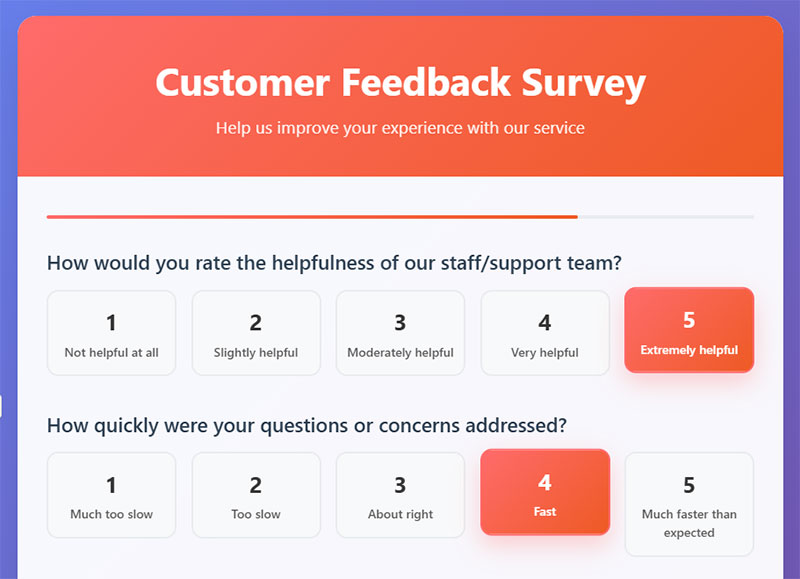

Customer Service

Staff Helpfulness Rating

Question: How would you rate the helpfulness of our staff/support team?

Type: Multiple Choice (1–5 scale from “Not helpful at all” to “Extremely helpful”)

Purpose: Evaluates team performance and identifies training needs or recognition opportunities.

When to Ask: Immediately after any customer service interaction or support request.

Response Time Satisfaction

Question: How quickly were your questions or concerns addressed?

Type: Multiple Choice (5-point scale from “Much too slow” to “Much faster than expected”)

Purpose: Measures efficiency of support processes and helps set appropriate response time expectations.

When to Ask: After support tickets are resolved or customer inquiries are answered.

Staff Knowledge Assessment

Question: How would you rate our team’s knowledge of the product/service?

Type: Multiple Choice (1–5 scale from “Very poor knowledge” to “Excellent knowledge”)

Purpose: Identifies knowledge gaps in staff training and ensures customers receive accurate information.

When to Ask: Following technical support interactions or product consultations.

Communication Professionalism

Question: How professional and courteous was our communication?

Type: Multiple Choice (1–5 scale from “Very unprofessional” to “Very professional”)

Purpose: Maintains service quality standards and identifies coaching opportunities for customer-facing staff.

When to Ask: After any direct communication with customers, especially for complaint resolution.

Usability and Convenience

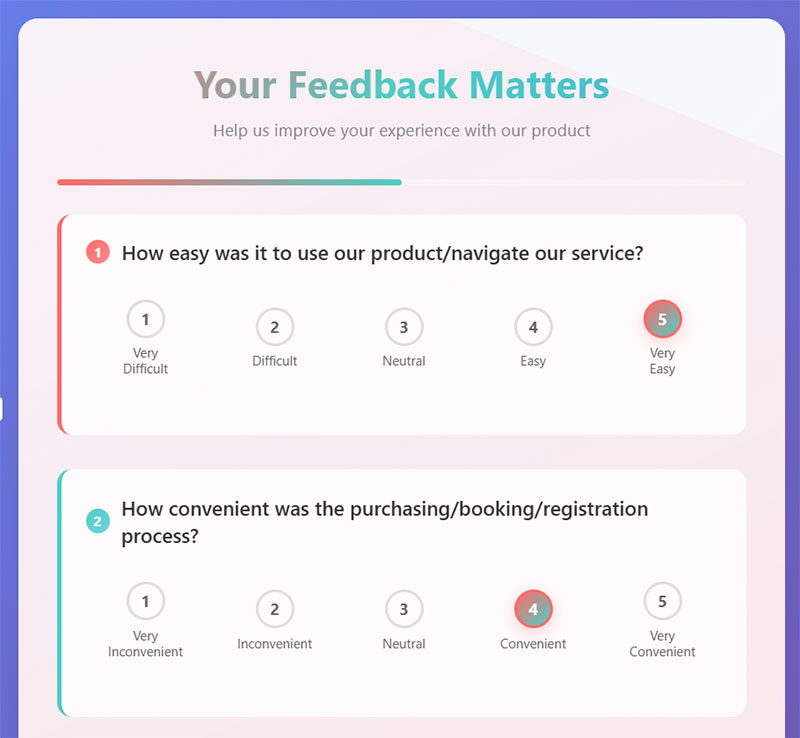

Ease of Use Rating

Question: How easy was it to use our product/navigate our service?

Type: Multiple Choice (1–5 scale from “Very difficult” to “Very easy”)

Purpose: Identifies usability barriers and guides user experience improvements.

When to Ask: During onboarding processes or after initial product exploration.

Process Convenience

Question: How convenient was the purchasing/booking/registration process?

Type: Multiple Choice (1–5 scale from “Very inconvenient” to “Very convenient”)

Purpose: Optimizes conversion funnels and reduces abandonment rates by identifying friction points.

When to Ask: Immediately after completing any transactional process.

Usability Improvement Suggestions

Question: What would make our product/service easier to use?

Type: Open-ended

Purpose: Gathers specific improvement ideas directly from users to guide UX/UI enhancements.

When to Ask: During user testing sessions or comprehensive experience surveys.

Technical Difficulties

Question: Did you encounter any technical difficulties?

Type: Yes/No followed by detailed description if “Yes”

Purpose: Identifies bugs, system issues, or technical barriers that impact user experience.

When to Ask: After system interactions or when troubleshooting user problems.

Value and Pricing

Value for Money Assessment

Question: How would you rate the value for money you received?

Type: Multiple Choice (1–5 scale from “Very poor value” to “Excellent value”)

Purpose: Evaluates pricing strategy effectiveness and identifies opportunities for value communication or pricing adjustments.

When to Ask: After purchase completion or during renewal discussions.

Competitive Price Comparison

Question: How do our prices compare to competitors?

Type: Multiple Choice (5-point scale from “Much more expensive” to “Much less expensive”)

Purpose: Benchmarks pricing position in the market and informs competitive pricing strategies.

When to Ask: During market research or when customers are evaluating alternatives.

Pricing Fairness Perception

Question: Would you consider our pricing fair and reasonable?

Type: Yes/No with optional explanation

Purpose: Assesses price sensitivity and helps justify pricing to stakeholders and customers.

When to Ask: When introducing new pricing or addressing pricing objections.

Improvement and Suggestions

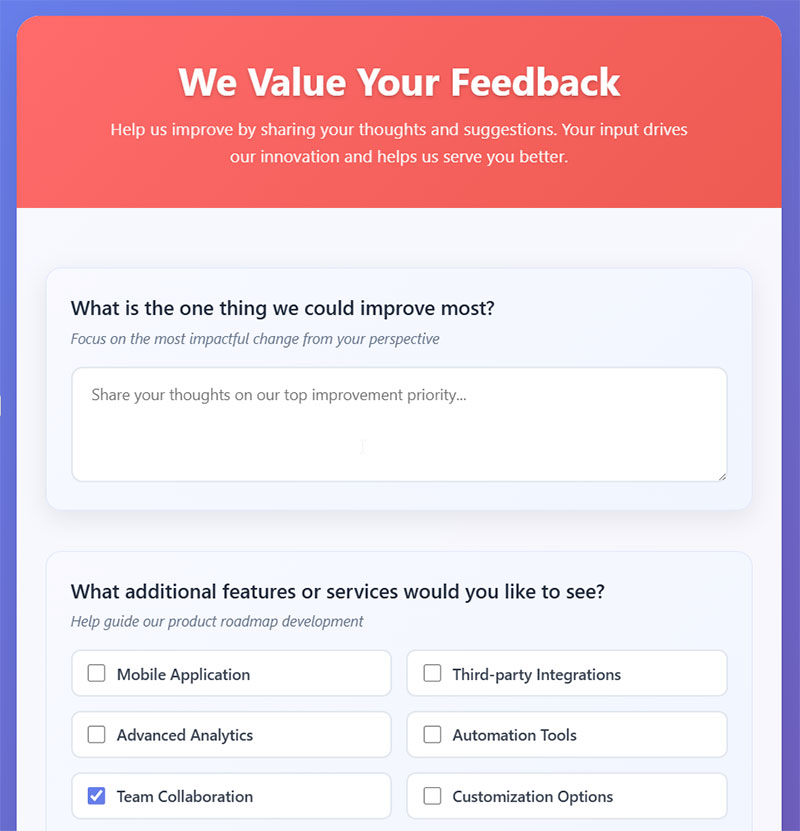

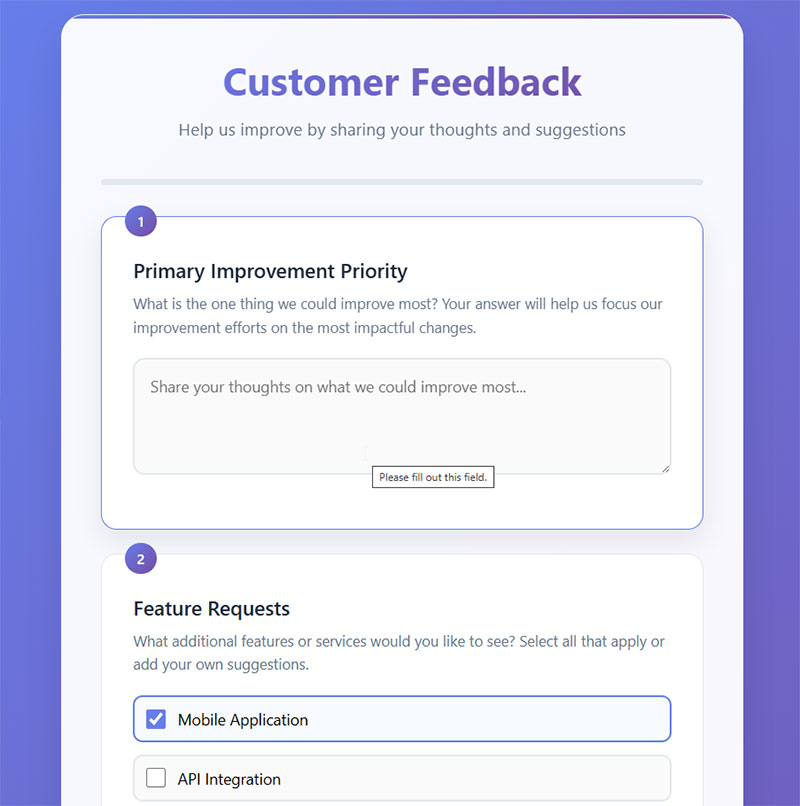

Primary Improvement Priority

Question: What is the one thing we could improve most?

Type: Open-ended

Purpose: Focuses improvement efforts on the most impactful changes from the customer perspective.

When to Ask: During comprehensive reviews or when planning major updates.

Feature Requests

Question: What additional features or services would you like to see?

Type: Open-ended or Multiple Choice (list of potential features)

Purpose: Guides product roadmap development and identifies new revenue opportunities.

When to Ask: During strategic planning periods or when engaging power users.

Elimination Recommendations

Question: Is there anything we should stop doing?

Type: Open-ended

Purpose: Identifies wasteful practices, annoying features, or processes that detract from customer experience.

When to Ask: During efficiency reviews or when streamlining operations.

Increased Usage Drivers

Question: What would make you more likely to use our product/service again?

Type: Open-ended

Purpose: Identifies retention drivers and helps develop customer loyalty strategies.

When to Ask: With at-risk customers or during retention campaigns.

Demographics and Context

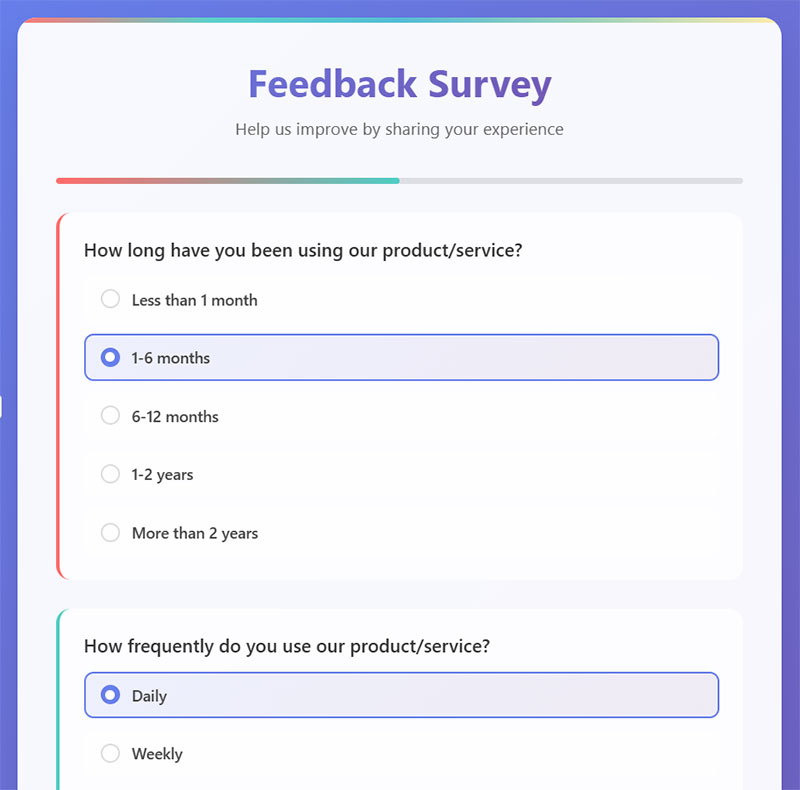

Usage Duration

Question: How long have you been using our product/service?

Type: Multiple Choice (time ranges like “Less than 1 month,” “1-6 months,” etc.)

Purpose: Segments feedback by customer maturity and identifies patterns in the customer journey.

When to Ask: In any comprehensive survey to provide context for other responses.
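Segmenting by customer maturity can be as simple as grouping ratings by the usage-duration answer. Here is a minimal Python sketch; the response records, field names, and the `mean_rating_by_segment` helper are all hypothetical and only illustrate the idea.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical responses: each pairs a usage-duration answer with a 1-5 rating.
responses = [
    {"tenure": "Less than 1 month", "rating": 3},
    {"tenure": "Less than 1 month", "rating": 2},
    {"tenure": "1-6 months", "rating": 4},
    {"tenure": "1-6 months", "rating": 5},
    {"tenure": "More than 6 months", "rating": 4},
]


def mean_rating_by_segment(rows, segment_key="tenure", value_key="rating"):
    """Group ratings by a segment answer and average each group."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[segment_key]].append(row[value_key])
    return {segment: mean(values) for segment, values in groups.items()}


by_tenure = mean_rating_by_segment(responses)
for segment, average in by_tenure.items():
    print(f"{segment}: {average}")
```

A gap between new and long-term customers, like the one in this made-up data, is the kind of pattern the overall average would hide.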

Usage Frequency

Question: How frequently do you use it?

Type: Multiple Choice (frequency options like “Daily,” “Weekly,” “Monthly,” etc.)

Purpose: Understands engagement levels and identifies opportunities to increase usage.

When to Ask: During regular check-ins or when analyzing user behavior patterns.

Primary Use Case

Question: What is your primary use case or reason for choosing us?

Type: Multiple Choice (list of common use cases) or Open-ended

Purpose: Identifies core value propositions and helps tailor marketing messages to different customer segments.

When to Ask: During onboarding or when developing customer personas.

Discovery Channel

Question: How did you first hear about us?

Type: Multiple Choice (list of marketing channels and referral sources)

Purpose: Measures marketing channel effectiveness and optimizes customer acquisition strategies.

When to Ask: During initial interactions or customer onboarding processes.

Open-Ended Questions

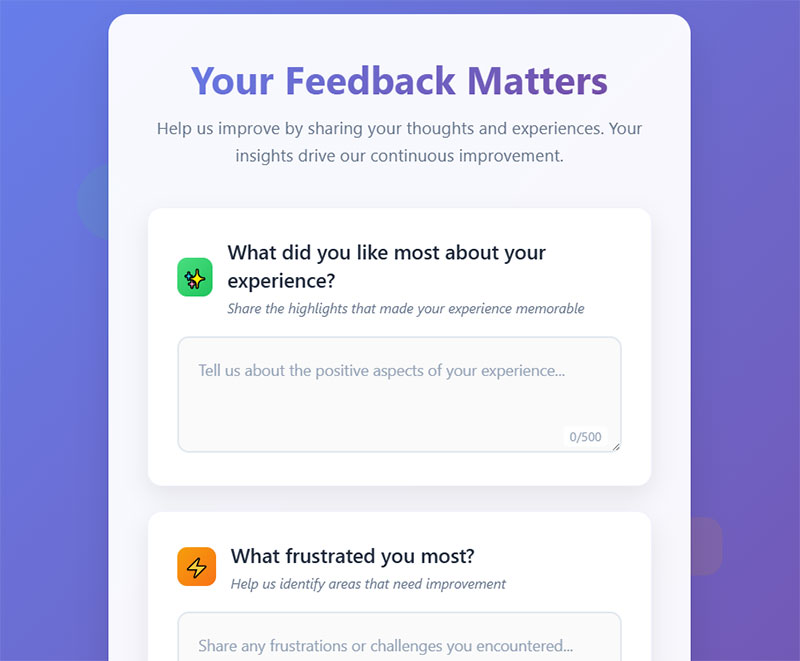

Positive Experience Highlights

Question: What did you like most about your experience?

Type: Open-ended

Purpose: Identifies strengths to maintain and amplify, and provides content for testimonials and case studies.

When to Ask: After positive interactions or successful project completions.

Frustration Points

Question: What frustrated you most?

Type: Open-ended

Purpose: Uncovers emotional pain points that quantitative metrics might miss, enabling targeted improvements.

When to Ask: After problem resolution or during comprehensive experience reviews.

Additional Feedback

Question: Any additional comments or suggestions?

Type: Open-ended

Purpose: Captures unexpected insights and gives customers a voice for concerns not covered by structured questions.

When to Ask: At the end of any survey to ensure comprehensive feedback collection.

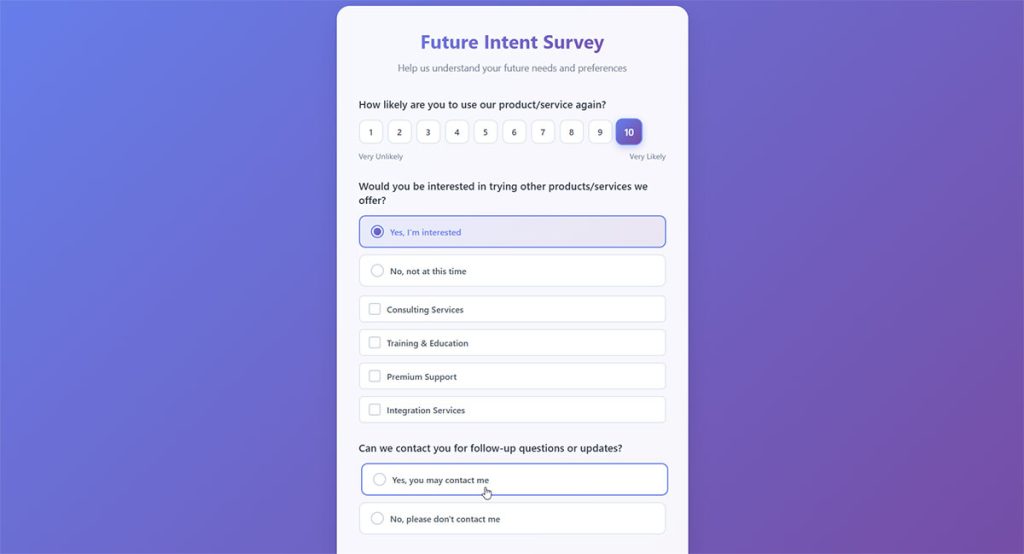

Future Intent

Repeat Usage Likelihood

Question: How likely are you to use our product/service again?

Type: Scale (1–10 where 1 is “Very unlikely” and 10 is “Very likely”)

Purpose: Predicts customer retention and identifies at-risk accounts requiring intervention.

When to Ask: After project completion or during regular relationship reviews.

Cross-Selling Interest

Question: Would you be interested in trying other products/services we offer?

Type: Yes/No with optional specification of interest areas

Purpose: Identifies upselling and cross-selling opportunities to increase customer lifetime value.

When to Ask: With satisfied customers or during account expansion discussions.

Follow-up Permission

Question: Can we contact you for follow-up questions or updates?

Type: Yes/No with preferred contact method

Purpose: Builds permission for ongoing communication and enables deeper relationship development.

When to Ask: At the end of surveys or after positive interactions to maintain engagement.

What Are the Types of Feedback Survey Questions

There are several core types of survey questions, each suited for different measurement goals.

- Open-ended questions let respondents write freely. “What could we improve about your checkout experience?” No predefined answers. Useful for qualitative insights but harder to analyze at scale.

- Closed-ended questions force a choice from a set list. Faster to answer, easier to quantify.

- Likert scale questions measure agreement or satisfaction on a 5- or 7-point scale. “How satisfied are you with our response time?” from “Very dissatisfied” to “Very satisfied.”

- Rating scale questions assign a numeric value. The classic 0-to-10 NPS question falls here.

- Multiple choice questions offer several options where one or more can be selected. Best for demographic survey questions or categorizing preferences.

- Dichotomous questions are yes/no or true/false. Binary, fast, high completion rates.

- Matrix questions group related items into a grid. Efficient for comparing multiple service attributes, but can cause survey fatigue if overused.

The mix you choose depends on whether you’re after quantitative benchmarking or qualitative depth. Most effective surveys combine at least two or three of these formats.
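If you build surveys in code, the question types above map naturally onto a small data model. The sketch below is one possible shape, not any survey tool's actual schema; `QuestionType`, `Question`, and `format_mix` are illustrative names.

```python
from dataclasses import dataclass, field
from enum import Enum


class QuestionType(Enum):
    OPEN_ENDED = "open_ended"
    LIKERT = "likert"
    RATING = "rating"
    MULTIPLE_CHOICE = "multiple_choice"
    DICHOTOMOUS = "dichotomous"
    MATRIX = "matrix"


@dataclass
class Question:
    text: str
    qtype: QuestionType
    options: list[str] = field(default_factory=list)  # stays empty for open-ended


def format_mix(questions: list[Question]) -> dict[str, int]:
    """Count how many questions of each type a survey contains."""
    counts: dict[str, int] = {}
    for q in questions:
        counts[q.qtype.value] = counts.get(q.qtype.value, 0) + 1
    return counts


survey = [
    Question(
        "How satisfied are you with our response time?",
        QuestionType.LIKERT,
        ["Very dissatisfied", "Dissatisfied", "Neutral", "Satisfied", "Very satisfied"],
    ),
    Question("What could we improve about your checkout experience?", QuestionType.OPEN_ENDED),
    Question("Did you encounter any technical difficulties?", QuestionType.DICHOTOMOUS, ["Yes", "No"]),
]

mix = format_mix(survey)
print(mix)
```

Counting the formats in a draft is a quick way to check that a survey is not leaning entirely on one type.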

How to Write Effective Feedback Survey Questions

Writing effective feedback survey questions requires clarity, neutrality, and a single focus per question. Every question should target one specific thing you want to learn, phrased in a way that doesn’t push the respondent toward a particular answer.

Don Dillman’s Tailored Design Method, developed during his survey research at Washington State University, remains one of the most cited frameworks for survey methodology. Its core rule: make every question easy to understand on the first read.

That sounds obvious. But most surveys fail here. People add jargon, double up on concepts in a single question, or use scales that don’t match the question being asked.

If you’re building these surveys on a website, following best practices for creating feedback forms from the start saves you from redesigning later.

What Makes a Good Feedback Survey Question

A good feedback question is specific, neutral, and asks about one thing only. It uses language the respondent already understands, not internal company terms.

Bad: “How would you rate our amazing new onboarding experience and support team?” (leading, double-barreled).

Good: “How easy was it to complete the account setup process?” (single-focus, neutral, measurable).

Match the scale to the question. Don’t ask “How likely are you to recommend us?” on a 3-point scale. The standard NPS question from Fred Reichheld and Bain & Company uses a 0-to-10 scale, and the promoter, passive, and detractor cutoffs are defined on it.

What Are Common Mistakes in Writing Feedback Survey Questions

Double-barreled questions ask two things at once. “Was the product affordable and high-quality?” If the answer is yes to one and no to the other, the data is useless.

Leading questions push a specific answer. “How great was your experience?” assumes the experience was great.

Loaded questions contain assumptions. “Why do you prefer our service over competitors?” presumes they do.

Using company jargon, adding too many matrix grids, or running a 40-question survey when 12 would do. All common problems. If you want to dig deeper into avoiding these pitfalls, there’s solid guidance on avoiding survey fatigue that covers length and cognitive load.
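A rough automated check can catch the most obvious of these mistakes before a survey goes out. The sketch below is a crude keyword heuristic, not an established method: the `LOADED_WORDS` list and the `lint_question` function are assumptions made for illustration, and every flag still needs human review.

```python
import re

# Illustrative word list only; extend it for your own surveys.
LOADED_WORDS = {"amazing", "great", "excellent", "awesome", "best"}


def lint_question(text: str) -> list[str]:
    """Return warnings for wording that often signals a flawed question."""
    warnings = []
    words = set(re.findall(r"[a-z']+", text.lower()))
    if "and" in words:
        # "and" often joins two separate things being asked about
        warnings.append("possible double-barreled question")
    if words & LOADED_WORDS:
        warnings.append("possible leading wording")
    return warnings


bad = lint_question(
    "How would you rate our amazing new onboarding experience and support team?"
)
good = lint_question("How easy was it to complete the account setup process?")
print(bad)
print(good)
```

Expect false positives: a legitimate question can contain “and” without asking two things. Treat the output as a prompt to reread the question, not a verdict.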

Customer Feedback Survey Questions

Customer feedback survey questions measure how people experience your product, service, or brand at specific touchpoints. They’re used post-purchase, after support interactions, and on a recurring schedule to track satisfaction trends.

Three standardized measurement systems dominate this space: Customer Satisfaction Score (CSAT), Net Promoter Score (NPS), and Customer Effort Score (CES). Each one answers a different question about the customer relationship.

Collecting this feedback through your website is straightforward if you already have a survey form set up. The real work is in the question selection.

Here are customer feedback questions that actually produce usable data:

- “How satisfied are you with your recent purchase?” (CSAT, 1-5 scale)

- “What was the main reason for your score?” (open-ended follow-up)

- “How likely are you to buy from us again?” (retention signal)

- “How well did our product meet your expectations?” (expectation gap)

- “What almost stopped you from completing your purchase?” (friction identifier)

- “How would you rate the value for money of our product?” (perceived value)

- “Is there anything we could do differently?” (open improvement prompt)

- “How would you describe our brand to a friend?” (brand perception, open-ended)

For e-commerce specifically, post-purchase survey questions focused on checkout, delivery, and unboxing are where you’ll find the most actionable friction points.

What Are Customer Satisfaction Survey Questions

CSAT questions ask customers to rate satisfaction on a scale, typically 1-to-5 or 1-to-7. They’re the most widely used feedback metric in SaaS, retail, hospitality, and healthcare.

Standard CSAT examples include: “How satisfied are you with [specific interaction]?” and “Rate your overall experience with our service.” Follow each rating with an open-text “Why?” to get context behind the number. The American Customer Satisfaction Index (ACSI) tracks customer satisfaction at a national level across industries.
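To turn raw ratings into a single CSAT figure, a common convention (assumed here, not prescribed by this guide) is to report the share of respondents who picked one of the top two options on a 5-point scale. A minimal Python sketch, with a hypothetical `csat_score` helper and made-up ratings:

```python
def csat_score(ratings: list[int], satisfied_min: int = 4) -> float:
    """Percentage of ratings at or above the 'satisfied' threshold."""
    if not ratings:
        raise ValueError("need at least one rating")
    satisfied = sum(1 for r in ratings if r >= satisfied_min)
    return 100.0 * satisfied / len(ratings)


# Made-up 1-5 ratings for illustration.
ratings = [5, 4, 3, 5, 2, 4, 4, 1, 5, 3]
score = csat_score(ratings)
print(f"CSAT: {score:.0f}%")
```

On a 1-to-7 scale you would raise `satisfied_min` accordingly. Whatever threshold you pick, keep it fixed so the trend stays comparable over time.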

What Are Net Promoter Score Questions

The NPS question is: “On a scale of 0 to 10, how likely are you to recommend [company/product] to a friend or colleague?” Respondents who answer 9-10 are promoters, 7-8 are passives, and 0-6 are detractors.

Fred Reichheld developed NPS at Bain & Company and first published it in Harvard Business Review in 2003. The single-number score is useful for benchmarking, but it needs a follow-up question to be actionable. “What’s the primary reason for your score?” is the standard second question. You can explore more variations in a dedicated guide on NPS survey questions.
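The score itself is the percentage of promoters minus the percentage of detractors, so it ranges from -100 to 100. Here is a short Python sketch of that arithmetic; the `nps` function name and the sample scores are illustrative.

```python
def nps(scores: list[int]) -> float:
    """Net Promoter Score: % promoters (9-10) minus % detractors (0-6)."""
    if not scores:
        raise ValueError("need at least one score")
    if any(not 0 <= s <= 10 for s in scores):
        raise ValueError("scores must be between 0 and 10")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    # Passives (7-8) count toward the total but toward neither group.
    return 100.0 * (promoters - detractors) / len(scores)


# Made-up responses: 4 promoters, 3 passives, 3 detractors.
scores = [10, 9, 9, 8, 7, 7, 6, 5, 3, 10]
result = nps(scores)
print(f"NPS: {result:+.0f}")
```

Passives still matter: they dilute both percentages, which is why converting a passive into a promoter moves the score.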

What Are Customer Effort Score Questions

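CES measures how easy it was for a customer to accomplish something. The original CES question: “How much effort did you personally have to put forth to handle your request?” on a 1-to-5 scale. Later versions reword it as an agreement statement about how easy the company made it to handle the issue, typically rated on a 1-to-7 scale.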

A 2010 Harvard Business Review study found that reducing customer effort is a stronger loyalty driver than exceeding expectations. CES works best right after support interactions or task completion. NPS tells you sentiment; CES tells you friction. Use both, but CES is better suited to customer service survey questions specifically.
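CES is usually reported as a simple average of the responses. The sketch below assumes that convention and a 1-to-7 scale by default; the `ces` helper and the sample data are hypothetical.

```python
from statistics import mean


def ces(scores: list[int], scale_max: int = 7) -> float:
    """Average effort score on a 1..scale_max scale."""
    if not scores:
        raise ValueError("need at least one score")
    if any(not 1 <= s <= scale_max for s in scores):
        raise ValueError(f"scores must be between 1 and {scale_max}")
    return mean(scores)


# Made-up responses collected right after support interactions.
responses = [2, 3, 1, 4, 2, 6, 2]
average_effort = ces(responses)
print(f"CES: {average_effort:.2f}")
```

Whether a high number is good or bad depends on the wording: with an effort question, lower is better; with an “easy to handle” agreement statement, higher is better.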

Employee Feedback Survey Questions

Employee feedback survey questions measure engagement, satisfaction, management quality, and workplace culture. They’re typically deployed quarterly, biannually, or during specific transitions like onboarding and offboarding.

The Gallup Q12 framework is the most researched employee engagement instrument, consisting of 12 core questions validated across millions of respondents. It covers clarity of expectations, recognition, development opportunities, and sense of purpose.

Anonymous surveys tend to produce more honest responses, particularly around management quality and compensation. But anonymity also makes follow-up harder, so there’s always a tradeoff.

Strong employee feedback questions include:

- “Do you know what is expected of you at work?” (Gallup Q12, role clarity)

- “In the last seven days, have you received recognition for doing good work?”

- “Do you have a best friend at work?” (belonging indicator)

- “Does your manager seem to care about you as a person?”

- “How confident are you in the company’s direction over the next year?”

- “Do you have the tools and resources to do your job well?”

- “How likely are you to recommend this company as a place to work?” (eNPS)

- “What one thing would you change about your day-to-day work?”

What Are Employee Engagement Survey Questions

Engagement surveys go beyond “are you happy?” to measure psychological investment in the work itself. Gallup’s research across 2.7 million employees shows that engaged teams have 41% lower absenteeism and 17% higher productivity.

Key engagement questions: “Do you feel your opinions count at work?” and “In the last six months, has someone talked to you about your progress?” Keep these anonymous. Send them quarterly through a dedicated tool or a simple survey form template to track changes over time.

What Are 360-Degree Feedback Survey Questions

360-degree feedback collects input from four perspectives: self-assessment, manager review, peer evaluation, and direct report feedback. Each group answers the same core questions, revealing gaps between self-perception and external observation.

Manager-rated example: “How effectively does this person communicate priorities to their team?” Peer-rated: “How well does this person collaborate across departments?” Self-assessment: “How confident are you in your conflict resolution skills?” Direct report: “Does yo


What Is the Purpose of Feedback Survey Questions

Feedback survey questions exist to turn subjective experience into measurable data, giving teams a clear basis for decisions about products, services, and internal operations. Without structured feedback collection, you’re guessing.

According to Gallup, business units in the top quartile of employee engagement see 21% higher profitability than those in the bottom quartile. Harvard Business Review research on Customer Effort Score showed that reducing effort (not increasing delight) is the strongest predictor of repeat purchases.

The response rate matters as much as the questions. A 2024 Qualtrics benchmark report puts the average survey response rate between 20% and 30% for external customer surveys, higher for in-app and post-interaction triggers.

What Are the Types of Feedback Survey Questions

There are several core types of survey questions, each suited for different measurement goals.

- Open-ended questions let respondents write freely. “What could we improve about your checkout experience?” No predefined answers. Useful for qualitative insights but harder to analyze at scale.

- Closed-ended questions force a choice from a set list. Faster to answer, easier to quantify.

- Likert scale questions measure agreement or satisfaction on a 5 or 7-point scale. “How satisfied are you with our response time?” from “Very dissatisfied” to “Very satisfied.”

- Rating scale questions assign a numeric value. The classic 1-to-10 NPS question falls here.

- Multiple choice questions offer several options where one or more can be selected. Best for demographic survey questions or categorizing preferences.

- Dichotomous questions are yes/no or true/false. Binary, fast, high completion rates.

- Matrix questions group related items into a grid. Efficient for comparing multiple service attributes, but can cause survey fatigue if overused.

The mix you choose depends on whether you’re after quantitative benchmarking or qualitative depth. Most effective surveys combine at least two or three of these formats.

How to Write Effective Feedback Survey Questions

Writing effective feedback survey questions requires clarity, neutrality, and a single focus per question. Every question should target one specific thing you want to learn, phrased in a way that doesn’t push the respondent toward a particular answer.

Don Dillman’s Tailored Design Method, published through Washington State University, remains one of the most cited frameworks for survey methodology. Its core rule: make every question easy to understand on the first read.

That sounds obvious. But most surveys fail here. People add jargon, double up on concepts in a single question, or use scales that don’t match the question being asked.

If you’re building these surveys on a website, following best practices for creating feedback forms from the start saves you from redesigning later.

What Makes a Good Feedback Survey Question

A good feedback question is specific, neutral, and asks about one thing only. It uses language the respondent already understands, not internal company terms.

Bad: “How would you rate our amazing new onboarding experience and support team?” (leading, double-barreled).

Good: “How easy was it to complete the account setup process?” (single-focus, neutral, measurable).

Match the scale to the question. Don’t ask “How likely are you to recommend us?” on a 3-point scale. The standard NPS question from Fred Reichheld and Bain & Company uses 0-to-10 for a reason.

What Are Common Mistakes in Writing Feedback Survey Questions

Double-barreled questions ask two things at once. “Was the product affordable and high-quality?” If the answer is yes to one and no to the other, the data is useless.

Leading questions push a specific answer. “How great was your experience?” assumes the experience was great.

Loaded questions contain assumptions. “Why do you prefer our service over competitors?” presumes they do.

Using company jargon, adding too many matrix grids, or running a 40-question survey when 12 would do. All common problems. If you want to dig deeper into avoiding these pitfalls, there’s solid guidance on avoiding survey fatigue that covers length and cognitive load.

Customer Feedback Survey Questions

Customer feedback survey questions measure how people experience your product, service, or brand at specific touchpoints. They’re used post-purchase, after support interactions, and on a recurring schedule to track satisfaction trends.

Three standardized measurement systems dominate this space: Customer Satisfaction Score (CSAT), Net Promoter Score (NPS), and Customer Effort Score (CES). Each one answers a different question about the customer relationship.

Collecting this feedback through your website is straightforward if you already have a survey form set up. The real work is in the question selection.

Here are customer feedback questions that actually produce usable data:

- “How satisfied are you with your recent purchase?” (CSAT, 1-5 scale)

- “What was the main reason for your score?” (open-ended follow-up)

- “How likely are you to buy from us again?” (retention signal)

- “How well did our product meet your expectations?” (expectation gap)

- “What almost stopped you from completing your purchase?” (friction identifier)

- “How would you rate the value for money of our product?” (perceived value)

- “Is there anything we could do differently?” (open improvement prompt)

- “How would you describe our brand to a friend?” (brand perception, open-ended)

For e-commerce specifically, post-purchase survey questions focused on checkout, delivery, and unboxing are where you’ll find the most actionable friction points.

What Are Customer Satisfaction Survey Questions

CSAT questions ask customers to rate satisfaction on a scale, typically 1-to-5 or 1-to-7. They’re the most widely used feedback metric in SaaS, retail, hospitality, and healthcare.

Standard CSAT examples include: “How satisfied are you with [specific interaction]?” and “Rate your overall experience with our service.” Follow each rating with an open-text “Why?” to get context behind the number. The American Customer Satisfaction Index (ACSI) tracks these scores at a national level across industries.

What Are Net Promoter Score Questions

The NPS question is: “On a scale of 0 to 10, how likely are you to recommend [company/product] to a friend or colleague?” Scores 9-10 are promoters, 7-8 passive, 0-6 detractors.

Fred Reichheld developed NPS at Bain & Company, first published in Harvard Business Review in 2003. The single-number score is useful for benchmarking, but it needs a follow-up question to be actionable. “What’s the primary reason for your score?” is the standard second question. You can explore more variations in a dedicated guide on NPS survey questions.

What Are Customer Effort Score Questions

CES measures how easy it was for a customer to accomplish something. The original CES question: “How much effort did you personally have to put forth to handle your request?” on a 1-to-7 scale.

A 2010 Harvard Business Review study found that reducing customer effort is a stronger loyalty driver than exceeding expectations. CES works best right after support interactions or task completion. When NPS tells you sentiment, CES tells you friction. Use both, but CES is better for customer service survey questions specifically.

Employee Feedback Survey Questions

Employee feedback survey questions measure engagement, satisfaction, management quality, and workplace culture. They’re typically deployed quarterly, biannually, or during specific transitions like onboarding and offboarding.

The Gallup Q12 framework is the most researched employee engagement instrument, consisting of 12 core questions validated across millions of respondents. It covers clarity of expectations, recognition, development opportunities, and sense of purpose.

Anonymous surveys tend to produce more honest responses, particularly around management quality and compensation. But anonymity also makes follow-up harder, so there’s always a tradeoff.

Strong employee feedback questions include:

- “Do you know what is expected of you at work?” (Gallup Q12, role clarity)

- “In the last seven days, have you received recognition for doing good work?”

- “Do you have a best friend at work?” (belonging indicator)

- “Does your manager seem to care about you as a person?”

- “How confident are you in the company’s direction over the next year?”

- “Do you have the tools and resources to do your job well?”

- “How likely are you to recommend this company as a place to work?” (eNPS)

- “What one thing would you change about your day-to-day work?”

What Are Employee Engagement Survey Questions

Engagement surveys go beyond “are you happy?” to measure psychological investment in the work itself. Gallup’s engagement meta-analyses, which span millions of employees, have reported that the most engaged teams show 41% lower absenteeism and 17% higher productivity than the least engaged.

Key engagement questions: “Do you feel your opinions count at work?” and “In the last six months, has someone talked to you about your progress?” Keep these anonymous. Send them quarterly through a dedicated tool or a simple survey form template to track changes over time.

What Are 360-Degree Feedback Survey Questions

360-degree feedback collects input from four perspectives: self-assessment, manager review, peer evaluation, and direct report feedback. Each group rates the same core competencies, with wording adapted to its vantage point, revealing gaps between self-perception and external observation.

Manager-rated example: “How effectively does this person communicate priorities to their team?” Peer-rated: “How well does this person collaborate across departments?” Self-assessment: “How confident are you in your conflict resolution skills?” Direct report: “Does your manager provide clear, timely feedback?”

What Are Exit Interview Survey Questions

Exit interviews capture why people leave. The data is only valuable if you aggregate it over time and look for patterns, not individual complaints.

Effective exit questions: “What prompted you to start looking for a new role?” “Did you feel you had enough opportunities for growth?” “How would you describe the management culture?” “Would you consider returning to this company?” “What could we have done differently to keep you?”

Product Feedback Survey Questions

Product feedback questions connect user experience directly to the development roadmap. They measure usability, feature satisfaction, and product-market fit across the product lifecycle, from beta testing to mature feature evaluation.

Sean Ellis, who coined the term “growth hacking,” created the most widely used product-market fit question: “How would you feel if you could no longer use this product?” His benchmark: if 40% or more of respondents answer “very disappointed,” that’s a strong signal you’ve hit product-market fit.

That single question has influenced product strategy at hundreds of startups. But it’s just one data point.
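
Checking the 40% benchmark is simple counting. The sketch below assumes the commonly used three answer options (“very disappointed,” “somewhat disappointed,” “not disappointed”); only the first appears in this article, and the response data is invented for illustration.

```python
from collections import Counter

PMF_THRESHOLD = 0.40  # the 40% "very disappointed" benchmark


def very_disappointed_share(responses):
    """Fraction of respondents who answered 'very disappointed'."""
    counts = Counter(responses)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("need at least one response")
    return counts["very disappointed"] / total


# Hypothetical results from 20 active users
responses = (
    ["very disappointed"] * 9
    + ["somewhat disappointed"] * 8
    + ["not disappointed"] * 3
)
share = very_disappointed_share(responses)
print(f"{share:.0%} very disappointed, benchmark met: {share >= PMF_THRESHOLD}")
```

With small samples the share swings a lot from one respondent to the next, so treat a result near the threshold as inconclusive rather than as a pass or fail.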

Broader product feedback questions worth using:

- “What is the main benefit you get from using our product?”

- “What feature do you use most frequently?”

- “What feature is missing that would make this product more useful for you?”

- “How would you rate the overall quality of the product?” (For deeper coverage, see survey questions about product quality.)

- “How does our product compare to alternatives you’ve tried?”

- “What task were you trying to accomplish when you first started using this product?”

Collecting this through a simple website form embedded on your product page keeps the feedback loop short, and it can lift response rates compared with email-based surveys because users answer while the experience is still in front of them.

What Are Usability Feedback Questions

Usability questions measure how easily someone can accomplish tasks within your product. The System Usability Scale (SUS), developed by John Brooke in 1986, is a standardized 10-item questionnaire scored from 0 to 100. The average SUS score is 68, so a result above that is better than average.
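
SUS scoring is not a plain average, which trips people up. Each item is answered from 1 (strongly disagree) to 5 (strongly agree); odd-numbered items are positively worded and contribute the answer minus 1, even-numbered items are negatively worded and contribute 5 minus the answer, and the sum is multiplied by 2.5. A minimal sketch, with a made-up respondent:

```python
def sus_score(answers):
    """System Usability Scale score (0-100) for one respondent.

    `answers` holds the ten 1-5 responses in questionnaire order.
    Odd-numbered items contribute (answer - 1), even-numbered
    items contribute (5 - answer); the total is scaled by 2.5.
    """
    if len(answers) != 10:
        raise ValueError("SUS needs exactly 10 answers")
    total = 0
    for item_number, answer in enumerate(answers, start=1):
        if not 1 <= answer <= 5:
            raise ValueError("answers must be between 1 and 5")
        total += (answer - 1) if item_number % 2 == 1 else (5 - answer)
    return total * 2.5


# Hypothetical respondent who found the product fairly easy to use
one_respondent = [4, 2, 5, 1, 4, 2, 4, 2, 5, 1]
print(sus_score(one_respondent))
```

To report a product-level score, compute this per respondent and then average the results, rather than averaging the raw answers first.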

Practical usability questions: “How easy was it to find what you were looking for?” “Did you encounter any confusing steps during [specific task]?” “How would you rate the navigation of our product?” Jakob Nielsen’s usability heuristics, including visibility of system status and error prevention, are a good framework for generating these questions. For website-specific usability measurement, there’s a focused set of website usability survey questions that covers the right ground.

What Are Feature Request Survey Questions

Feature request questions help product teams prioritize what to build next. The Kano model classifies features into five categories: must-be, one-dimensional, attractive, indifferent, and reverse.

Use questions like: “If we added [feature], how would that affect your experience?” and “Which of these potential features would be most valuable to you?” paired with a ranking mechanism. Keep the list to 5-7 options. Beyond that, respondents start picking randomly. Applying conditional logic helps tailor follow-ups based on their initial selection.
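
Kano categories are usually derived from a pair of questions per feature: how the respondent would feel if the feature were present (functional) and how they would feel if it were absent (dysfunctional). This article doesn’t spell out that procedure, so treat the sketch below as one common reading of the standard Kano evaluation table, not a prescribed method. The answer labels and function names are assumptions, and contradictory answer pairs come back as “questionable,” which is a data-quality flag rather than a sixth feature category.

```python
from collections import Counter

# Assumed answer options, shared by both questions in the pair
ANSWERS = ("like", "expect", "neutral", "tolerate", "dislike")


def kano_category(functional, dysfunctional):
    """Classify one respondent's answer pair for one feature."""
    if functional not in ANSWERS or dysfunctional not in ANSWERS:
        raise ValueError("unknown answer option")
    # Liking (or disliking) both presence and absence is contradictory
    if functional == dysfunctional and functional in ("like", "dislike"):
        return "questionable"
    if functional == "like":
        return "one-dimensional" if dysfunctional == "dislike" else "attractive"
    if functional == "dislike" or dysfunctional == "like":
        return "reverse"
    if dysfunctional == "dislike":
        return "must-be"
    return "indifferent"


def dominant_category(answer_pairs):
    """Most frequent category across respondents for one feature."""
    tally = Counter(kano_category(f, d) for f, d in answer_pairs)
    return tally.most_common(1)[0][0]


# Hypothetical answers from five respondents about one feature
pairs = [
    ("like", "dislike"),
    ("like", "neutral"),
    ("like", "dislike"),
    ("expect", "dislike"),
    ("neutral", "neutral"),
]
print(dominant_category(pairs))
```

Taking the most frequent category is the simplest aggregation. When two categories are close, it’s worth looking at the full tally before committing roadmap time.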

FAQ on Feedback Survey Questions

What are feedback survey questions?

Feedback survey questions are structured prompts designed to collect opinions, ratings, and suggestions from customers, employees, or users. They appear in tools like SurveyMonkey, Google Forms, and Qualtrics to measure satisfaction, identify problems, and guide decisions based on real respondent data.

How many questions should a feedback survey have?

Most effective feedback surveys contain between 5 and 15 questions. Shorter surveys get higher response rates. Anything over 20 questions increases abandonment significantly. Match the length to the context: a post-purchase survey should be much shorter than an annual employee engagement survey.

What is the difference between open-ended and closed-ended survey questions?

Closed-ended questions offer predefined answers like Likert scales, multiple choice, or yes/no options. Open-ended questions let respondents write freely. Closed-ended gives you quantitative data for benchmarking. Open-ended gives you qualitative insights for understanding the “why” behind scores.

What is a good response rate for a feedback survey?

External customer surveys average 20-30% response rates according to Qualtrics benchmarks. Internal employee surveys reach 60-80%. In-app and post-interaction triggers perform best. Survey length, timing, mobile optimization, and whether you offer incentives all directly affect completion rates.

What is the Net Promoter Score question?

NPS asks: “On a scale of 0-10, how likely are you to recommend us to a friend?” Developed by Fred Reichheld at Bain & Company, it segments respondents into promoters (9-10), passives (7-8), and detractors (0-6) for a single loyalty metric.

How do I avoid bias in feedback survey questions?

Use neutral language. Avoid leading phrasing like “How great was your experience?” Ask about one thing per question to prevent double-barreled prompts. Randomize answer options where possible. Test questions with a small group before full distribution to catch assumptions you missed.
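
Randomizing answer options mostly applies to unordered choices, since shuffling an ordered scale like a Likert scale would confuse respondents. A minimal sketch of per-respondent shuffling that keeps catch-all options such as “Other” at the end; the function name, the pinned labels, and the sample options are all hypothetical.

```python
import random


def randomized_options(options, pinned_last=("Other", "None of the above"), rng=None):
    """Return the options in random order, keeping catch-all choices last.

    Only suitable for unordered (nominal) choices. Pass a seeded
    random.Random as `rng` to make the order reproducible.
    """
    rng = rng or random.Random()
    movable = [o for o in options if o not in pinned_last]
    pinned = [o for o in options if o in pinned_last]
    rng.shuffle(movable)
    return movable + pinned


# Hypothetical multiple-choice question
options = ["Price", "Ease of use", "Support quality", "Integrations", "Other"]
print(randomized_options(options, rng=random.Random(7)))
```

Store the order each respondent saw alongside their answer if you want to check later whether position affected the results.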

When is the best time to send a feedback survey?

Send post-purchase surveys within 24 hours. Customer support surveys immediately after resolution. Employee engagement surveys quarterly or biannually. Event feedback within 48 hours. Recency matters because the longer you wait, the less accurate and detailed the responses become.

What is the difference between CSAT, NPS, and CES?

CSAT measures satisfaction with a specific interaction. NPS measures overall loyalty and likelihood to recommend. Customer Effort Score measures how easy it was to accomplish a task. Each answers a different question. Most organizations use at least two of the three together.

Can I use AI-generated questions for feedback surveys?

AI tools can help draft initial question lists, but every question needs human review for clarity, bias, and contextual fit. Generic AI-generated prompts often lack the specificity needed for actionable data. Use AI as a starting point, then refine based on your actual audience and goals.

How do I analyze feedback survey results?

Quantitative responses get cross-tabulation and trend analysis across segments. Qualitative open-ended answers need thematic coding or sentiment analysis. Look for patterns across respondent groups, not individual outliers. Close the feedback loop by sharing findings and acting on the top-priority issues identified.
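
Cross-tabulation just means counting responses for every combination of two fields, for example customer segment against satisfaction. Survey tools and spreadsheets do this for you; the sketch below shows the idea with the standard library only. The field names `plan` and `csat` and the sample rows are invented for illustration.

```python
from collections import Counter


def crosstab(rows, row_key, col_key):
    """Count responses for each (row_key, col_key) combination.

    Returns a nested dict: table[row_label][col_label] -> count,
    with zeros filled in for combinations that never occurred.
    """
    counts = Counter((r[row_key], r[col_key]) for r in rows)
    row_labels = sorted({row for row, _ in counts})
    col_labels = sorted({col for _, col in counts})
    return {
        row: {col: counts[(row, col)] for col in col_labels}
        for row in row_labels
    }


# Hypothetical survey export: one dict per respondent
responses = [
    {"plan": "free", "csat": "satisfied"},
    {"plan": "free", "csat": "dissatisfied"},
    {"plan": "free", "csat": "dissatisfied"},
    {"plan": "paid", "csat": "satisfied"},
    {"plan": "paid", "csat": "satisfied"},
]
table = crosstab(responses, "plan", "csat")
for plan, row in table.items():
    print(plan, row)
```

In this made-up data, dissatisfaction is concentrated among free-plan users, which is the kind of segment-level pattern an overall average would hide.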

Conclusion

The right feedback survey questions give you a direct line to what customers, employees, and users actually think. Not what you assume they think.

Whether you’re measuring loyalty with NPS, tracking effort with CES, or running quarterly pulse surveys through Typeform or Qualtrics, the quality of your questions determines the quality of your data.

Keep questions specific. One focus per question. Match the scale to what you’re measuring.

Use feedback form templates to speed up deployment, but always customize the wording to fit your audience and context. Generic questions get generic answers.

Collect responses, run your sentiment analysis or cross-tabulation, and close the loop. The organizations that act on survey data, not just collect it, are the ones that see real improvement in retention, satisfaction, and long-term growth.

Start with five focused questions. Build from there.